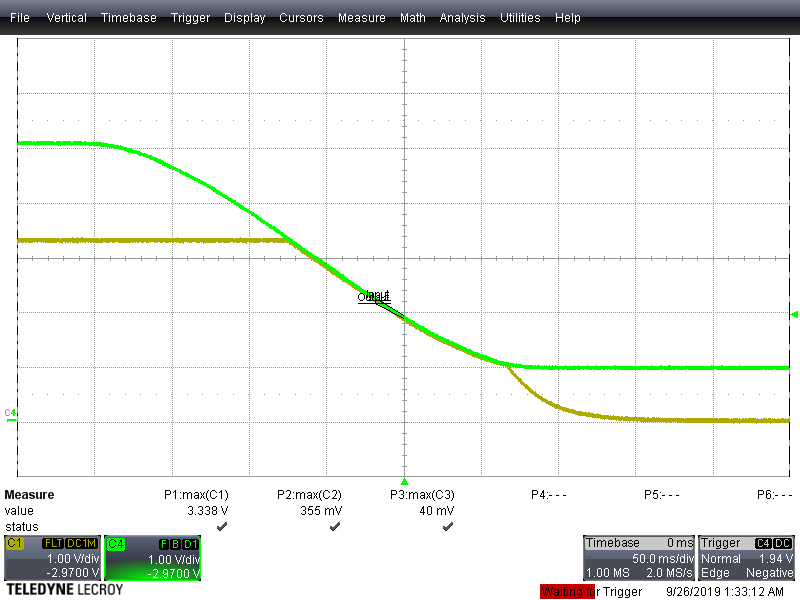

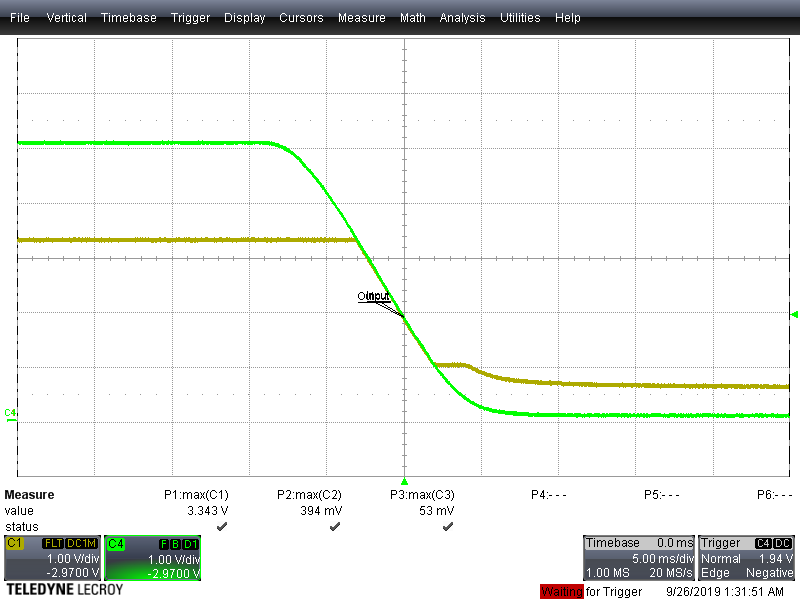

This is not my area of expertise, so please bear with me. I am running the LDO at a 3.3 V output from a 3.5 V input. I probe the IN and OUT pins during a power cycle (ON...OFF...ON...OFF). During the first OFF, the OUT pins hold at ~500 mV for about 10 seconds before decaying to zero. If I power ON during that 10-second window, the LDO output drops to 0 V instead of supplying 3.3 V. I am following the circuit topology in the datasheet for ANY-OUT operation at 3.3 V (Fig. 43). I am just trying to understand what would pull the output down when I am seeing +3.5 V at the input, and why this is triggered while the output is holding at 500 mV.

The power supply decays steadily when switched off, and the LDO output holds at 500 mV until the supply line decays below about 280 mV.

I could add a bleed resistor to speed up the capacitor discharge, but I want to better understand what is happening here.
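For reference, this is the rough RC estimate I would use to size a bleed resistor. It is only a sketch: the 47 µF output capacitance, the 50 mV "discharged" threshold, and the 100 ms target are placeholder assumptions, not values from my board, and it treats the output as an ideal capacitor discharging through the resistor alone (it ignores any path through the LDO itself).

```python
import math

def discharge_time(r_ohms, c_farads, v_start, v_end):
    """Seconds for an ideal RC to decay from v_start to v_end."""
    return r_ohms * c_farads * math.log(v_start / v_end)

def bleed_resistor_for(t_seconds, c_farads, v_start, v_end):
    """Resistance (ohms) that discharges c_farads from v_start to v_end in t_seconds."""
    return t_seconds / (c_farads * math.log(v_start / v_end))

# Placeholder values (assumptions, not measured on my board).
C_OUT = 47e-6      # assumed total output capacitance, farads
V_START = 0.5      # the ~500 mV plateau I observe on OUT
V_END = 0.05       # assumed level I would treat as "discharged"
T_TARGET = 0.1     # assumed acceptable discharge time, seconds

r_bleed = bleed_resistor_for(T_TARGET, C_OUT, V_START, V_END)
i_standing = 3.3 / r_bleed  # extra load current while the output is at 3.3 V

print(f"Bleed resistor: {r_bleed:.0f} ohm")
print(f"Standing current at 3.3 V: {i_standing * 1e3:.2f} mA")
```

The trade-off is that a smaller resistor discharges the output faster but draws more standing current whenever the regulator is on.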

Thank you!