Other Parts Discussed in Thread: GPCCEDV, BQ78350-R1, BQ78350

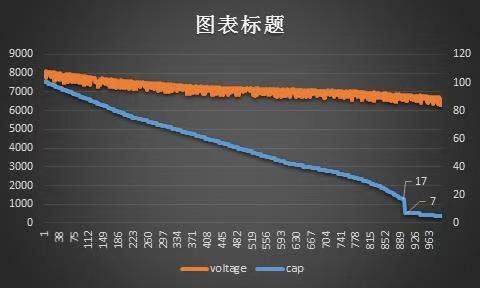

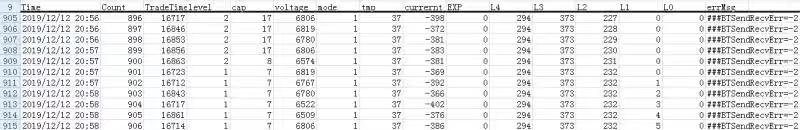

Hi, my customer is using the BQ4050 to gauge a 2-series (2S) battery pack, and the reported capacity suddenly jumps from 17% to 7%.

The load does not noticeably increase or decrease at the time of the jump, as shown in the screenshots above.

What could cause such a jump?

What information do we need to provide to find the cause of this jump?