Other Parts Discussed in Thread: GPCCHEM, EV2400, BQSTUDIO, BQ27Z561-R1,

Hello,

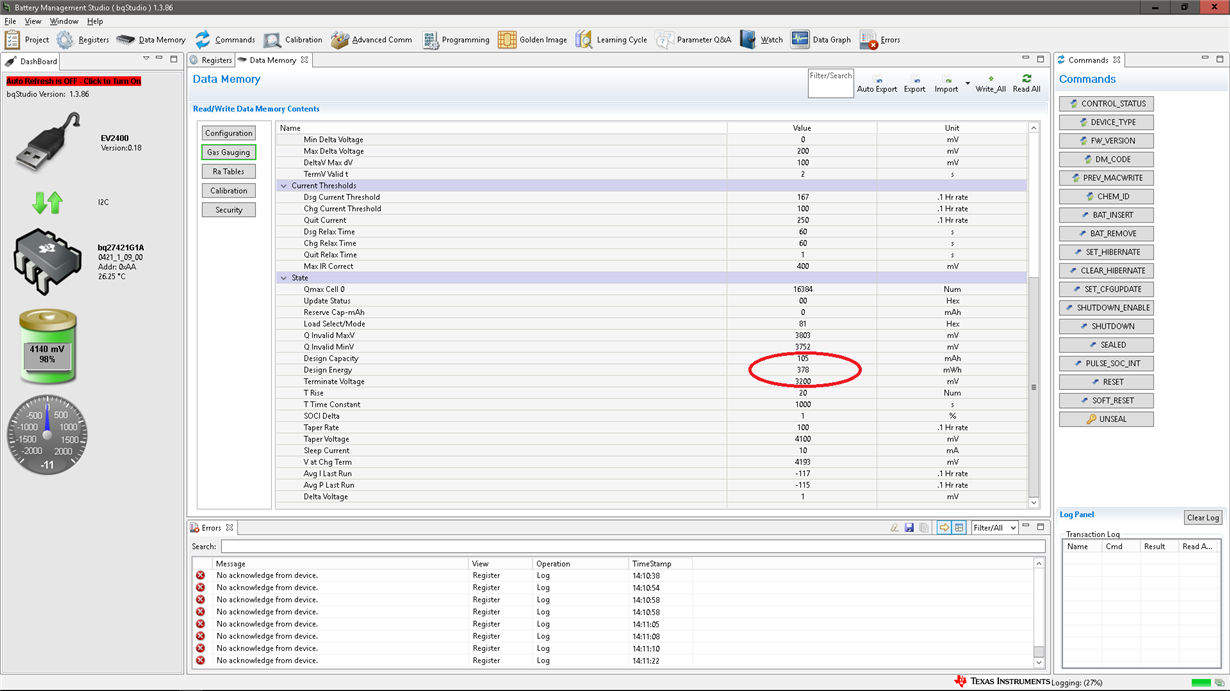

I'm using a bq27421-G1A fuel gauge in an embedded project.

The battery is a LiPo cell with a maximum charge voltage of 4.2 V (3.7 V nominal) and a capacity of 110 mAh (407 mWh). The terminate voltage of our design is 3.2 V.

Capacity, energy, and terminate voltage have been programmed into the fuel gauge via I2C. We didn't explicitly set the taper rate, as the default is fine for us. We also read the values back to verify that they were set correctly.
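
For reference, this is roughly the encoding step involved in that write. It is a minimal sketch, not our actual firmware: `patch_state_block` is a made-up helper name, and the offsets (10, 12, and 16 within the "State" subclass) are what I understand from the bq27421-G1 technical reference, so please treat them as assumptions. The sketch only shows that data-memory values are stored big-endian and that the block checksum is 255 minus the 8-bit sum of the 32 block bytes.

```python
import struct

# Assumed offsets inside the 32-byte "State" data block (subclass 82).
# These are assumptions from the technical reference; verify before use.
DESIGN_CAPACITY_OFFSET = 10    # mAh
DESIGN_ENERGY_OFFSET = 12      # mWh
TERMINATE_VOLTAGE_OFFSET = 16  # mV


def patch_state_block(block, capacity_mah, energy_mwh, terminate_mv):
    """Return (new_block, checksum) for a 32-byte data block.

    Hypothetical helper: it only edits bytes in memory and performs no I2C access.
    """
    if len(block) != 32:
        raise ValueError("data block must be exactly 32 bytes")
    new_block = bytearray(block)
    # Data-memory words are stored most-significant byte first.
    struct.pack_into(">H", new_block, DESIGN_CAPACITY_OFFSET, capacity_mah)
    struct.pack_into(">H", new_block, DESIGN_ENERGY_OFFSET, energy_mwh)
    struct.pack_into(">H", new_block, TERMINATE_VOLTAGE_OFFSET, terminate_mv)
    # Block checksum: 255 minus the low byte of the sum of all 32 bytes.
    checksum = 255 - (sum(new_block) % 256)
    return bytes(new_block), checksum


# Example with our values and an all-zero block standing in for the real one.
block, checksum = patch_state_block(bytes(32), 110, 407, 3200)
```

On the real device, `block` would be the 32 bytes read from the block data area, and `checksum` would be written to the block checksum register afterwards.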

After programming, we fully discharged and recharged the battery once, as suggested by the documentation.

During the next discharge, we continuously read the voltage, SOC, and average current from the fuel gauge and put them in a table:

Voltage is scaled on the left side of the diagram, the other values on the right (so SOC dropped from 96 to 2).
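
In case the way the values were read matters, here is a minimal sketch of how one sample can be decoded. The command codes (0x04 for Voltage, 0x10 for AverageCurrent, 0x1C for StateOfCharge) are what I understand from the bq27421-G1 datasheet, and `read_bytes` is a hypothetical stand-in for whatever I2C read routine the host provides; neither is meant as an authoritative reference.

```python
import struct

# Standard command codes as I understand them from the datasheet (assumption).
CMD_VOLTAGE = 0x04          # mV, unsigned
CMD_AVERAGE_CURRENT = 0x10  # mA, signed (negative while discharging)
CMD_STATE_OF_CHARGE = 0x1C  # percent, unsigned


def read_sample(read_bytes):
    """Read one (voltage, current, SOC) sample.

    read_bytes(command, length) -> bytes is a hypothetical I2C helper
    supplied by the caller. Standard command results are little-endian.
    """
    (voltage_mv,) = struct.unpack("<H", read_bytes(CMD_VOLTAGE, 2))
    (current_ma,) = struct.unpack("<h", read_bytes(CMD_AVERAGE_CURRENT, 2))
    (soc_percent,) = struct.unpack("<H", read_bytes(CMD_STATE_OF_CHARGE, 2))
    return {
        "voltage_mv": voltage_mv,
        "current_ma": current_ma,
        "soc_percent": soc_percent,
    }
```

The important detail is that the average current is a signed 16-bit value, while voltage and SOC are unsigned.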

While the power consumption of the device was constant most of the time and the voltage dropped more or less linearly, the SOC dropped too fast in the middle of the discharge and barely changed in the last third. Considering that the battery wasn't empty when we stopped the measurement (as the terminate voltage was set to 3.2 V), the SOC was at or below 10% when the battery was actually still half full.
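
One rough way to cross-check the reported SOC is plain coulomb counting over the logged average current. The following is only a sketch under simplifying assumptions: it ignores temperature, aging, and any deviation of the real capacity from the nominal 110 mAh, and it is not how the gauge itself computes SOC. The function name and the `(time_s, current_ma)` sample layout are made up for illustration.

```python
def coulomb_counted_soc(samples, start_soc_percent, capacity_mah):
    """Estimate SOC over time by integrating the current.

    samples: list of (time_s, current_ma) tuples in time order,
             with negative current meaning discharge.
    Returns one SOC estimate (in percent) per sample.
    """
    soc = [float(start_soc_percent)]
    charge_mah = 0.0
    for (t0, i0), (t1, i1) in zip(samples, samples[1:]):
        # Trapezoidal integration; dividing by 3600 converts mA*s to mAh.
        charge_mah += 0.5 * (i0 + i1) * (t1 - t0) / 3600.0
        soc.append(start_soc_percent + 100.0 * charge_mah / capacity_mah)
    return soc
```

With a roughly constant load, this estimate falls almost linearly over time, which makes it easy to see where the gauge's SOC curve departs from it.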

During charging, we got a similar table:

Scaling as above.

At the start, there were some odd readings that I'm willing to ignore. After that, while the charging current was again quite stable and the voltage rose more or less linearly, the SOC again behaved incorrectly: it rose too slowly in the first half and then sprinted to catch up.

I know that the fuel gauge doesn't derive the SOC directly from the voltage but uses internal math and simulations. Unfortunately, these simulations don't seem to get it right in my case. Do you have any idea what might have gone wrong here?

Regards,

Lasse