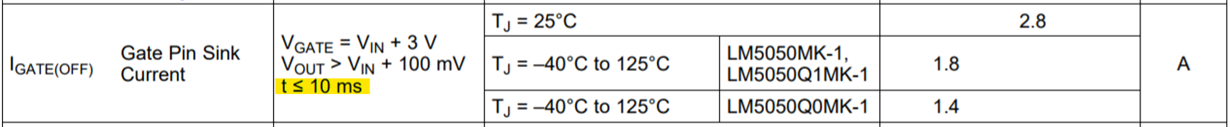

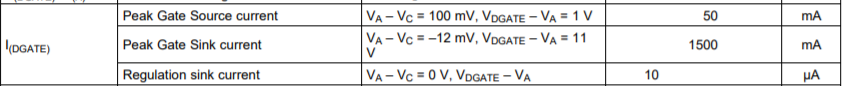

I have a design challenge where I would like to use the LM5050 as an ideal-diode controller, but with a much shorter turn-on time. The design already has a +12 V floating supply above the primary bus voltage. My thought is to connect this floating supply to the GATE pin through a 1k resistor. When the LM5050 is OFF, it can sink up to 2 A, so it should be able to handle the 12 mA bias current coming from the floating supply. When the LM5050 turns on, the MOSFET gate is then driven primarily through the 1k resistor rather than by the LM5050's internal charge pump.

I have simulated this with three high-power MOSFETs in parallel (so a lot of gate charge is needed to turn them fully on), and the turn-on time dropped from 3 ms to 100 µs. The simulation also shows that the nominal 20 mV servo voltage across Vds is reduced, since, at least in simulation, the LM5050 does not appear to sink current through GATE to hold the 20 mV drop it normally regulates to. Reverse protection appears to operate as expected.
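As a sanity check on the numbers above, here is a rough Python sketch of the resistor-bias arithmetic. The 12 V and 1k values are from the post; the total gate capacitance (`C_GATE_TOTAL`) and the target gate voltage (`V_GATE_TARGET`) are hypothetical placeholders I picked for illustration, not values from the actual MOSFETs, and the simple RC model ignores the Miller plateau.

```python
import math

V_BIAS = 12.0            # V, floating supply above the bus (from the post)
R_PULLUP = 1e3           # ohm, resistor from floating supply to GATE (from the post)
C_GATE_TOTAL = 30e-9     # F, ASSUMED lumped gate capacitance of three MOSFETs
V_GATE_TARGET = 10.0     # V, ASSUMED gate voltage counted as "fully on"

def bias_current(v_bias, r_pullup):
    """Current the LM5050 must sink while it holds GATE low."""
    return v_bias / r_pullup

def resistor_power(v_bias, r_pullup):
    """Power dissipated in the pull-up while GATE is held low."""
    return v_bias ** 2 / r_pullup

def rc_charge_time(r_pullup, c_gate, v_bias, v_target):
    """Time for a simple RC charge from 0 V to v_target (no Miller plateau)."""
    return -r_pullup * c_gate * math.log(1.0 - v_target / v_bias)

if __name__ == "__main__":
    print(f"bias current: {bias_current(V_BIAS, R_PULLUP) * 1e3:.1f} mA")
    print(f"resistor power: {resistor_power(V_BIAS, R_PULLUP) * 1e3:.0f} mW")
    t_on = rc_charge_time(R_PULLUP, C_GATE_TOTAL, V_BIAS, V_GATE_TARGET)
    print(f"RC charge time to target: {t_on * 1e6:.1f} us")
```

With the real gate charge from the MOSFET datasheet substituted in, this gives a quick estimate to compare against the simulated turn-on time, and it shows that the resistor dissipates roughly 144 mW whenever the gate is held off.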

Is there anything I am missing that would make a circuit like this inadvisable?

EDIT: I should add that there will be current monitoring in line with this circuit and, for reasons relating to the rest of the design, the LM5050 will be commanded OFF when the current falls below 3 A. So the reverse-polarity protection comes as a side effect of the external circuitry. Otherwise, yes, the hard-biased-on MOSFET would have to carry a lot of reverse current before Vds reached the LM5050's -28 mV threshold and the gate was shut off. In a TINA simulation of a 100 µs external surge event, this design nominally turns the MOSFET off about 500 ns sooner than a bare LM5050, which has to wait for the -28 mV threshold before turning off.
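To put a rough number on "a lot of reverse current", here is a small sketch. The -28 mV threshold and the 3 A cutoff are from the post; the per-device on-resistance (`RDS_ON_EACH`) is a hypothetical value chosen only for illustration, so substitute the figure for the actual MOSFETs.

```python
V_REVERSE_TRIP = 0.028   # V, magnitude of the reverse threshold (from the post)
I_CUTOFF = 3.0           # A, current below which the LM5050 is commanded OFF (from the post)
RDS_ON_EACH = 3e-3       # ohm, ASSUMED on-resistance of each MOSFET
N_PARALLEL = 3           # three MOSFETs in parallel (from the post)

def parallel_rds_on(rds_on_each, n_parallel):
    """Combined on-resistance of identical MOSFETs in parallel."""
    return rds_on_each / n_parallel

def reverse_current_to_trip(v_trip, rds_on_each, n_parallel):
    """Reverse current needed before Vds reaches the trip threshold."""
    return v_trip / parallel_rds_on(rds_on_each, n_parallel)

def forward_drop(i_load, rds_on_each, n_parallel):
    """Vds across the fully enhanced MOSFETs at a given forward current."""
    return i_load * parallel_rds_on(rds_on_each, n_parallel)

if __name__ == "__main__":
    i_rev = reverse_current_to_trip(V_REVERSE_TRIP, RDS_ON_EACH, N_PARALLEL)
    v_fwd = forward_drop(I_CUTOFF, RDS_ON_EACH, N_PARALLEL)
    print(f"reverse current to reach threshold: {i_rev:.1f} A")
    print(f"forward drop at cutoff current: {v_fwd * 1e3:.1f} mV")
```

Under that assumed on-resistance, the fully enhanced MOSFETs would need tens of amps of reverse current before the LM5050 reacted on its own, which is why the external 3 A cutoff is doing the real work here.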

Thanks

Will