Other Parts Discussed in Thread: BQ34Z100

Hello, I am working on a battery project that uses a BQ34Z100 fuel gauge. My design uses a 6S pack of 8000mAh cells.

My board uses a 1mOhm shunt. This is lower than recommended for this part, but it is used, for example, in this reference design: www.ti.com/.../tidueg7b.pdf, and it is required for my current levels.

I've done the following so far:

I configured the parameters as described in section 8.2.2 of the setup guide. In particular, I changed the following:

Design Capacity: 2000mAh (I am using 4x current scaling, so 8000mAh / 4 = 2000mAh)

Design Energy: with 4x current scaling, 2000mAh * 3.6V * 6 cells = 43200mWh. I have also scaled this by 10, so the value actually entered is 4320.

Number of Series Cells: 6

VOLSEL set to 1

Flash Update OK Cell Volt = 2400mV

Load Select: 3 (my application sometimes runs a continuous slow discharge of about 1/8C and sometimes a dynamic load averaging 2C, so would the average current be the best fit?)

Load Mode: 0

Cell Terminate Voltage: 3000mV

Taper Current: 100mA (400mA actual = C/20, divided by 4)

CC Threshold: 1800mAh (7200mAh actual = 90% of design capacity, divided by 4)

Pack Configuration: 09d9

Pack Configuration B: af

Pack Configuration C: 37

FS Wait: 10 sec (I want to minimize quiescent current, so I command the gauge into full sleep whenever the battery is not plugged in)

QMax: 2070 (I set it to 2000mAh at the start, and I think the gauge updated it automatically)

Deadband: 30mA (I raised this from the 5mA default because I think the default was causing problems such as packs drifting in SoC while sitting asleep). I noticed that the 13S reference design mentioned above sets it to 15mA, and other posts recommend raising it for designs with a low-value sense resistor.
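For reference, the scaled values above can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming every current and capacity parameter is divided by the same 4x factor and that Design Energy is additionally divided by 10; all constant and function names are hypothetical. The deadband helper further assumes the gauge compares Deadband against its already-scaled current reading, so an entered value of 30 would correspond to 120mA actual, or about 120µV across the 1mOhm shunt. That assumption should be checked against TI's documentation.

```python
# Hypothetical helpers for a current-scaled BQ34Z100 setup (4x scaling, as in this post).
# Assumption: all current/capacity parameters share one scale factor, and
# Design Energy is additionally divided by its own scale (10 here).

CURRENT_SCALE = 4          # current scaling factor used in this design
ENERGY_SCALE = 10          # Design Energy scale used in this design
PACK_CAPACITY_MAH = 8000   # actual cell capacity (cells are in series)
NOMINAL_CELL_V = 3.6       # nominal cell voltage
SERIES_CELLS = 6
SHUNT_OHMS = 0.001         # 1mOhm sense resistor


def scaled_params() -> dict:
    """Return the data flash values implied by the scaling factors above."""
    design_cap = PACK_CAPACITY_MAH // CURRENT_SCALE                   # 2000 mAh
    design_energy_mwh = design_cap * NOMINAL_CELL_V * SERIES_CELLS    # 43200 mWh
    return {
        "Design Capacity": design_cap,
        "Design Energy": round(design_energy_mwh / ENERGY_SCALE),     # 4320
        "Taper Current": PACK_CAPACITY_MAH // 20 // CURRENT_SCALE,    # C/20 -> 100
        "CC Threshold": round(PACK_CAPACITY_MAH * 0.9) // CURRENT_SCALE,  # 90% -> 1800
    }


def to_actual(scaled_value: float) -> float:
    """Convert a scaled current (mA) or capacity (mAh) value back to actual units."""
    return scaled_value * CURRENT_SCALE


def deadband_shunt_uv(deadband_scaled_ma: float) -> float:
    """Shunt voltage in microvolts at the deadband current.

    Assumes the deadband is compared against the scaled current reading."""
    actual_amps = to_actual(deadband_scaled_ma) / 1000
    return actual_amps * SHUNT_OHMS * 1e6
```

Under these assumptions, `to_actual(2070)` gives 8280, so the learned QMax corresponds to roughly 8280mAh actual, about 3.5% above the 8000mAh rating.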

I also changed the following to the values recommended in section 8.2.4, since my gauge is always attached to the battery:

Max Res Factor 15

Min Res Factor 3

Max Res Scale 5000

Min Res Scale 200

Max QMAX Change 30

Chemistry ID is 2145, selected using the GPCHEM tool and process, with a good match (Max DOD Error of 1.59%).

Other params I am thinking about changing are:

FC Set %: 100%. I think I should change this to -1 so that full charge is detected from the actual current taper rather than from reaching 100% SoC.

Design Resistance: 42mOhm default. Should I change this?

Load Select: I was thinking about changing this back to 1 (the default). How much does this matter?

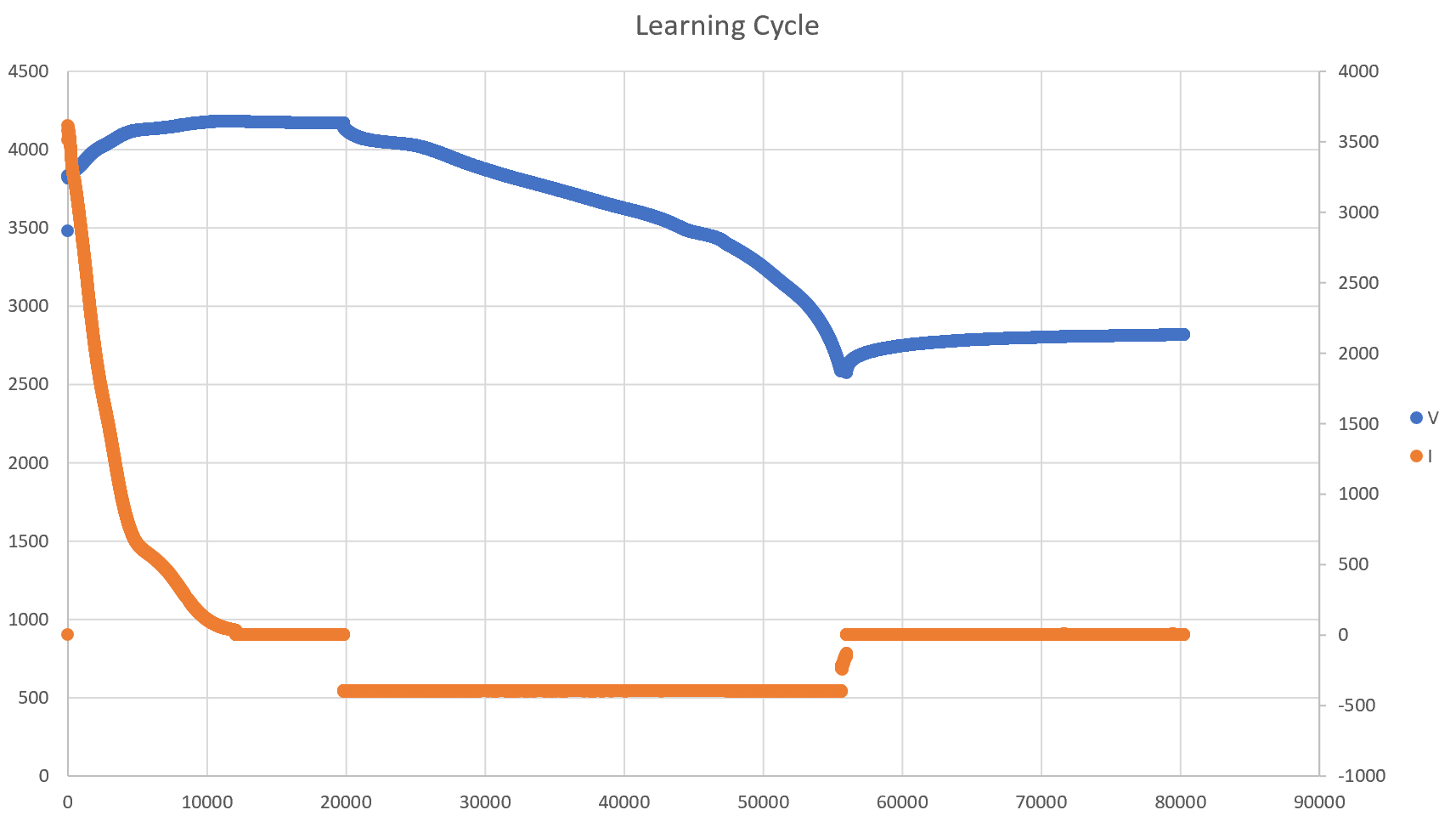

I then ran a learning cycle, which completed properly and reached learned state 6.

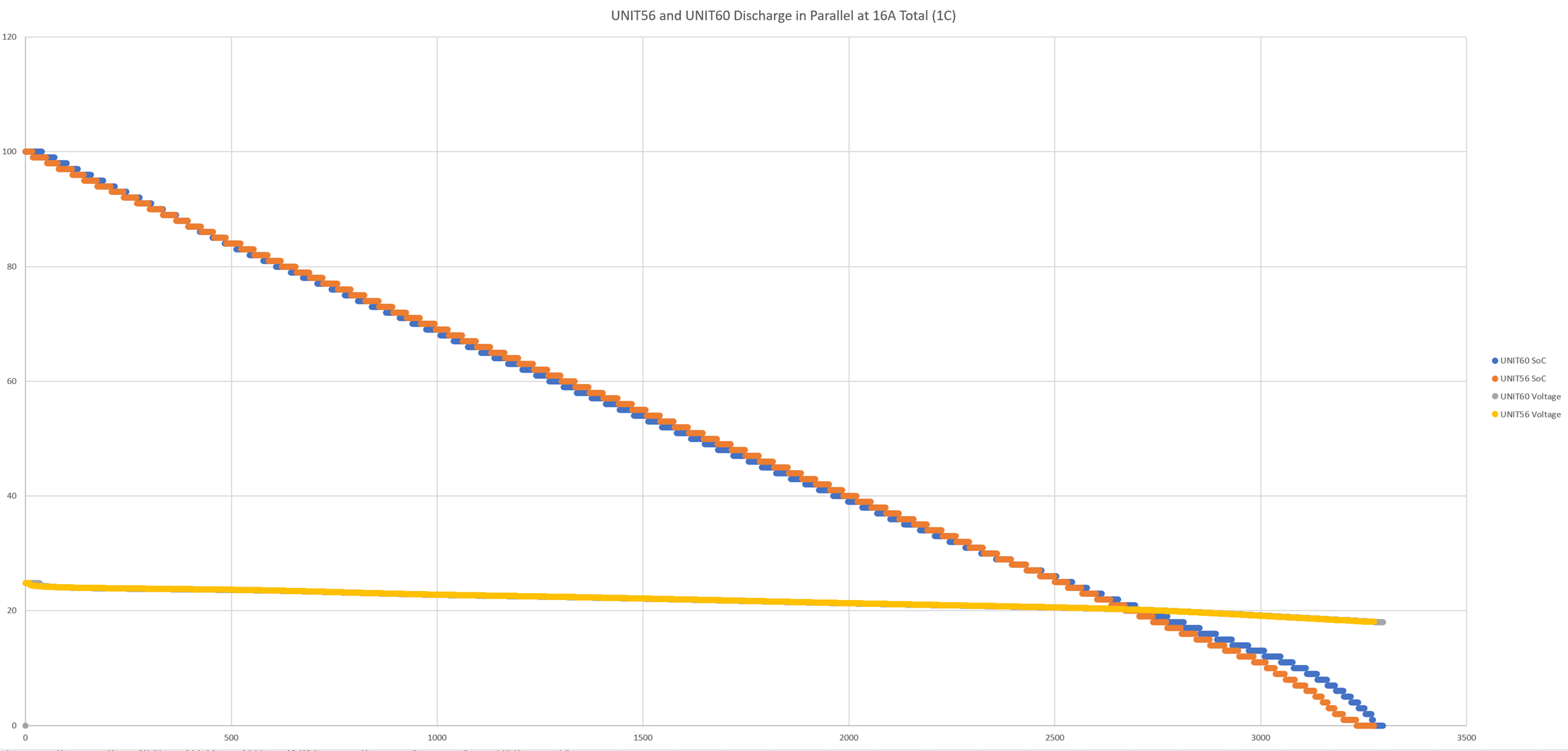

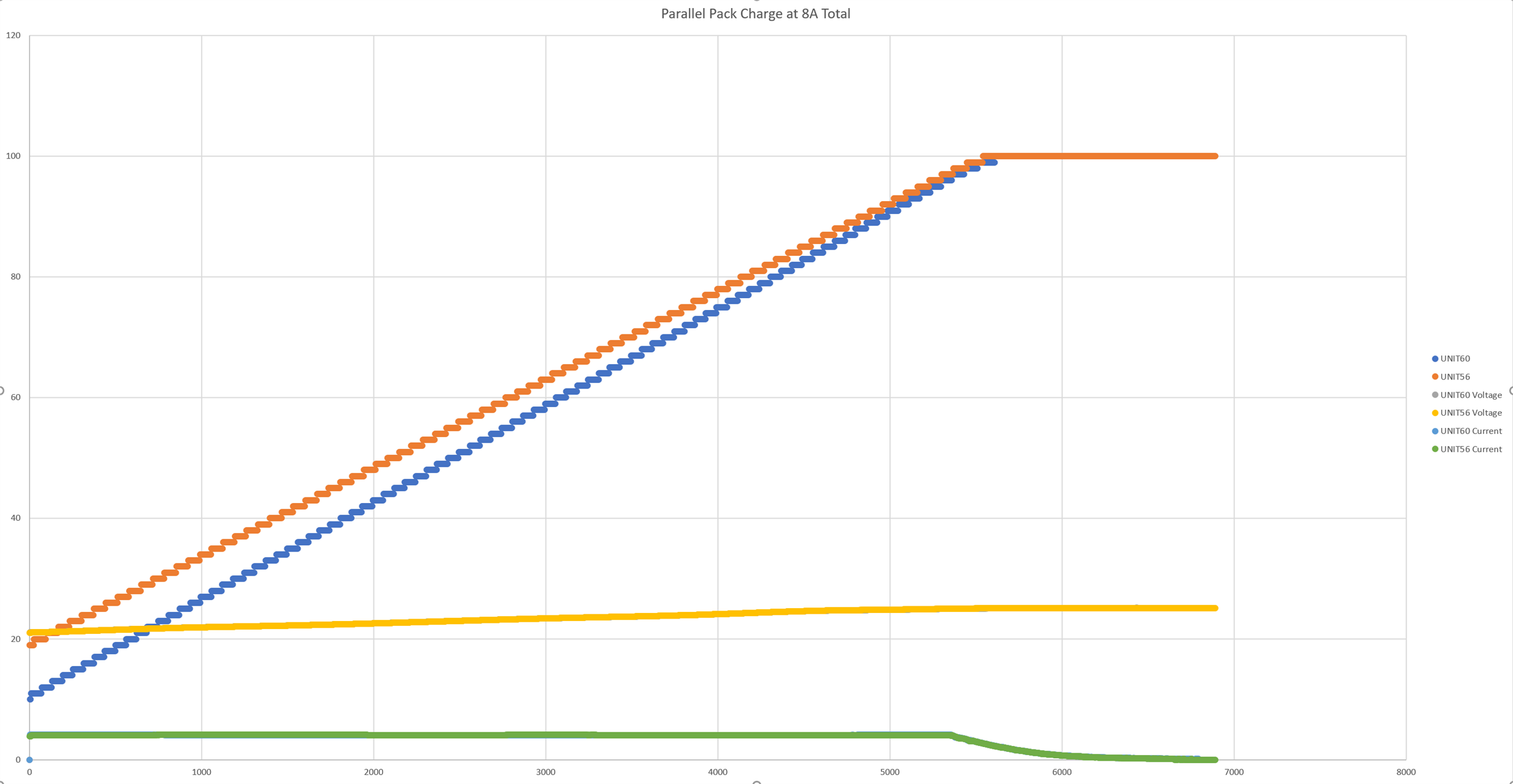

Overall this seems to work fairly well, and I get some good graphs showing SoC decreasing properly. However, sometimes after sitting for a while, packs drift to SoC values that don't make sense. After resting, shouldn't the gauge take an OCV reading, adjust the SoC slightly, and hold it at that value until the voltage or current changes significantly? Why would SoC keep changing without any discharge? When I charge or discharge these packs, they also sometimes reach 0% or 100% before the end of discharge or charge. It is very frustrating for users to see packs at very different SoC values at the same voltage.
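To put numbers on the drift while packs sit, a host-side log can be scanned for rest periods and the SoC change across each one reported. This is a minimal sketch, assuming the host already logs the gauge's voltage, current and SoC readings periodically; the `Sample` type, the function names, and both thresholds are hypothetical (the 30 default simply mirrors the Deadband above).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Sample:
    t_s: float        # timestamp in seconds
    voltage_mv: int   # pack voltage reported by the gauge
    current_ma: int   # gauge current reading (scaled units)
    soc_pct: int      # gauge state of charge


def rest_soc_drift(samples: List[Sample], rest_ma: int = 30) -> List[Tuple[float, float, int]]:
    """Return (start_t, end_t, soc_change) for each contiguous rest period.

    A sample counts as 'at rest' when |current| is below rest_ma."""
    periods = []
    start: Optional[Sample] = None
    last: Optional[Sample] = None
    for s in samples:
        if abs(s.current_ma) < rest_ma:
            if start is None:
                start = s
            last = s
        elif start is not None:
            periods.append((start.t_s, last.t_s, last.soc_pct - start.soc_pct))
            start = None
    if start is not None:  # log ended while still at rest
        periods.append((start.t_s, last.t_s, last.soc_pct - start.soc_pct))
    return periods


def flag_drift(samples: List[Sample], rest_ma: int = 30,
               max_change_pct: int = 2) -> List[Tuple[float, float, int]]:
    """Keep only rest periods whose SoC moved by more than max_change_pct."""
    return [p for p in rest_soc_drift(samples, rest_ma) if abs(p[2]) > max_change_pct]
```

Logging voltage alongside SoC also makes it easy to show packs that report very different SoC at the same rested voltage.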

How many cycles should I run on newly produced packs to help them learn and become more accurate? Is this required? How many cycles should I run on the pack used for the golden image after its learning cycle is complete, and will this help? Or is the error probably just a matter of not running enough cycles before creating the golden image?

Also, I have been monitoring the Ra tables, and they seem to vary quite a bit between packs. Is this normal? See the attached plot:
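One way to quantify "vary quite a bit" is to compute, for each Ra grid point, the spread across packs relative to the lowest value. This is a minimal sketch, assuming the Ra tables have already been exported from each pack as lists of integers; the function name and the example values in any usage are hypothetical, and the function does not assume a particular number of grid points.

```python
from typing import Dict, List


def ra_spread_pct(tables: Dict[str, List[int]]) -> List[float]:
    """For each Ra grid point, return (max - min) / min * 100 across all packs.

    Raises ValueError if the tables do not all have the same length."""
    lengths = {len(t) for t in tables.values()}
    if len(lengths) != 1:
        raise ValueError("need at least one table, all with the same number of grid points")
    n_points = lengths.pop()
    spread = []
    for i in range(n_points):
        column = [t[i] for t in tables.values()]
        lo, hi = min(column), max(column)
        # A zero entry makes the relative spread undefined; report infinity.
        spread.append((hi - lo) / lo * 100 if lo > 0 else float("inf"))
    return spread
```

A per-point percentage like this is easier to compare between packs than raw table values, since Ra naturally rises toward the deep-discharge end of the table.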

I could send my entire data flash/parameter files to a TI expert if needed. I appreciate any feedback!