Part Number: BQ40Z50-R3-DEVICE-FW

Hi!

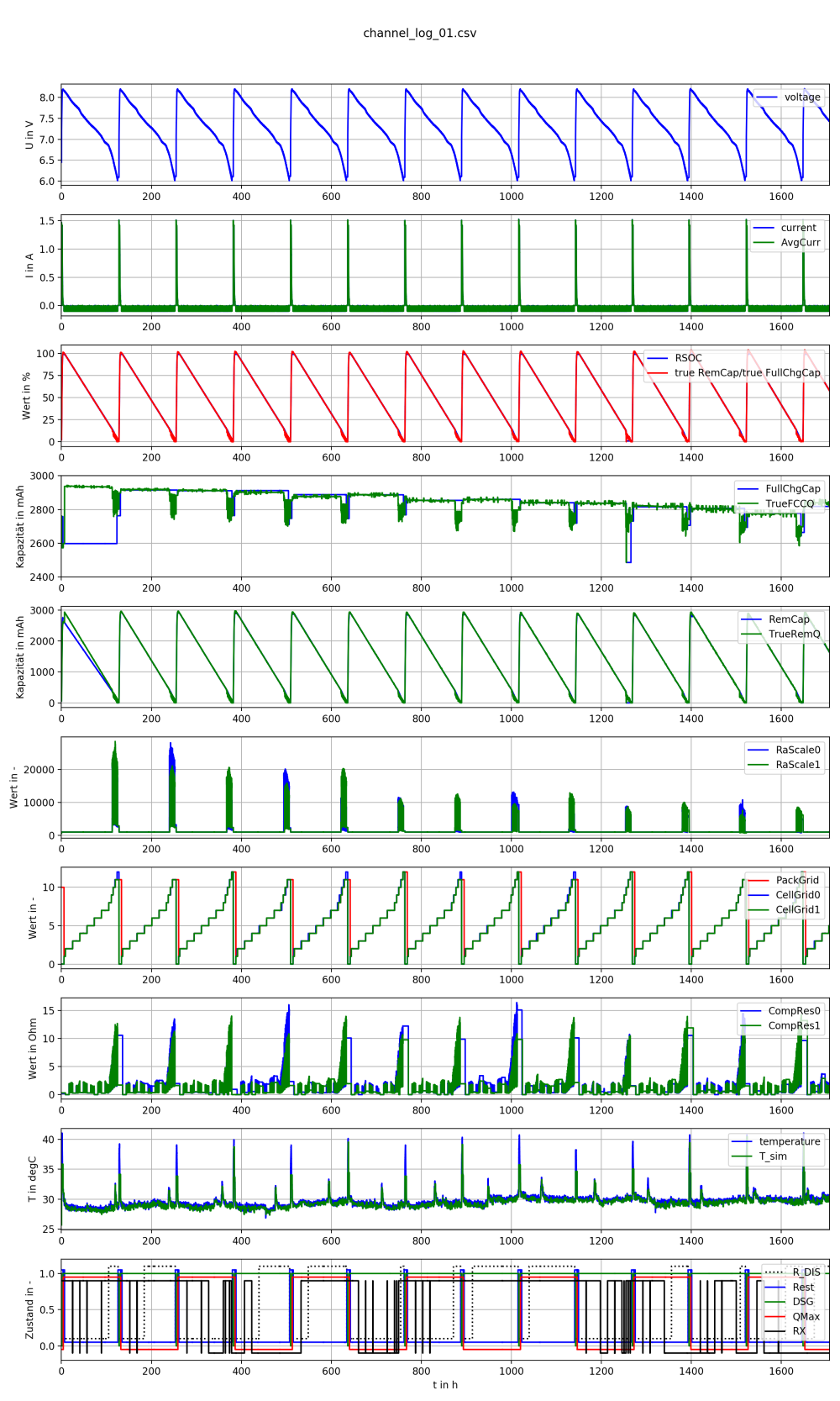

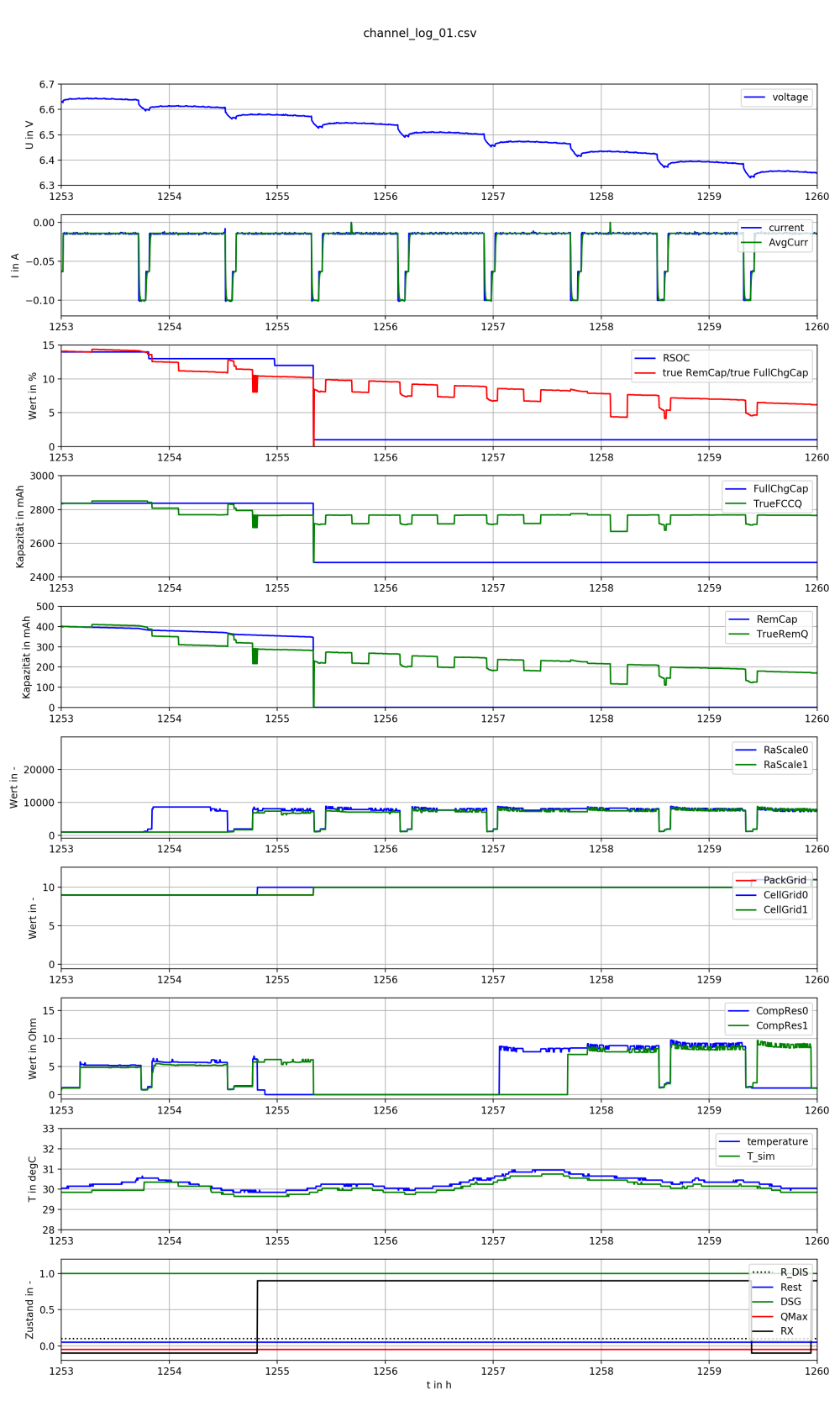

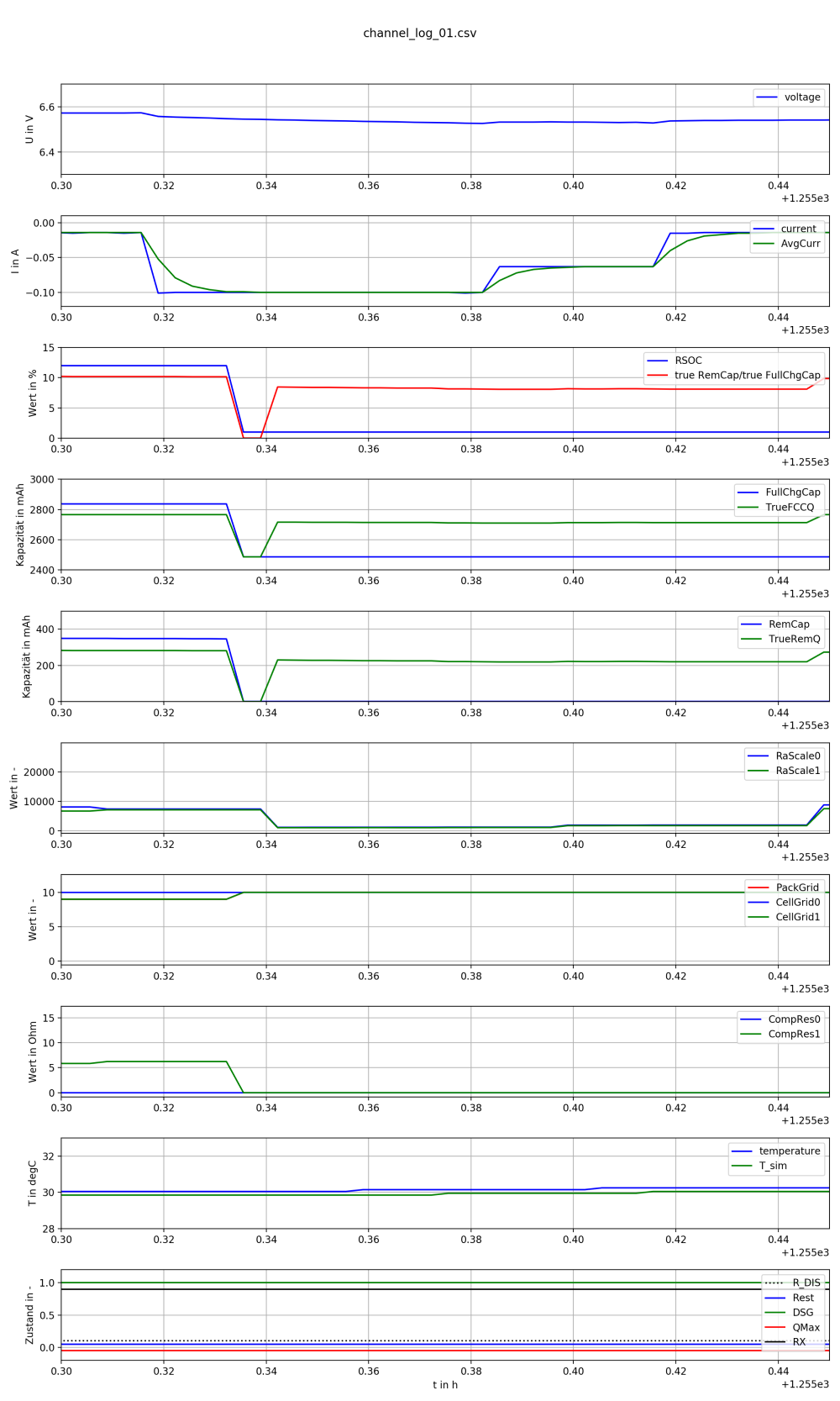

We are currently running long-term tests on our battery pack (bq40z50-r3, 2 Li-Ion cells in series). In one cycle, RSOC dropped from 10% to 1% far too early and then remained at 1% (see the attached figures). Unfortunately, this is a problem in our application.

In this context we have the following questions:

1) Why do the Remaining Capacity and RSOC drop so sharply here?

2) Why does the True Remaining Capacity (TrueRemQ) recover, whereas the Remaining Capacity (RemCap) stays low? Smoothing is enabled.

3) What can we do to prevent this behavior (e.g. a configuration change)?

Best regards

Bernhard