Hi.

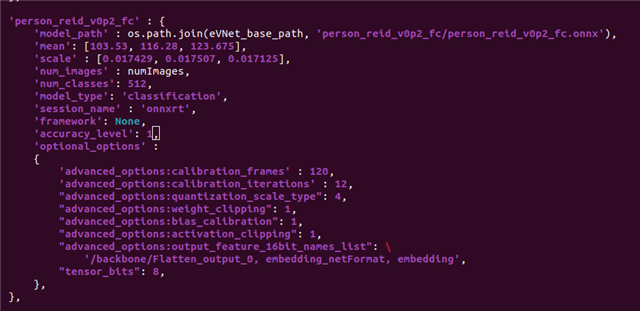

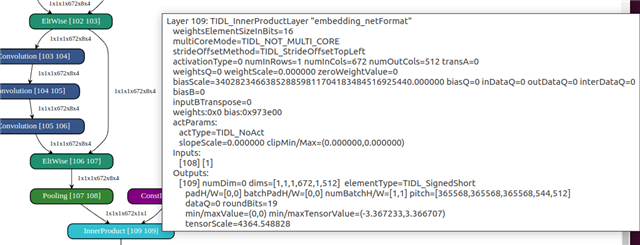

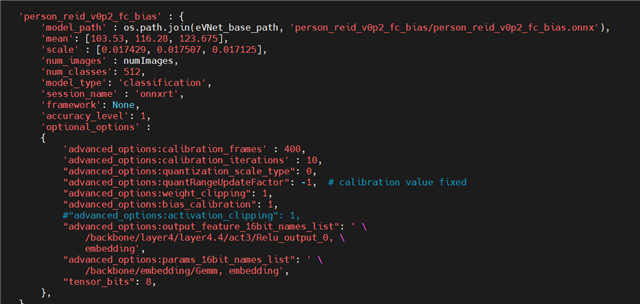

I trained a recognition model for embedding extraction and quantized it, but in the Inner Product layer the biasScale jumps to the maximum value representable in FP32. The backbone is RegNetX-1200MF. ONNX inference works fine, but the problem appears every time I quantize the model. I’m using SDK 9.2, and I set features_bit to 16 for the embedding extraction layer. What could be the problem?