I'm using the C6678 as a root complex. The DSP and an FPGA are connected to a PCIe switch. The FPGA writes to DSP memory (posted writes), and I am trying to optimize the throughput.

The document SPRABK8 suggests that the payload size for inbound transfers can be increased from 128 bytes to 256 bytes, which reduces the per-packet overhead.
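The expected gain can be estimated with a rough calculation. The sketch below is not taken from SPRABK8. It assumes a fixed per-TLP framing cost for a posted memory write: 1 B STP, 2 B sequence number, a 12 B header (3 DW, 32-bit addressing) or 16 B header (4 DW, 64-bit addressing), 4 B LCRC and 1 B END. It ignores ECRC, DLLPs (ACK/NAK, flow-control updates) and 8b/10b encoding, so it gives only an upper bound on link efficiency.

```python
# Rough link-efficiency estimate for posted memory write TLPs.
# Assumed per-TLP overhead: STP (1) + sequence number (2) + header + LCRC (4) + END (1).
# ECRC, DLLP traffic and 8b/10b line encoding are deliberately ignored.

def tlp_overhead(addr64: bool = False) -> int:
    """Bytes of framing/header added to each write TLP (assumption, see above)."""
    header = 16 if addr64 else 12  # 4 DW header for 64-bit, 3 DW for 32-bit addresses
    return 1 + 2 + header + 4 + 1


def write_efficiency(payload: int, addr64: bool = False) -> float:
    """Fraction of transmitted TLP bytes that are actual payload."""
    return payload / (payload + tlp_overhead(addr64))


if __name__ == "__main__":
    for payload in (64, 128, 256):
        print(f"{payload:3d} B payload: {write_efficiency(payload):.1%} efficiency")
```

With 32-bit addressing this gives roughly 76 % for 64 B, 86 % for 128 B and 93 % for 256 B payloads, so on paper going from 128 B to 256 B should help by only about 7 %.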

The problem is that the transfer gets slower as soon as I increase the Maximum Payload Size value in the Device Capabilities Register to 256 bytes, even if the payload size actually used is not increased. Performance drops further when the payload size itself is increased to 256 bytes.

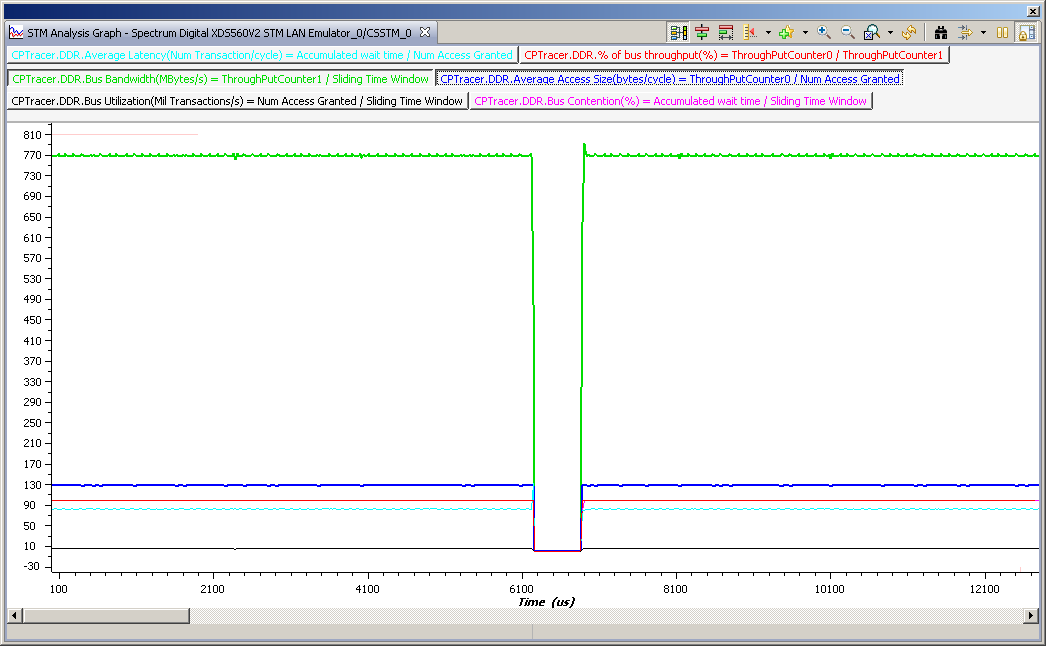

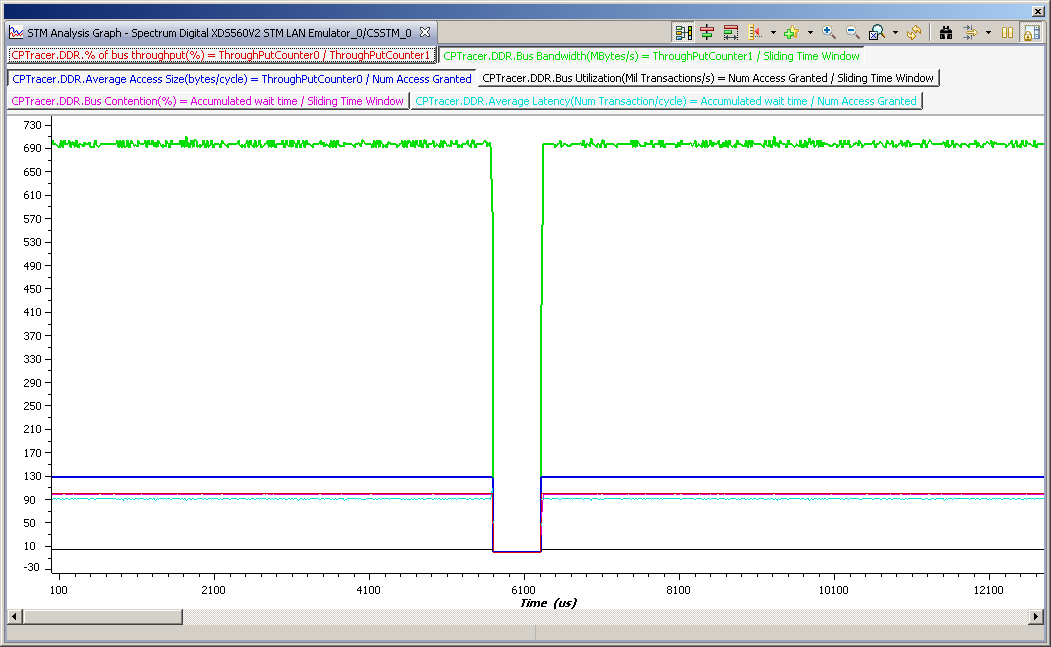
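For reference, the PCI Express specification encodes payload sizes in a 3-bit field (000b = 128 B, 001b = 256 B, up to 101b = 4096 B). The same encoding is used by Max_Payload_Size Supported in the Device Capabilities register (bits 2:0) and by the Max_Payload_Size setting in the Device Control register (bits 7:5), and the value programmed on a path should not exceed the smallest size supported by any device on it (root port, switch ports, FPGA endpoint). The helpers below are a hypothetical sketch of that bit manipulation only; they do not use any C6678 register addresses or TI APIs.

```python
# Hypothetical helpers for the PCIe Max_Payload_Size encoding.
# 000b = 128 B, 001b = 256 B, ..., 101b = 4096 B; 110b and 111b are reserved.

VALID_SIZES = (128, 256, 512, 1024, 2048, 4096)
DEVCTL_MPS_SHIFT = 5            # Device Control register, bits 7:5
DEVCTL_MPS_MASK = 0x7 << DEVCTL_MPS_SHIFT


def mps_bytes_to_field(size: int) -> int:
    """Convert a payload size in bytes to its 3-bit field encoding."""
    if size not in VALID_SIZES:
        raise ValueError(f"invalid payload size: {size}")
    return size.bit_length() - 8  # 128 -> 0, 256 -> 1, ..., 4096 -> 5


def mps_field_to_bytes(field: int) -> int:
    """Convert a 3-bit field encoding back to a payload size in bytes."""
    if not 0 <= field <= 5:
        raise ValueError(f"reserved or invalid encoding: {field}")
    return 128 << field


def set_devctl_mps(devctl: int, size: int) -> int:
    """Return a Device Control value with Max_Payload_Size set to `size` bytes."""
    field = mps_bytes_to_field(size)
    return (devctl & ~DEVCTL_MPS_MASK) | (field << DEVCTL_MPS_SHIFT)


def path_mps(*supported_sizes: int) -> int:
    """Largest payload size every device on the path can handle."""
    return min(supported_sizes)
```

For example, if the root port and the FPGA support 256 B but a switch port supports only 128 B, `path_mps(256, 128, 256)` yields 128, which is the value every device on that path would need to use.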

These are the results:

| Maximum payload size (Device Capabilities), bytes | Used payload size, bytes | Data rate |
|---|---|---|
| 128 | 64 | 717 MB/s |
| 128 | 128 | 780 MB/s |
| 256 | 64 | 717 MB/s |
| 256 | 128 | 695 MB/s |
| 256 | 256 | 530 MB/s |

Any ideas?

Thanks,

Ralf