Other Parts Discussed in Thread: TMS320C6678

Tool/software: TI C/C++ Compiler

Hi all,

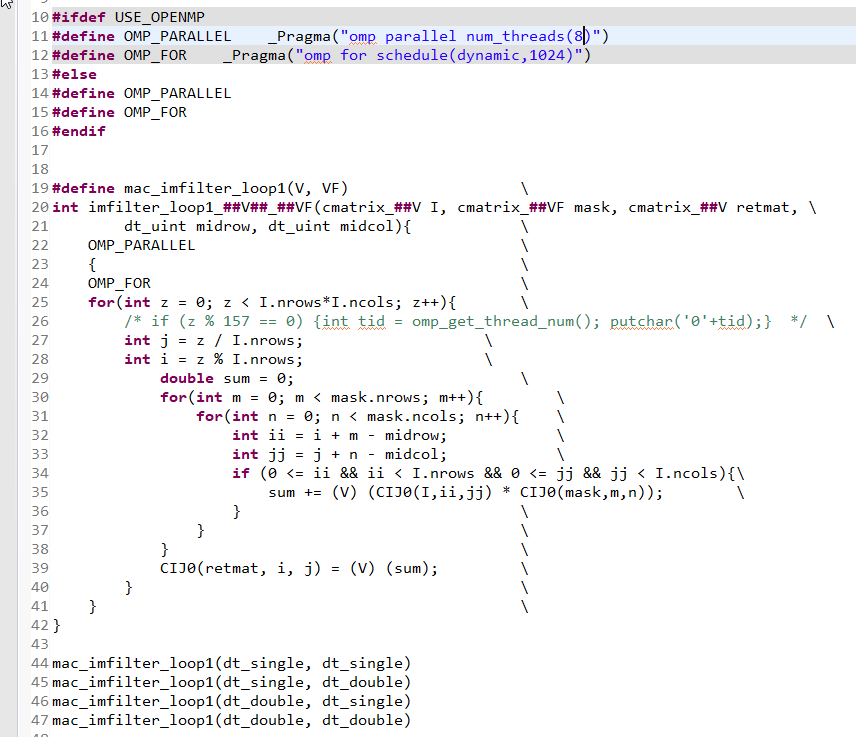

I am applying OpenMP to my code. The results are correct, but performance is much worse. When I profiled the cycle counts of different parts of the code, I noticed that Memory_alloc and Memory_free take far more cycles than when I run the app in serial mode (2.6e9 cycles in OpenMP mode vs. 0.25e9 cycles in serial mode).

This made me wonder whether I am doing heap management correctly.

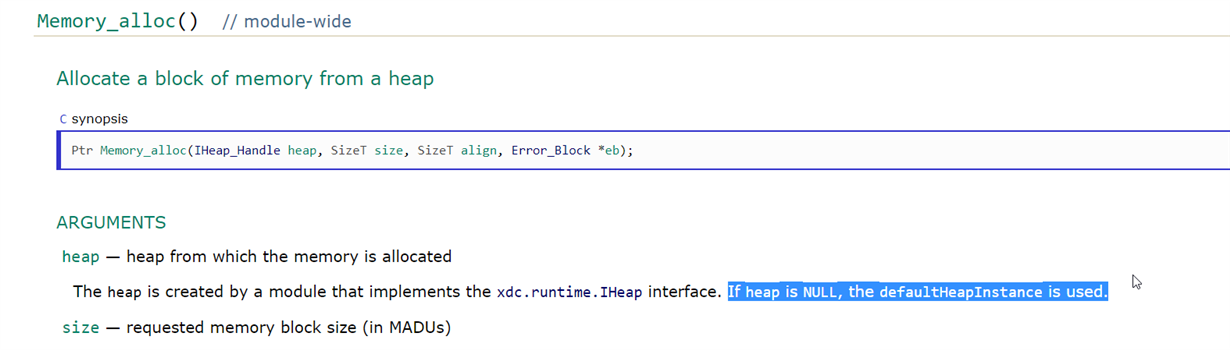

For one thing, I pass NULL as the first argument of Memory_alloc and Memory_free, which I believe selects the default system heap, as shown in the documentation snapshot.

However, I came across the OpenMP Heap Management API, which describes heap management in DDR3 and MSMC. I am wondering whether I would get better performance by using this API instead. Its documentation says its main purpose is to avoid cache inconsistency, but I have not seen any such issue, because the code runs fine (or maybe I have just been lucky so far).

Therefore, I have three questions:

1) Why is execution slow in OpenMP mode on the DSP, while the same code runs faster on my PC with OpenMP enabled?

2) Would using the OpenMP Heap Management API potentially speed up the code, or is it only meant to guarantee cache consistency?

3) If I use the OpenMP Heap Management API, would memory allocations and frees be thread-safe, so that I no longer need the "omp critical" directive?

I guess I need to better understand the main purpose of the OpenMP Heap Management API. Apologies if these questions sound stupid.

Please let me know if you need more details to answer my questions.

Regards,