Hi,

Over the last few months, our company has tested multiple configurations of the 3D People Counting demo (wall-mounted). We consistently see that the position output by the sensor's tracking module deviates from the true position differently depending on whether the subject is facing towards the sensor or away from it.

We believe the tracking error comes from the Kalman filter prediction. However, the measured velocity is in the same range whether the subject faces towards or away from the sensor, yet the output position behaves differently.

Questions:

1. What causes the major differences in tracker behavior between falls towards the sensor and falls away from it?

2. How can we tune the sensor configuration to get an accurate tracked position for falls in all directions/orientations?

References:

[1] Detection Layer Tuning Guide: https://dev.ti.com/tirex/explore/node?node=AEdNr6XV-uJkInkTP0HOAw__VLyFKFf__LATEST

[2] Tracker Layer Tuning Guide: https://dev.ti.com/tirex/explore/node?node=AHZY4H1u04B21l9zTRElvQ__VLyFKFf__LATEST

[3] Tracking radar targets with multiple reflective points: https://dev.ti.com/tirex/explore/node?node=AM.QUGqhwdBqRvUeI98JuA__VLyFKFf__LATEST

Test Setup:

Human subject: male, 1.75 m tall, average build.

The subject is standing and falling either facing towards or away from the sensor. Fall area is around 3-4m away from the sensor, on the same axis, as shown below:

Test Results:

1. 3D People Counting default configuration

The first test sequence was run with the default configuration that comes with the 3D People Counting demo (wall-mounted). We modified the following parameters to simplify the test setup; they should have no impact on the test results:

frameCfg --> 100 ms frame period instead of 55 ms

stateParam, boundaryBox, allocationParam --> should not have any impact

trackingCfg --> limited to 1 target

Complete configuration:

"dfeDataOutputMode": "1",

"channelCfg": "15 7 0",

"adcCfg": "2 1",

"adcbufCfg": "-1 0 1 1 1",

"lowPower": "0 0",

"profileCfg": "0 60.75 30.00 25.00 59.10 394758 0 54.71 1 96 2950.00 2 1 36",

"chirpCfg0": "0 0 0 0 0 0 0 1",

"chirpCfg1": "1 1 0 0 0 0 0 2",

"chirpCfg2": "2 2 0 0 0 0 0 4",

"frameCfg": "0 2 96 0 100 1 0",

"dynamicRACfarCfg": "-1 4 4 2 2 8 12 4 12 5.00 8.00 0.40 1 1",

"staticRACfarCfg": "-1 6 2 2 2 8 8 6 4 8.00 15.00 0.30 0 0",

"dynamicRangeAngleCfg": "-1 0.75 0.0010 1 0",

"dynamic2DAngleCfg": "-1 3.0 0.0300 1 0 1 0.30 0.85 8.00",

"staticRangeAngleCfg": "-1 0 8 8",

"antGeometry0": "-1 -1 0 0 -3 -3 -2 -2 -1 -1 0 0",

"antGeometry1": "-1 0 -1 0 -3 -2 -3 -2 -3 -2 -3 -2",

"antPhaseRot": "1 -1 1 -1 1 -1 1 -1 1 -1 1 -1",

"fovCfg": "-1 70.0 70.0",

"compRangeBiasAndRxChanPhase": "0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0",

"staticBoundaryBox": "-3.5 1.78 1.5 6.50 -2.40 1",

"boundaryBox": "-3.5 1.78 1.5 6.50 -2.40 1",

"gatingParam": "3.3 2 2 2 10",

"stateParam": "9 3 600 600 8 6000",

"allocationParam": "19 43 0.01 20 1.5 15",

"maxAcceleration": "0.1 0.1 0.1",

"trackingCfg": "1 2 800 1 46 96 100",

"presenceBoundaryBox": "-4 4 0.5 6 0 3",

"sensorStop": "0",

"flushCfg": "0",

"sensorStart": "0",

"sensorPosition": "0 0 0"

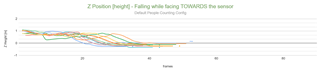

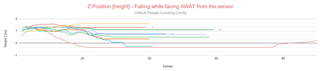

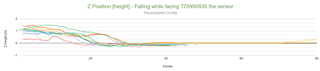

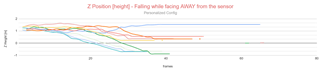

The graphs show a clear distinction between falls that occur towards the sensor and falls that occur away from it. When facing towards the sensor, all results (12/12) have the same outcome: the centroid's height goes from 1.0 m to under 0 m. The "landing" height (after the fall) is always in the range [-0.2 m ; 0 m], which is very consistent. When facing AWAY from the sensor, most of the results show that the fall was not tracked properly: the final "landing" Z positions are spread over the range [-0.35 m ; 1.6 m].

When we rotate the mattress by 90 degrees so that it is perpendicular to the sensor, the results are the same as when facing TOWARDS the radar. This leads us to believe the issue is related to the range detection and possibly the Doppler effect. The Kalman filter formula in [3] shows that the centroid's velocity is taken into account:

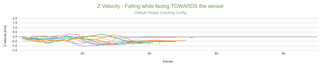

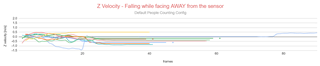

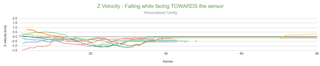

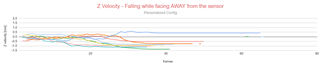
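To make the Doppler hypothesis concrete: the radar only measures the radial component of each point's velocity, that is, the projection of the velocity onto the line of sight from the sensor to the point. Below is a minimal sketch of that projection. It assumes the sensor sits at the origin (matching sensorPosition "0 0 0"), and the position and velocity values are hypothetical illustration numbers, not data from our tests.

```python
import math


def radial_velocity(position, velocity):
    """Project a 3D velocity onto the sensor-to-target line of sight.

    The sensor is assumed to be at the origin. A positive result means the
    point is moving away from the sensor, a negative one means it approaches.
    """
    x, y, z = position
    vx, vy, vz = velocity
    rng = math.sqrt(x * x + y * y + z * z)
    if rng == 0.0:
        raise ValueError("target cannot be located at the sensor origin")
    return (x * vx + y * vy + z * vz) / rng


# Hypothetical numbers: a body point 3.5 m in front of the sensor and 1 m
# below it, dropping at 1.5 m/s while tipping 1 m/s towards or away.
point = (0.0, 3.5, -1.0)
towards = radial_velocity(point, (0.0, -1.0, -1.5))
away = radial_velocity(point, (0.0, 1.0, -1.5))
print(f"falling towards: {towards:+.2f} m/s, falling away: {away:+.2f} m/s")
```

With identical vertical speed, the two cases give radial velocities of opposite sign and different magnitude, because the horizontal and vertical components either partly cancel or add up along the line of sight.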

We first suspected that falling in a different direction would change the measured radial velocities, which would change the computed Z velocity of the centroid and, in turn, the tracking output. However, the velocity along the Z axis seems to be the same in both cases, as shown below:

2. Personalized configuration

After reading most of the documentation (see References), we tried to tune the following parameters:

a. profileCfg

We tried to come up with a profileCfg that would (theoretically) offer a better range resolution and a better velocity resolution. As shown in the previous graph, the maximum velocity during a fall does not exceed 2 m/s.

| Configuration | Start Freq [GHz] | Slope [MHz/us] | digOutSRate [ksps] | Num ADC Samples | Max Unambiguous Range [m] | Max SNR Range [m] | Range Res [m] | Sweep Bandwidth [MHz] | Total Bandwidth [MHz] | Max Unambiguous Velocity [m/s] | Velocity Resolution [m/s] |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Personalized | 60 | 36.94 | 2052.27 | 128 | 7.50 | 16.47 | 0.0651 | 2303.95 | 2684.43 | 5.14 | 0.081 |
| Default People Counting | 60.75 | 54.71 | 2950 | 96 | 7.28 | 16.38 | 0.0842 | 1780.39 | 3148.14 | 4.51 | 0.093 |
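For reference, the range-related columns of the table can be reproduced from the profileCfg values with the standard FMCW relations. This is a minimal sketch: the function name is made up for illustration, the speed of light is rounded to 3e8 m/s, and the 0.9 usable-range factor is an assumption chosen because it matches the Max Unambiguous Range values in the table. The velocity columns are not covered here.

```python
C = 3.0e8  # speed of light [m/s], rounded


def range_metrics(slope_mhz_per_us, sample_rate_ksps, num_adc_samples,
                  usable_fraction=0.9):
    """Return (sweep bandwidth [MHz], range resolution [m], max range [m]).

    usable_fraction is an assumed derating of the theoretical maximum range.
    """
    # Duration of the ADC sampling window in microseconds.
    adc_window_us = num_adc_samples / sample_rate_ksps * 1e3
    # Bandwidth actually swept while the ADC is sampling.
    sweep_bw_mhz = slope_mhz_per_us * adc_window_us
    # Classic FMCW range resolution: c / (2 * B).
    range_res_m = C / (2.0 * sweep_bw_mhz * 1e6)
    # One range bin per ADC sample, derated by the usable fraction.
    max_range_m = usable_fraction * range_res_m * num_adc_samples
    return sweep_bw_mhz, range_res_m, max_range_m


personalized = range_metrics(36.94, 2052.27, 128)
default = range_metrics(54.71, 2950.00, 96)

for name, (bw, res, rmax) in (("Personalized", personalized),
                              ("Default", default)):
    print(f"{name}: sweep BW {bw:.2f} MHz, "
          f"range res {res:.4f} m, max range {rmax:.2f} m")
```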

b. dynamicRACfarCfg

Based on [2], the dynamic CFAR range threshold could affect the centroid's velocity computation if it discards points that carry useful radial velocity measurements. We lowered the range and elevation thresholds in order to include more points in the tracked target.

"dynamicRACfarCfg" : "-1 4 4 2 2 8 12 4 8 4.00 6.00 0.40 1 1",

c. dynamic2DAngleCfg

Better resolution along the elevation axis.

"dynamic2DAngleCfg" : "-1 2 0.0300 1 0 1 0.30 0.85 8.00",

d. maxAcceleration

As mentioned in [2], the higher the max acceleration is set, the more the tracker relies on the measurements rather than on its own prediction.

"maxAcceleration" : "0.5 0.5 0.5",

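The effect described in [2] can be illustrated with a generic scalar Kalman filter. This is a textbook sketch, not the tracker's actual implementation: it assumes that a larger max acceleration translates into a larger process noise variance (called process_var here), and all numbers are arbitrary.

```python
def steady_state_gain(process_var, meas_var, iterations=200):
    """Iterate a scalar Kalman filter and return the converged gain.

    A gain close to 1 means the estimate follows the measurements,
    a gain close to 0 means it follows the prediction.
    """
    p = 1.0  # initial estimate variance (arbitrary starting point)
    gain = 0.0
    for _ in range(iterations):
        p_pred = p + process_var             # predict: uncertainty grows
        gain = p_pred / (p_pred + meas_var)  # update: weight of measurement
        p = (1.0 - gain) * p_pred            # posterior variance
    return gain


# Same measurement noise, two different process noise levels.
low_q = steady_state_gain(process_var=0.01, meas_var=1.0)
high_q = steady_state_gain(process_var=0.25, meas_var=1.0)
print(f"gain with small process noise: {low_q:.3f}")
print(f"gain with large process noise: {high_q:.3f}")
```

The larger process noise yields the larger gain, which is the behavior we were trying to exploit by raising maxAcceleration.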
e. trackingCfg

The tracker's maximum velocity has been increased and the velocity resolution has been set to the minimum.

"trackingCfg" : "1 2 800 1 52 1 100",

Personalized Configuration:

"dfeDataOutputMode" : "1",

"channelCfg" : "15 7 0",

"adcCfg" : "2 1",

"adcbufCfg" : "-1 0 1 1 1",

"lowPower" : "0 0",

"profileCfg" : "0 60.00 30.00 10.3 74.17 592137 0 36.94 1 128 2052.27 2 1 36",

"chirpCfg0" : "0 0 0 0 0 0 0 1",

"chirpCfg1" : "1 1 0 0 0 0 0 2",

"chirpCfg2" : "2 2 0 0 0 0 0 4",

"frameCfg" : "0 2 96 0 100 1 0",

"dynamicRACfarCfg" : "-1 4 4 2 2 8 12 4 8 4.00 6.00 0.40 1 1",

"staticRACfarCfg" : "-1 6 2 2 2 8 8 6 4 8.00 15.00 0.30 0 0",

"dynamicRangeAngleCfg" : "-1 0.75 0.0010 1 0",

"dynamic2DAngleCfg" : "-1 2 0.0300 1 0 1 0.30 0.85 8.00",

"staticRangeAngleCfg" : "-1 0 8 8",

"antGeometry0" : "-1 -1 0 0 -3 -3 -2 -2 -1 -1 0 0",

"antGeometry1" : "-1 0 -1 0 -3 -2 -3 -2 -3 -2 -3 -2",

"antPhaseRot" : "1 -1 1 -1 1 -1 1 -1 1 -1 1 -1",

"fovCfg" : "-1 60.0 60.0",

"compRangeBiasAndRxChanPhase" : "0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0",

"staticBoundaryBox" : "-3.5 1.78 1.5 6.50 -2.40 1",

"boundaryBox" : "-3.5 1.78 1.5 6.50 -2.40 1",

"gatingParam" : "3.3 2 2 2 10",

"stateParam" : "9 3 600 600 8 6000",

"allocationParam" : "19 43 0.01 20 1.5 15",

"maxAcceleration" : "0.5 0.5 0.5",

"trackingCfg" : "1 2 800 3 52 1 100",

"presenceBoundaryBox" : "-4 4 0.5 6 0 3",

"sensorStop" : "0",

"flushCfg" : "0",

"sensorStart" : "0",

"sensorPosition" : "0 0 0"

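Because the two listings are long, a small helper like the one below can list exactly which entries differ between them. It is only a sketch: diff_configs is a hypothetical helper name, and the dictionaries hold just the differing entries plus frameCfg as an unchanged control, copied from the two listings above.

```python
def diff_configs(base, other):
    """Return {key: (base_value, other_value)} for every key that differs."""
    keys = sorted(set(base) | set(other))
    return {k: (base.get(k), other.get(k))
            for k in keys if base.get(k) != other.get(k)}


default_cfg = {
    "profileCfg": "0 60.75 30.00 25.00 59.10 394758 0 54.71 1 96 2950.00 2 1 36",
    "frameCfg": "0 2 96 0 100 1 0",
    "dynamicRACfarCfg": "-1 4 4 2 2 8 12 4 12 5.00 8.00 0.40 1 1",
    "dynamic2DAngleCfg": "-1 3.0 0.0300 1 0 1 0.30 0.85 8.00",
    "fovCfg": "-1 70.0 70.0",
    "maxAcceleration": "0.1 0.1 0.1",
    "trackingCfg": "1 2 800 1 46 96 100",
}

personalized_cfg = {
    "profileCfg": "0 60.00 30.00 10.3 74.17 592137 0 36.94 1 128 2052.27 2 1 36",
    "frameCfg": "0 2 96 0 100 1 0",
    "dynamicRACfarCfg": "-1 4 4 2 2 8 12 4 8 4.00 6.00 0.40 1 1",
    "dynamic2DAngleCfg": "-1 2 0.0300 1 0 1 0.30 0.85 8.00",
    "fovCfg": "-1 60.0 60.0",
    "maxAcceleration": "0.5 0.5 0.5",
    "trackingCfg": "1 2 800 3 52 1 100",
}

changes = diff_configs(default_cfg, personalized_cfg)
for key, (old, new) in changes.items():
    print(f"{key}:\n  default:      {old}\n  personalized: {new}")
```

Besides the parameters discussed in sections a to e, the comparison also lists fovCfg, which changes from 70.0 to 60.0 degrees.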
The results are the same:

---

I am aware this has been a long post, but our company would really appreciate any help on how to solve this issue and, more importantly, on what exactly is causing the discrepancies.

Thank you and regards,

Justin