Hello TI-Team,

I am trying to understand the relation between the chirp parameters and the high-level parameters such as v_res and v_max, using your Programming Chirp Parameters guide. Since I need to measure the Doppler velocity as accurately as possible, I noticed that the velocity resolution is rounded up in the demo visualizer; this can be seen in the comments of the auto-generated config files. In the radar output, however, I see a smaller v_res that starts oscillating once the velocity exceeds a certain value.

For example:

Calculating v_res with the following formula:

v_res = lambda / (2 * N * T_c)

and the parameters lambda = c0/f, f = 60e9 Hz, N = 384 and T_c = 4.3e-05 s, where T_c = T_idle + T_ramp_end,

I get approximately v_res = 0.1513 m/s and v_max = 9.683 m/s,

while the config file auto-generated by the demo visualizer states v_res = 0.16 m/s and v_max = 10.24 m/s.

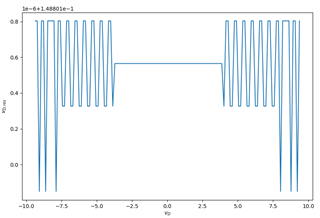

Radar output (with the out-of-box demo):

In the radar data I observe a variable velocity step size (v_step) that differs from both the v_res in the config file and the calculated value. I measure steps like these: 0.1488018035888672, 0.14880084991455078, 0.14880132675170898, 0.14880156517028809 [m/s]

The maximum velocity I measure is v_max = 9.37449836730957 m/s.

I found that v_res starts oscillating once the velocity exceeds about 3.8 m/s.

If I use f = 62 GHz in the above calculation, I get v_res = 0.1464 m/s and v_max = 9.3709 m/s, which is closer to the measured values.
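For reference, here is a minimal Python sketch that reproduces both calculations. It assumes v_res = lambda / (2 * N * T_c), which is the relation that matches my numbers. The v_max relation is also my assumption: v_max = v_res * N_DOPPLER / 2 with a hypothetical N_DOPPLER = 128 Doppler bins, chosen only because it reproduces the values quoted above (9.683 / 0.1513 = 64). The function and constant names are illustrative and not taken from the TI tools.

```python
C0 = 299_792_458.0   # speed of light in m/s
N_CHIRPS = 384       # N from the calculation above
T_C = 4.3e-05        # T_c = T_idle + T_ramp_end, in seconds
N_DOPPLER = 128      # ASSUMPTION: number of Doppler bins, not stated in the config


def velocity_resolution(freq_hz, n_chirps=N_CHIRPS, t_c=T_C):
    """v_res = lambda / (2 * N * T_c), with lambda = c0 / f."""
    wavelength = C0 / freq_hz
    return wavelength / (2.0 * n_chirps * t_c)


def max_velocity(freq_hz, n_doppler=N_DOPPLER):
    """ASSUMED relation: v_max = v_res * n_doppler / 2."""
    return velocity_resolution(freq_hz) * n_doppler / 2.0


if __name__ == "__main__":
    for f in (60e9, 62e9):
        print(f"f = {f / 1e9:.0f} GHz: "
              f"v_res = {velocity_resolution(f):.4f} m/s, "
              f"v_max = {max_velocity(f):.4f} m/s")
```

With these assumptions the 62 GHz case lands near the measured step size of about 0.1488 m/s but does not match it exactly, so the frequency the firmware actually uses for lambda may be neither 60 GHz nor 62 GHz.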

But why is it oscillating? And how exactly is v_res calculated?

I know that lambda varies over the frequency sweep. Could that be the reason? If so, how did you choose the frequency (and hence lambda) used in the calculation?

Or is this oscillation a machine precision error?
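To probe the machine-precision hypothesis, here is a rough sketch. It assumes (I have not confirmed this) that the demo reports each velocity as a 32-bit float equal to an integer bin index times a constant step. V_STEP = 0.1488 is a hypothetical value close to my measurements, and the helper names are my own. The sketch rounds k * V_STEP to float32 and compares how much the difference between neighbouring values varies at low and at high velocities.

```python
import struct

V_STEP = 0.1488  # ASSUMPTION: constant true step in m/s, close to the measured values


def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def step_spread(v_step, k_first, k_last):
    """Max minus min of the differences between consecutive float32 velocities."""
    v = [to_float32(k * v_step) for k in range(k_first, k_last + 1)]
    steps = [b - a for a, b in zip(v, v[1:])]
    return max(steps) - min(steps)


# Bins 1..6 give velocities below 1 m/s; bins 27..53 give 4 m/s to 8 m/s.
spread_low = step_spread(V_STEP, 1, 6)
spread_high = step_spread(V_STEP, 27, 53)

if __name__ == "__main__":
    print(f"step spread below 1 m/s:   {spread_low:.3e} m/s")
    print(f"step spread in 4..8 m/s:   {spread_high:.3e} m/s")
    print(f"float32 spacing in 4..8:   {2.0 ** -21:.3e} m/s")
```

Under these assumptions the apparent step varies by one float32 spacing (2^-21, about 4.8e-7 m/s) between 4 m/s and 8 m/s, and by much less below 1 m/s. The step values I measured differ from each other by amounts of a similar size (between about 2.4e-7 and 9.5e-7 m/s), so float32 rounding looks like a plausible explanation, but I would appreciate a confirmation.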

Best regards,

Raschid