I have a question about the measurement behavior of the OPT3101.

When I start a measurement, I write the necessary settings to the registers and then enable TG_EN and EN_TX_CLKB.

I understand that some time passes between the start of a measurement and the first data ready, but this delay differs between a data rate of 10 Hz (NUM_SUB_FRAMES=399) and 100 Hz (NUM_SUB_FRAMES=39). At 100 Hz, it takes about 0.9 seconds longer than at 10 Hz from the start trigger until data is ready.
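As a quick consistency check on the two settings (my own arithmetic, not taken from the datasheet): if a frame is assumed to consist of NUM_SUB_FRAMES + 1 sub-frames of equal length, both configurations imply the same sub-frame duration, so they differ only in how many sub-frames make up one frame. The helper name `implied_subframe_us` is hypothetical.

```python
# Hypothetical helper. Assumption: one frame = (NUM_SUB_FRAMES + 1) equal-length sub-frames.
def implied_subframe_us(data_rate_hz: float, num_sub_frames: int) -> float:
    """Return the sub-frame length in microseconds implied by a data rate and NUM_SUB_FRAMES."""
    frame_period_us = 1e6 / data_rate_hz  # frame period in microseconds
    return frame_period_us / (num_sub_frames + 1)


for rate_hz, nsf in [(10, 399), (100, 39)]:
    print(f"{rate_hz} Hz, NUM_SUB_FRAMES={nsf}: {implied_subframe_us(rate_hz, nsf):.1f} us per sub-frame")
```

Under that assumption both lines print 250.0 us, so the sub-frame timing itself appears to be the same in both cases.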

For example, if I measure for 10 seconds at 100 Hz, I lose 0.9 seconds of data and receive only 910 samples instead of the expected 1,000. If I measure for 10 seconds at 10 Hz, I receive 100 samples, which is exactly the expected number. The exact timing of the measurement start may also need to be discussed, but before we get to that, I would appreciate any insight or explanation you can give me about this behavior.
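The sample-count arithmetic in the example above can be sketched as follows. It treats the extra 0.9 s start-up delay at 100 Hz as measured relative to the 10 Hz case, which is an assumption about how the delay is counted; `sample_counts` is a hypothetical helper, not part of any OPT3101 software.

```python
def sample_counts(duration_s: float, rate_hz: float, extra_delay_s: float) -> tuple[int, int, int]:
    """Return (expected, lost, received) sample counts for one measurement window.

    extra_delay_s is the additional delay before the first data ready,
    relative to the 10 Hz case (assumed 0.9 s at 100 Hz, 0 s at 10 Hz).
    """
    expected = round(duration_s * rate_hz)  # samples in an ideal window
    lost = round(extra_delay_s * rate_hz)   # frames that elapse during the extra delay
    return expected, lost, expected - lost


print(sample_counts(10, 100, 0.9))  # (1000, 90, 910)
print(sample_counts(10, 10, 0.0))   # (100, 0, 100)
```

Viewed this way, the extra 0.9 s at 100 Hz corresponds to about 90 frame periods, while the same absolute delay at 10 Hz would correspond to only 9.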

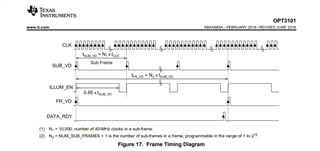

Data-ready trigger timing (OPT3101 datasheet, p. 15)