Hi,

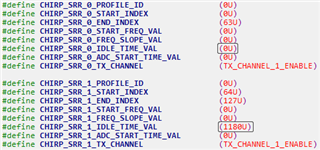

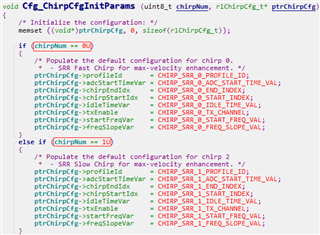

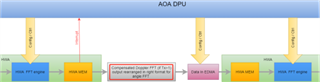

I am using the MMW demo in SDK 5.3, and I want to use the velocity disambiguation algorithm from the SRR demo, which requires configuring two different chirps.

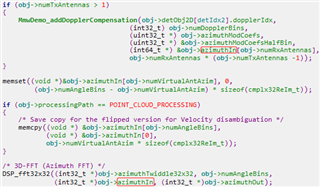

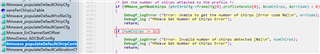

I checked the code in the SDK (common_full.c), and it limits both the number of profiles and the number of chirps to 1, so I can't configure a second chirp for velocity disambiguation.

Does the current SDK provide any way to configure two chirps so that I can meet this requirement?

Thanks!