Hello,

Is there a standard formula for calculating the chirp time? I have read the document "Programming Chirp Parameters in TI Radar Devices," but I am still unsure how to calculate it. I used the parameters below to set up the AWR1642BOOST EVM for data capture. Could you please explain how to calculate the chirp time from these parameters?

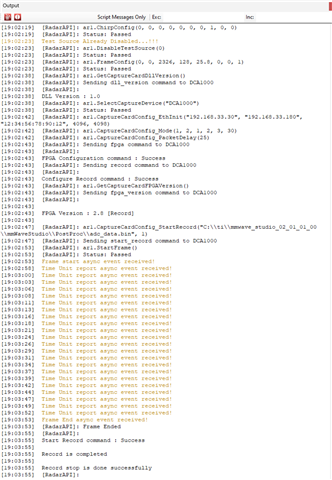

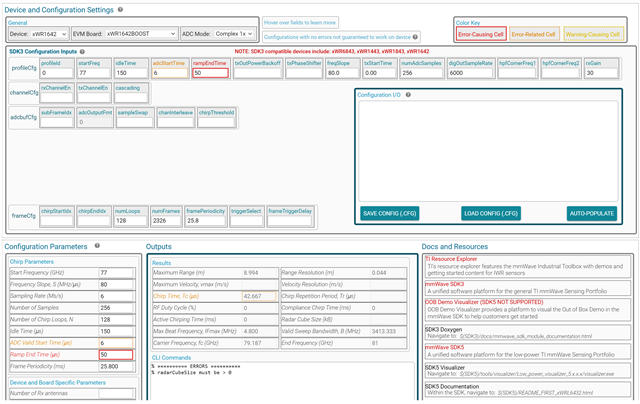

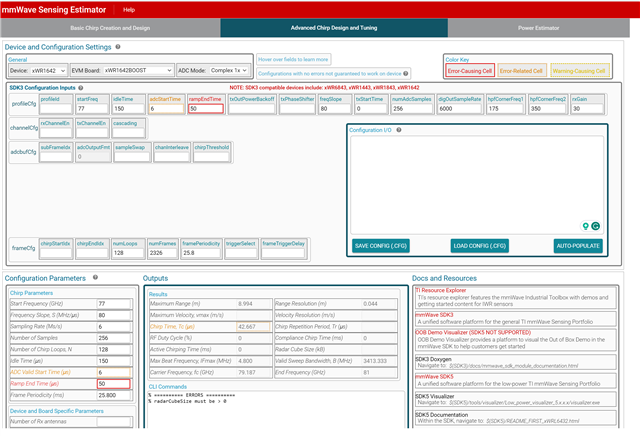

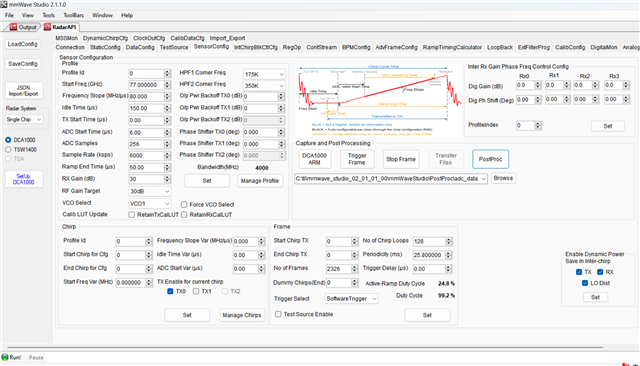

Number of frames (n_frame) = 2326

Chirps per frame (chirp_per_frame) = 128

Frame periodicity (frame_periodicity) = 25.8 milliseconds

Wait before copy (wait_before_copy) = 70000

Additional parameters:

Starting frequency (start_freq) = 77 GHz

Idle time (idle_time) = 150 microseconds

ADC start time (adc_start_time) = 6 microseconds

Ramp end time (ramp_end_time) = 50 microseconds

Frequency slope (freq_slope) = 80

ADC samples (adc_samples) = 256

ADC sample rate (adc_sample_rate) = 6000

RX gain (RX_gain) = 30
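
For reference, here is a minimal sketch of how these values are usually combined. It assumes that "chirp time" means the chirp cycle time (idle time plus ramp end time), that chirp_per_frame is the total number of chirps sent in one frame, and that adc_sample_rate is in ksps. The unit is not stated above, so it is an assumption, and the variable names are only illustrative.

```python
# Values copied from the parameter list above.
idle_time_us = 150.0
adc_start_time_us = 6.0
ramp_end_time_us = 50.0
adc_samples = 256
adc_sample_rate_ksps = 6000.0  # ASSUMPTION: unit is ksps (not stated in the post)
chirp_per_frame = 128          # ASSUMPTION: total chirps in one frame
frame_periodicity_ms = 25.8

# Chirp cycle time: idle time followed by the ramp.
chirp_cycle_time_us = idle_time_us + ramp_end_time_us

# Time the ADC needs to collect all samples of one chirp.
adc_sampling_time_us = adc_samples / adc_sample_rate_ksps * 1e3

# Time spent chirping in one frame, and what is left of the frame period.
active_frame_time_ms = chirp_per_frame * chirp_cycle_time_us / 1e3
inter_frame_idle_ms = frame_periodicity_ms - active_frame_time_ms

# Sanity check: the ADC window should end before the ramp does.
adc_window_fits = adc_start_time_us + adc_sampling_time_us <= ramp_end_time_us

print(f"chirp cycle time : {chirp_cycle_time_us:.1f} us")
print(f"ADC sampling time: {adc_sampling_time_us:.2f} us")
print(f"active frame time: {active_frame_time_ms:.2f} ms")
print(f"inter-frame idle : {inter_frame_idle_ms:.2f} ms")
print(f"ADC window fits inside ramp: {adc_window_fits}")
```

Under these assumptions the chirp cycle time is 150 + 50 = 200 microseconds, so 128 chirps occupy 25.6 ms of the 25.8 ms frame period.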

Thank you,

Deepu