Hi,

I am using the IWR6843ISK-ODS with ROS 2 on Ubuntu 22.04 LTS, running the Small Obstacle Detection binary, for extrinsic radar-camera calibration.

I place a corner reflector at different positions and read the coordinates of each point in RViz2 using the "Publish Point" tool.

ROS uses the coordinate convention x forward, y left, z up, but the values I obtained during calibration do not seem to follow it.

In my setup, the sensors point toward the ground and the corner reflector is placed on the ground.
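Because the sensors point toward the ground, the radar frame is rotated relative to a ground-fixed frame, so a displacement on the ground does not necessarily appear on the radar's y axis. A minimal Python sketch, assuming the points are already in the ROS radar frame (x along the boresight) and that the mounting is a pure pitch about y with the REP 103 sign convention (positive pitch tilts +x downward). `rot_y`, `radar_to_world`, the 1 m mounting height and the 90° pitch are hypothetical, chosen only for illustration:

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def rot_y(pitch: float):
    """3x3 rotation about the y axis; positive pitch tilts +x downward (toward -z)."""
    c, s = math.cos(pitch), math.sin(pitch)
    return ((c, 0.0, s),
            (0.0, 1.0, 0.0),
            (-s, 0.0, c))

def radar_to_world(p: Vec3, pitch: float, mount: Vec3) -> Vec3:
    """Rotate a radar-frame point by the (assumed) mounting pitch, then add the mount position."""
    R = rot_y(pitch)
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + mount[i] for i in range(3))

# Hypothetical mount: 1 m above the ground origin, pitched straight down (90 degrees).
world = radar_to_world((1.0, 0.0, 0.5), math.pi / 2, (0.0, 0.0, 1.0))
print([round(v, 3) for v in world])  # → [0.5, 0.0, 0.0]
```

Under these assumptions, a point 1 m along the boresight with radar z = 0.5 m lands 0.5 m forward on the ground, i.e. a forward displacement on the ground shows up in the radar's z value rather than in x or y.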

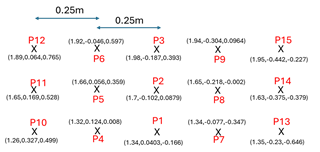

The positions of the corner reflector (CR), along with the coordinates obtained from "Publish Point", are shown below:

Adjacent points are separated by 0.25 m in the lateral direction. The x, y, z values obtained from "Publish Point" are also marked in the image.

The difference between the y values of P12 and P15 should be close to 1 m, but it is only 0.5 m, whereas the difference between their z values is close to 1 m (0.992 m). The same pattern holds for the pairs P11 & P14, P10 & P13, and the other corresponding pairs.
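To compare the point pairs consistently, a small helper like the following could compute the per-axis differences, the axis carrying the largest displacement, and the straight-line distance. The function names are hypothetical and the points in the test are synthetic, not my measured values:

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]

def axis_deltas(a: Point, b: Point) -> Tuple[float, float, float]:
    """Absolute difference between two points on each axis (x, y, z), in metres."""
    return tuple(abs(bi - ai) for ai, bi in zip(a, b))

def dominant_axis(a: Point, b: Point) -> str:
    """Name of the axis ('x', 'y' or 'z') with the largest displacement between a and b."""
    d = axis_deltas(a, b)
    return "xyz"[max(range(3), key=lambda i: d[i])]

def distance(a: Point, b: Point) -> float:
    """Straight-line (Euclidean) distance between a and b."""
    return math.dist(a, b)
```

Comparing `distance` against the expected spacing can help separate a scale problem (distance wrong) from an axis or rotation problem (distance plausible, but spread over unexpected axes).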

My .cfg file uses the correct compRangeBiasAndRxChanPhase values for the ODS antenna. I repeated the whole process a second time and got the same results.

I have also not modified DataHandlerClass.cpp in the READ_OBJ_STRUCT section.
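For reference, my understanding (an assumption on my part, not re-checked against the current driver source) is that the READ_OBJ_STRUCT case maps each point from the mmWave sensor frame (x lateral to the right, y along the boresight, z up) into the ROS frame roughly as in this Python sketch. `Point` and `sensor_to_ros` are hypothetical names:

```python
from typing import NamedTuple

class Point(NamedTuple):
    x: float
    y: float
    z: float

def sensor_to_ros(p: Point) -> Point:
    """Map a point from the assumed sensor frame to the ROS frame.

    Assumed sensor frame: x lateral (right), y along boresight, z up.
    ROS frame: x forward, y left, z up.
    """
    return Point(x=p.y,    # ROS forward = sensor boresight
                 y=-p.x,   # ROS left = negative sensor lateral (right)
                 z=p.z)    # ROS up = sensor up, unchanged

print(sensor_to_ros(Point(0.25, 1.0, 0.0)))
```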

Based on the data above, could you tell me whether the y and z values need to be swapped? Has anyone observed a similar discrepancy in the coordinate frame?

Best regards,

Pushkar