Hello,

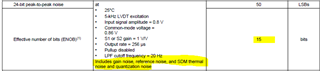

When trying to calculate signal error and resolution for the PGA970, one eventually ends up at section 6.14 of the datasheet, Digital Demodulators 1 and 2. There I notice the ENOB value of 15 bits.

This is followed by the usual suspects that can be converted to LSB values (FSO, ppm/°C, PSRR, etc.).
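As a rough illustration of converting such specs into LSBs, here is a minimal Python sketch. The helper names are hypothetical, the drift helper assumes the spec is in ppm of full scale (not ppm of reading), and the PSRR helper assumes PSRR in dB is 20·log10(supply change / input-referred change). The example numbers are placeholders, not PGA970 datasheet values.

```python
# Sketch: express common datasheet error specs in LSBs.
# All numbers in the usage section are HYPOTHETICAL placeholders,
# not PGA970 datasheet values.


def lsb_size(full_scale_v: float, n_bits: float) -> float:
    """Voltage of one LSB for an n-bit span of full_scale_v."""
    return full_scale_v / 2 ** n_bits


def fso_error_lsb(error_pct_fso: float, n_bits: float) -> float:
    """Error given as % of full-scale output, converted to LSBs."""
    return error_pct_fso / 100 * 2 ** n_bits


def drift_lsb(ppm_per_c: float, delta_t_c: float, n_bits: float) -> float:
    """Drift in ppm of full scale per degC over delta_t_c degC, in LSBs."""
    return ppm_per_c * 1e-6 * delta_t_c * 2 ** n_bits


def psrr_error_lsb(psrr_db: float, supply_delta_v: float,
                   full_scale_v: float, n_bits: float) -> float:
    """Input-referred error caused by a supply change, in LSBs.

    Assumes PSRR(dB) = 20*log10(supply change / input-referred change).
    """
    input_error_v = supply_delta_v / 10 ** (psrr_db / 20)
    return input_error_v / lsb_size(full_scale_v, n_bits)


if __name__ == "__main__":
    N = 16  # same bit count as the 2**(15+1) used in question 1
    print(f"0.1 %FSO          -> {fso_error_lsb(0.1, N):.1f} LSB")
    print(f"10 ppm/C over 50 C -> {drift_lsb(10, 50, N):.1f} LSB")
    print(f"60 dB PSRR, 0.1 V  -> {psrr_error_lsb(60, 0.1, 2.5, N):.1f} LSB")
```

Summing these terms (worst case) or combining them as a root-sum-square gives a total that can be compared against the resolution requirement; which combination is appropriate depends on whether the terms are correlated.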

1. My system needs a minimum resolution of 820 uV if I run the LVDT at its specified values. The demodulator maximum voltage is 2.5 V, so using the ENOB I get `2.5/2**(15+1)` ≈ 38.1 uV resolution, before adding in the other non-idealities mentioned above (FSO, ppm/°C, PSRR). Does this calculation correctly reflect the actual resolution of this device?

2. Are there sources of signal error in this chip beyond those stated in the electrical characteristics for Digital Demodulators 1 and 2, i.e., beyond what the given ENOB value and everything it encompasses already account for?
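The arithmetic in question 1 can be checked with a short Python sketch that uses only the figures from the post. The `+1` bit is kept as one case, and a plain `2**ENOB` case is shown next to it because whether the extra bit applies is the open question; expressing the 820 uV requirement as a number of steps is just one way of framing how much room is left for the other error terms.

```python
# Sketch of the resolution check in question 1, using figures from the post.
# Both the 2**(ENOB+1) form from the post and a plain 2**ENOB form are shown,
# since whether the +1 applies is what the question asks.

V_FS = 2.5            # demodulator max voltage, V
ENOB = 15             # datasheet section 6.14
REQUIRED_V = 820e-6   # minimum resolution the system needs, V


def resolution_v(full_scale_v: float, bits: float) -> float:
    """Voltage step corresponding to one code at the given bit count."""
    return full_scale_v / 2 ** bits


with_extra_bit = resolution_v(V_FS, ENOB + 1)  # ~38.1 uV, as in the post
enob_only = resolution_v(V_FS, ENOB)           # ~76.3 uV

for label, res in [("2**(ENOB+1)", with_extra_bit), ("2**ENOB", enob_only)]:
    # Number of steps that fit inside the 820 uV requirement, i.e. roughly
    # how much room remains for FSO, ppm/C, PSRR and front-end errors.
    steps = REQUIRED_V / res
    print(f"{label}: {res * 1e6:.1f} uV per step, 820 uV = {steps:.1f} steps")
```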

I will have to add external amplification, as the EVAL board does, to reach the 2.3 Vrms, 5 kHz primary excitation at which the LVDT is specified, so that I get its 0.044 V/mm/Vex sensitivity and the 20 um resolution I want. From what I gathered, the resulting signal will then still need to be attenuated before the PGA970 can accept it. This will certainly introduce additional error that I will have to account for somehow. Any insight into this setup would be appreciated, but I am mainly concerned with question 1.
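The signal chain described above can be sketched numerically: excitation times sensitivity gives volts per millimetre, which sets the signal change for a 20 um step, and any attenuator ahead of the PGA970 scales that step down. In this Python sketch, `ATTENUATION` is a hypothetical placeholder (the post does not give a divider ratio), amplitudes are treated as Vrms throughout, and how the demodulator output scales relative to Vrms is not modeled.

```python
# Sketch of the LVDT signal chain described above.
# From the post: 2.3 Vrms excitation, 0.044 V/mm/Vex sensitivity, 20 um target.
# HYPOTHETICAL: ATTENUATION is a placeholder divider ratio ahead of the PGA970.
# Amplitudes are Vrms; demodulator output scaling vs. Vrms is not modeled.

EXCITATION_VRMS = 2.3
SENSITIVITY_V_PER_MM_PER_VEX = 0.044
TARGET_STEP_MM = 0.020
LSB_V = 2.5 / 2 ** 16     # resolution figure from question 1
ATTENUATION = 0.5         # hypothetical divider ratio, placeholder only


def step_signal_v(excitation_v: float, sensitivity: float,
                  step_mm: float, attenuation: float = 1.0) -> float:
    """Secondary-voltage change for a displacement step, after attenuation."""
    return excitation_v * sensitivity * step_mm * attenuation


raw = step_signal_v(EXCITATION_VRMS, SENSITIVITY_V_PER_MM_PER_VEX,
                    TARGET_STEP_MM)
attenuated = step_signal_v(EXCITATION_VRMS, SENSITIVITY_V_PER_MM_PER_VEX,
                           TARGET_STEP_MM, ATTENUATION)

print(f"20 um step at the LVDT output: {raw * 1e3:.3f} mVrms")  # 2.024 mVrms
print(f"after attenuation x{ATTENUATION}: {attenuated * 1e3:.3f} mVrms "
      f"= {attenuated / LSB_V:.1f} LSB")
```

Swapping in the real divider ratio, and any gain or offset error of the external amplifier, shows directly how much of the resolution margin the front end consumes.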