Hi team,

I tested the Human/Non-human Classification lab from radar_toolbox_2_10_00_04 on the xwrl6432BOOST EVM. The radar sensor (xwrl6432BOOST EVM) is mounted at a height of 1.7 m with a 10 degree downward tilt.

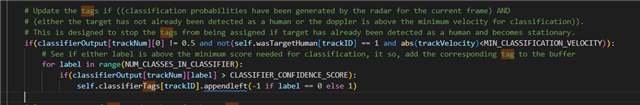

Case 1: The radar initially labels a table fan placed 1 m away as Non-human. However, when a person walks past beside the fan, the fan's label changes from Non-human to Human, and it stays Human until either the sensor or the fan is turned off.

Could you please explain why the table fan's classification behaves this way in the above scenario?

Case 2: If we move an object (a box) with an uneven Doppler signature in front of the radar sensor, it is detected and labeled as Human. Please explain the classification behavior in this case.

Thanks in advance.