Tool/software:

Hardware:

IWRL6432BOOST

Software:

video_doorbell_demo.Release.appimage on radar_toolbox_2_10_00_04

Industrial_Visualizer.exe

We want to evaluate machine-learning-based human vs. non-human classification with the video doorbell demo. We flashed the prebuilt firmware listed above (from radar_toolbox_2_10_00_04) onto the IWRL6432BOOST board and used the following modified chirp configuration:

% ***************************************************************

% long_range_state_machine.cfge: Used to detect the presence of humans

% in outdoor environments, specifically for video doorbells. Detects

% movement through the point cloud, which is fed into a state machine

% to increase detection robustness.

% ***************************************************************

sensorStop 0

channelCfg 7 3 0

chirpComnCfg 23 0 0 256 4 68 0

chirpTimingCfg 9.9 24 0 12.5 62

frameCfg 2 0 280 8 250 0

antGeometryCfg 0 1 1 2 0 3 0 0 1 1 0 2 2.418 2.418

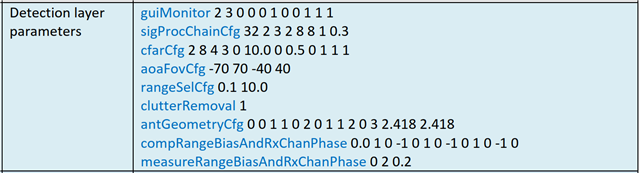

guiMonitor 2 0 0 0 0 1 1 0 1 1 1

sigProcChainCfg 64 2 1 1 0 0 0 15

cfarCfg 2 8 4 3 0 12.0 0 0.5 0 1 1 1

aoaFovCfg -80 80 -40 40

rangeSelCfg 0.1 8.0

clutterRemoval 1

compRangeBiasAndRxChanPhase 0.0 1.00000 0.00000 -1.00000 0.00000 1.00000 0.00000 -1.00000 0.00000 1.00000 0.00000 -1.00000 0.00000

adcDataSource 0 adc_data_0001_CtestAdc6Ant.bin

adcLogging 0

lowPowerCfg 1

factoryCalibCfg 1 0 40 0 0x1ff000

% Motion/Presence Detection Layer Parameters

mpdBoundaryArc 1 0.5 5 -30 30 0.5 2

mpdBoundaryArc 2 0.5 3 -70 -31 0.5 2

mpdBoundaryArc 3 0.5 3 31 70 0.5 2

stateParam 3 3 12 50 5 200

majorStateCfg 8 6 60 20 15 150 4 4

clusterCfg 1 0.5 2

% Tracking Layer Parameters

sensorPosition 0 0 1.2 0 0

gatingParam 3 2 2 2 4

allocationParam 6 10 0.1 4 0.5 20

maxAcceleration 0.4 0.4 0.1

trackingCfg 1 2 100 3 61.4 191.8 100

presenceBoundaryBox -3 3 0.5 7.5 0 3

% Classification Layer Parameters

microDopplerCfg 1 0 0.5 0 1 1 12.5 87.5 1

classifierCfg 1 3 4

rangeSNRCompensation 1 12 6 5 12

presenceGPIO 1

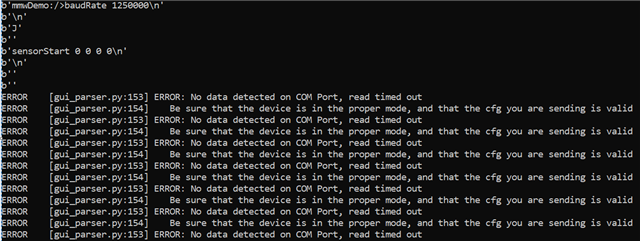

% baudRate 1250000

baudRate 115200

sensorStart 0 0 0 0
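A quick way to sanity-check the modified chirp settings is to recompute the chirp timing from the two chirp commands. The sketch below assumes the L-SDK argument order for `chirpComnCfg` and `chirpTimingCfg`, and assumes that the ADC sampling rate is 100 MHz divided by the first `chirpComnCfg` argument. The field names in the comments are illustrative rather than official, so please verify them against the CLI documentation for this toolbox version. Range resolution uses the standard FMCW relation ΔR = c / (2B), where B is the bandwidth swept during the ADC window.

```python
# Sketch: recompute chirp timing from the posted CLI commands.
# ASSUMPTIONS (verify against the L-SDK CLI documentation):
#   chirpComnCfg   = [digOutputSampRate, digOutputBitsSel, dfeFirSel,
#                     numAdcSamples, chirpTxMimoPatSel, rampEndTime_us, rxHpfSel]
#   chirpTimingCfg = [idleTime_us, adcSkipSamples, txStartTime_us,
#                     freqSlope_MHz_per_us, startFreq_GHz]
#   ADC sampling rate (MHz) = 100 / digOutputSampRate
C = 3e8  # speed of light in m/s

CFG_TEXT = """
chirpComnCfg 23 0 0 256 4 68 0
chirpTimingCfg 9.9 24 0 12.5 62
"""


def parse_cfg(text):
    """Map each CLI command name to its argument strings, skipping % comments."""
    cmds = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):
            continue
        name, *args = line.split()
        cmds[name] = args  # repeated commands (e.g. mpdBoundaryArc) keep the last one
    return cmds


def chirp_timing(cmds):
    comn = cmds["chirpComnCfg"]
    timing = cmds["chirpTimingCfg"]
    fs_mhz = 100.0 / float(comn[0])        # assumed sampling-rate mapping
    n_samples = int(comn[3])
    ramp_end_us = float(comn[5])
    skip_samples = int(timing[1])
    slope_mhz_per_us = float(timing[3])

    adc_time_us = n_samples / fs_mhz        # duration of the ADC capture window
    skip_time_us = skip_samples / fs_mhz    # time spent on skipped samples
    bandwidth_mhz = slope_mhz_per_us * adc_time_us  # sweep covered while sampling
    return {
        "fs_mhz": fs_mhz,
        "adc_time_us": adc_time_us,
        "fits_in_ramp": skip_time_us + adc_time_us <= ramp_end_us,
        "bandwidth_mhz": bandwidth_mhz,
        "range_res_m": C / (2 * bandwidth_mhz * 1e6),  # delta_R = c / (2B)
    }


if __name__ == "__main__":
    for key, value in chirp_timing(parse_cfg(CFG_TEXT)).items():
        print(key, value)
```

Under these assumptions the 256-sample window lasts about 58.9 µs and, together with the 24 skipped samples, fits inside the 68 µs ramp, giving roughly 736 MHz of sampled bandwidth and about 0.20 m range resolution.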

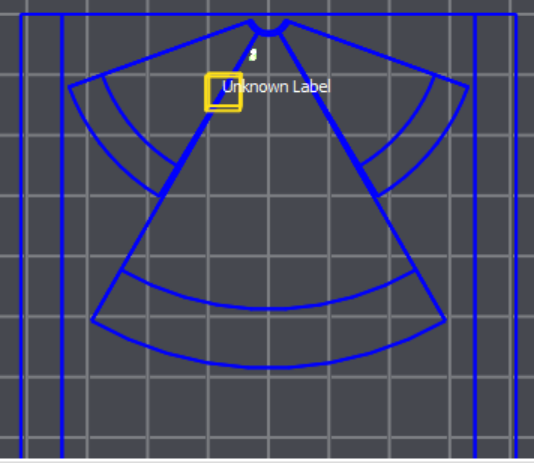

When we ran the video doorbell experiment, no bounding boxes were shown around either human or non-human objects (a box sometimes appears briefly and then disappears), and the label is always "unknown", so we cannot distinguish humans from non-human objects. Could you suggest how to perform machine-learning-based human vs. non-human classification with the video doorbell demo? Thank you very much.
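The bounding boxes in the visualizer most likely correspond to tracker outputs, and the classification label is likely attached per track, so a track that drops out would stay unlabeled. One thing worth checking is whether the frame period given to the tracker matches the one in `frameCfg`. The sketch below assumes the `frameCfg` field order listed in its comments and that the last `trackingCfg` argument is the tracker frame period in milliseconds, as in TI's people-counting tracker configurations. Both mappings are assumptions to confirm in the toolbox documentation.

```python
# Sketch: check that frameCfg and trackingCfg agree on frame timing.
# ASSUMPTIONS (hypothetical field mapping; confirm in the toolbox docs):
#   frameCfg    = [chirpsInBurst, chirpsAccum, burstPeriod_us,
#                  burstsInFrame, framePeriod_ms, numFrames]
#   trackingCfg = [..., framePeriod_ms]  (last argument)
CFG_TEXT = """
frameCfg 2 0 280 8 250 0
trackingCfg 1 2 100 3 61.4 191.8 100
"""


def parse_cfg(text):
    """Map each CLI command name to its argument strings, skipping % comments."""
    cmds = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):
            continue
        name, *args = line.split()
        cmds[name] = args
    return cmds


def frame_period_check(cmds, tol_ms=1e-6):
    frame = cmds["frameCfg"]
    burst_period_us = float(frame[2])
    bursts_per_frame = int(frame[3])
    frame_period_ms = float(frame[4])
    tracker_period_ms = float(cmds["trackingCfg"][-1])  # assumed: frame period in ms

    active_ms = burst_period_us * bursts_per_frame / 1000.0  # time spent on bursts
    return {
        "frame_period_ms": frame_period_ms,
        "tracker_period_ms": tracker_period_ms,
        "bursts_fit_in_frame": active_ms <= frame_period_ms,
        "periods_match": abs(frame_period_ms - tracker_period_ms) <= tol_ms,
    }


if __name__ == "__main__":
    result = frame_period_check(parse_cfg(CFG_TEXT))
    if not result["periods_match"]:
        print(f"frameCfg period {result['frame_period_ms']} ms != "
              f"trackingCfg period {result['tracker_period_ms']} ms")
```

With the posted values, frames run every 250 ms while `trackingCfg` ends in 100, so the check reports a mismatch. If the field mapping above holds, the tracker would be propagating its motion model with the wrong time step, which is one plausible reason tracks appear and then disappear.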