Hello,

We would like to adopt the recommended Self-Calibration procedure in order to minimize the variation in target signal strength caused by temperature changes.

Specifically, our current radar system performs all Self-Calibration in the field.

The Self-Calibration document (SPRACF4C) recommends that, for certain calibration items, the values obtained during factory calibration be reused in the field.

We would like to incorporate this mechanism.

We have evaluated the Self-Calibration.

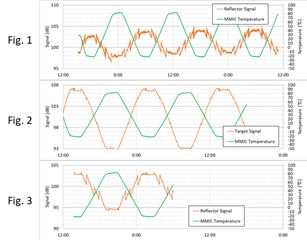

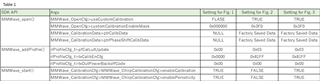

The orange graphs in Fig. 1 to 3 show the reflected signal intensity of the reflector, measured continuously while the radar and reflector were placed in a thermostatic chamber and the ambient temperature was varied from -40 deg C to +80 deg C. The settings used for Fig. 1 to 3 are shown in Table 1.

The green graphs plot the average of the 10 internal temperature sensors in the MMIC.

(Before the experiments, we confirmed that the thermostatic chamber itself produced no observable reflection at the same distance as the reflector.)

The same radar unit was used for all measurements in Fig. 1 to 3.

Q1: In the previous thread (e2e.ti.com/.../), it was advised to turn off run-time calibration (MMWave_ChirpCalibrationCfg>enablePeriodicity=false). Is this advice appropriate for the stated purpose?

(Which of the settings used for Fig. 1 and Fig. 2 is correct? If neither is recommended, please provide the correct settings.)

Q2: How much variation in signal intensity should we expect due to temperature? Since the Rx Gain LUT is in 2 dB increments, is there a way to limit the variation to around 2 dB?
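
To make clear what we mean by "variation" in Q2, here is a minimal sketch, assuming it is the peak-to-peak spread of the reflector's signal intensity (in dB) over the temperature sweep. The function name and the sample values are hypothetical and are not our measured data.

```python
def peak_to_peak_db(intensity_db):
    """Return max minus min of a sequence of intensities given in dB."""
    values = list(intensity_db)
    if not values:
        raise ValueError("need at least one sample")
    return max(values) - min(values)


# Hypothetical samples in dB (illustration only, not measured data).
samples_db = [30.0, 31.5, 29.0, 30.5]
spread = peak_to_peak_db(samples_db)

# Step size of the Rx Gain LUT as stated in Q2.
RX_GAIN_LUT_STEP_DB = 2.0

print(f"spread = {spread:.1f} dB")
print(f"within one LUT step: {spread <= RX_GAIN_LUT_STEP_DB}")
```

Our target would be for this spread to stay at or below roughly one LUT step.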

Best regards,

Yasuaki