Other Parts Discussed in Thread: IWR1443, IWR6843, IWR6843AOP, UNIFLASH

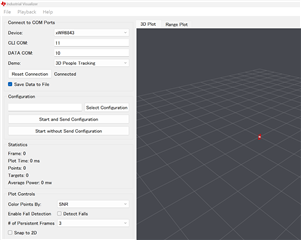

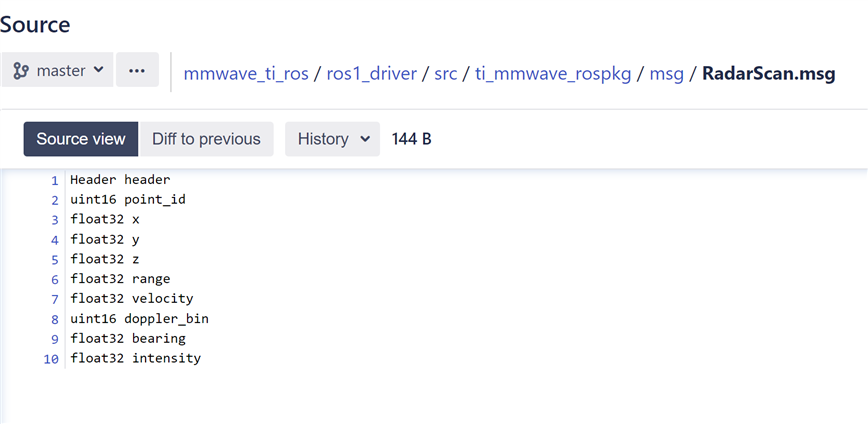

I would like to reproduce the demo shown in the video at the following link.

The video says to use a Linux PC that supports the Robot Operating System (ROS). Is it possible to run the demo on a Windows PC instead?

Also, is there a better way to run this demo?

https://www.ti.com/zh-tw/video/5625804515001#transcript-tab