Other Parts Discussed in Thread: IWR1843

Good morning!

I have been browsing the forums and toolboxes for a couple of months now, and I am still not sure where to start.

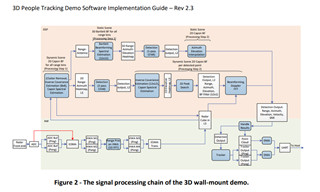

I am working on a Senior Design Capstone project for university, and I am trying to implement facial/eye tracking on the above-mentioned EVM without using the DCA board, which is outside my budget. I would like to access the dense point cloud data on-chip so that I can do some additional post-processing on the integrated MCU to locate the ears, nose, and eyes and/or other facial landmarks, rather than taking the data off-chip.

None of the examples I found had source code available. Any help or direction would be greatly appreciated. I am fairly new to the TI MCU family, having worked mostly with STM processors, and I find the toolchain and example projects somewhat convoluted and confusing, so any links would be very helpful.

Examples I have tried to find source code for:

People Detection and Vitals Monitoring