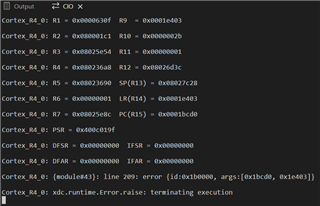

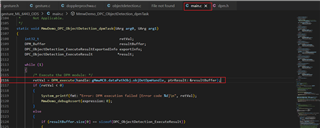

I changed RANGE_BIN_END to 20 so the device could capture features out to about 100 cm, but after flashing the firmware I’m unable to read any data from the data COM port.

I used JTAG for debugging, but I’ve run into an issue. Is there anything I might have overlooked, and how can I resolve it?