Hi Team/Expert,

I've written FPGA code for the TDC7201, using combined operation with each TDCx working in measurement mode 1. The external reference clock is 8 MHz (stability < ±30 ppm), and SCLK is 15 MHz, generated by the FPGA.
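For reference, here is a minimal sketch of how a measurement-mode-1 result is usually converted to time. It assumes the calibration-based formula from the TDC7200/TDC7201 datasheet: calCount = (CALIBRATION2 − CALIBRATION1) / (CALIBRATION2_PERIODS − 1), normLSB = clock period / calCount, TOF = TIME1 × normLSB. The helper name `tof_mode1` and the register values in the usage line are hypothetical, chosen only for illustration.

```python
def tof_mode1(time1, cal1, cal2, cal2_periods, f_clk_hz=8e6):
    """Convert a measurement-mode-1 TIME1 count to seconds (hypothetical helper).

    time1        -- raw TIME1 register value (ring-oscillator counts)
    cal1, cal2   -- raw CALIBRATION1 / CALIBRATION2 register values
    cal2_periods -- clock periods used for CALIBRATION2 (e.g. 2, 10, 20, 40)
    f_clk_hz     -- external reference clock frequency (8 MHz in this setup)
    """
    clock_period = 1.0 / f_clk_hz
    # Ring-oscillator counts per reference-clock period, from the two calibrations
    cal_count = (cal2 - cal1) / (cal2_periods - 1)
    # Normalized LSB: time represented by one ring-oscillator count
    norm_lsb = clock_period / cal_count
    return time1 * norm_lsb


# Made-up register values: 2000 counts per 125 ns clock period gives a 62.5 ps LSB,
# so TIME1 = 1600 corresponds to roughly 100 ns.
print(tof_mode1(time1=1600, cal1=2000, cal2=20000, cal2_periods=10))
```

Using the calibrated normLSB rather than a fixed nominal LSB keeps temperature and voltage drift of the ring oscillator out of the result.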

Using the FPGA itself as the start/stop generator, I've run some simulation tests on the TDC7201 with intervals ranging from 100 ns to 2 µs. Every step of the process appears to work correctly, but the results are not as good as expected.

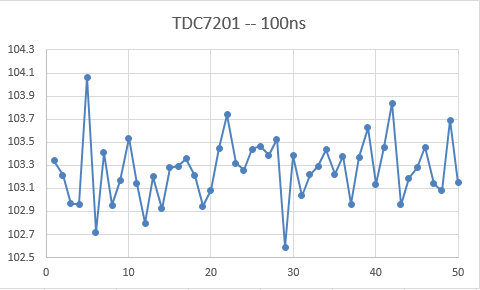

First, the random error (peak-to-peak) is always 1.4 ns to 2.5 ns, which corresponds to a 0.2 m to 0.4 m distance error for LIDAR. The test result for 100 ns is shown below as an example, with the measurement repeated 50 times.
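The distance figures follow from the round-trip relation d = c·Δt/2. A quick check of that arithmetic (the function name is only illustrative):

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_error_m(delta_t_s):
    """Round-trip timing error -> one-way distance error: d = c * dt / 2."""
    return SPEED_OF_LIGHT * delta_t_s / 2.0


for dt in (1.4e-9, 2.5e-9):
    print(f"{dt * 1e9:.1f} ns -> {range_error_m(dt):.3f} m")
```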

Since the TDC7201's standard deviation is specified as 35 ps, I don't think the peak-to-peak random error should be this large, but I have no idea what's wrong here.
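Peak-to-peak spread and standard deviation are different statistics, so it helps to compute σ from the same 50 readings before comparing against the 35 ps figure. For Gaussian noise, about 99.7% of readings fall within ±3σ, so σ = 35 ps would put nearly all readings inside roughly 210 ps. An observed 1.4 ns spread is about 40 times the quoted σ. A minimal sketch using Python's `statistics` module; the helper names are hypothetical, and `samples_s` stands for whatever TOF values (in seconds) the FPGA reads out:

```python
import statistics

SPEC_SIGMA_S = 35e-12  # standard deviation quoted for the TDC7201


def summarize(samples_s):
    """Return (mean, sample standard deviation, peak-to-peak) of repeated TOF readings."""
    mean = statistics.mean(samples_s)
    sigma = statistics.stdev(samples_s)
    p2p = max(samples_s) - min(samples_s)
    return mean, sigma, p2p


def sigma_ratio(samples_s):
    """How many times larger the measured sigma is than the 35 ps figure."""
    _, sigma, _ = summarize(samples_s)
    return sigma / SPEC_SIGMA_S


# Ratio of the observed peak-to-peak spread to the quoted sigma (about 40)
print(1.4e-9 / SPEC_SIGMA_S)
```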

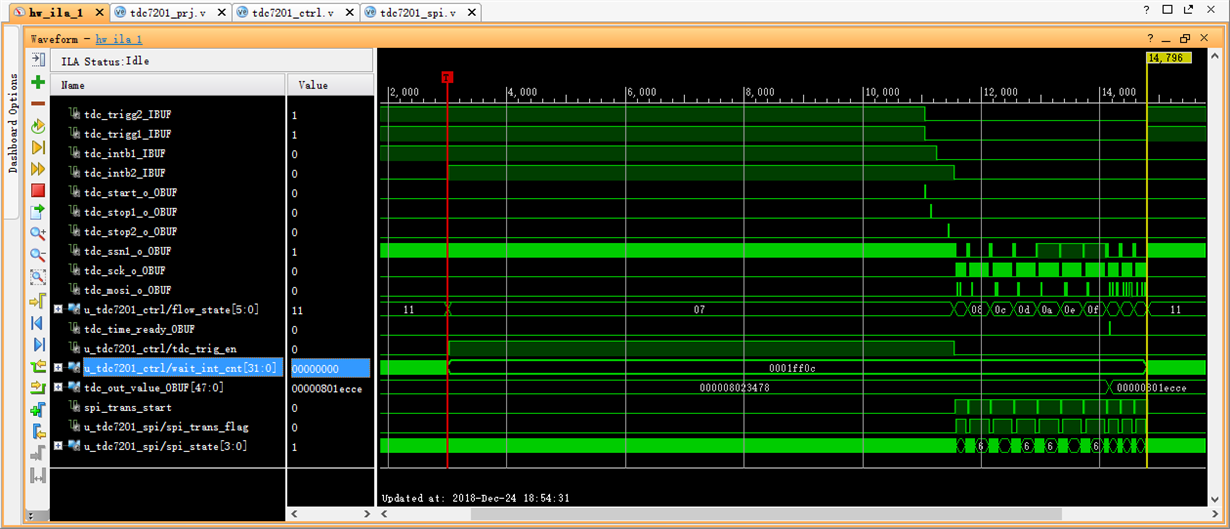

Second, every time I clear the NEW_MEAS_INT bit in the TDCx_INT_STATUS register by writing a 1 to it over SPI, I have to wait as long as 8.73 ms for both INTBx pins to return high.
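For comparison, the write that clears the flag should be a two-byte SPI frame on the selected CSBx. The sketch below assumes the command-byte layout (bit 6 = R/W with 1 = write, bits 5:0 = register address) and INT_STATUS at address 0x02 with NEW_MEAS_INT in bit 0, as I recall them from the TDC7200/TDC7201 register map. These should be verified against the datasheet. `spi_frame` is a hypothetical helper, not part of any library.

```python
# Register-map details are assumptions recalled from the TDC7200/TDC7201
# datasheet; verify them against the current datasheet before relying on them.
WRITE = 0x40           # bit 6 of the command byte: 1 = write, 0 = read
INT_STATUS_ADDR = 0x02
NEW_MEAS_INT = 0x01    # bit 0 of INT_STATUS; writing 1 clears it


def spi_frame(addr, data=None):
    """Build the bytes for a single-register access (no auto-increment)."""
    if not 0 <= addr <= 0x3F:
        raise ValueError("address must fit in 6 bits")
    if data is None:
        # Read: command byte, then a dummy byte while the device shifts out data
        return bytes([addr, 0x00])
    return bytes([WRITE | addr, data & 0xFF])


clear_frame = spi_frame(INT_STATUS_ADDR, NEW_MEAS_INT)
print(clear_frame.hex())
```

Comparing the bytes actually seen on MOSI against this expected frame is one way to confirm that the clear command is reaching the intended TDC.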

As shown in the waveform below, I built a counter named “wait_int_cnt” to measure this time and got 0x1ff0c (131,020 cycles), which corresponds to 8.73 ms at the 15 MHz clock. During this period my program does nothing but wait for both INTBx pins to go high.

This is another serious problem: because of this long wait, the LIDAR measurement rate is limited to less than 110 Hz.
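The wait alone caps the rate near 1 / 8.73 ms ≈ 114.5 Hz. Any further per-cycle time pushes the rate lower, which is consistent with the < 110 Hz observed. In the sketch below, `other_s` is a hypothetical placeholder for start/stop generation, SPI reads, and processing.

```python
def max_rate_hz(wait_s, other_s=0.0):
    """Upper bound on measurement rate when each cycle spends wait_s waiting
    for INTB to return high plus other_s on everything else."""
    return 1.0 / (wait_s + other_s)


print(f"{max_rate_hz(8.73e-3):.1f} Hz")            # INTB wait alone
print(f"{max_rate_hz(8.73e-3, 0.5e-3):.1f} Hz")    # with a hypothetical 0.5 ms of other work
```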

Is this 8.73 ms wait normal? Is there anything I should check, or any advice on how to shorten it?

Thanks a lot for your great support on this!

Best regards and happy new year,

Mark Lewis