Other Parts Discussed in Thread: TMP61, PGA302

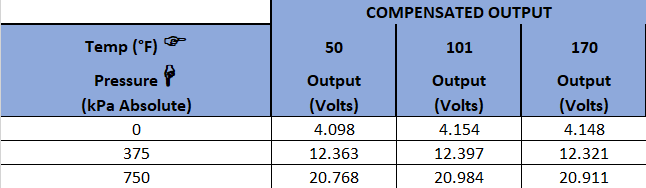

I have tried to temperature-compensate a bonded foil strain gage sensor using the PGA305EVM-034 board. I am using the TMP61 thermistor in single-ended mode, with both the thermistor and the sensor in the oven. The eval board is outside the oven.

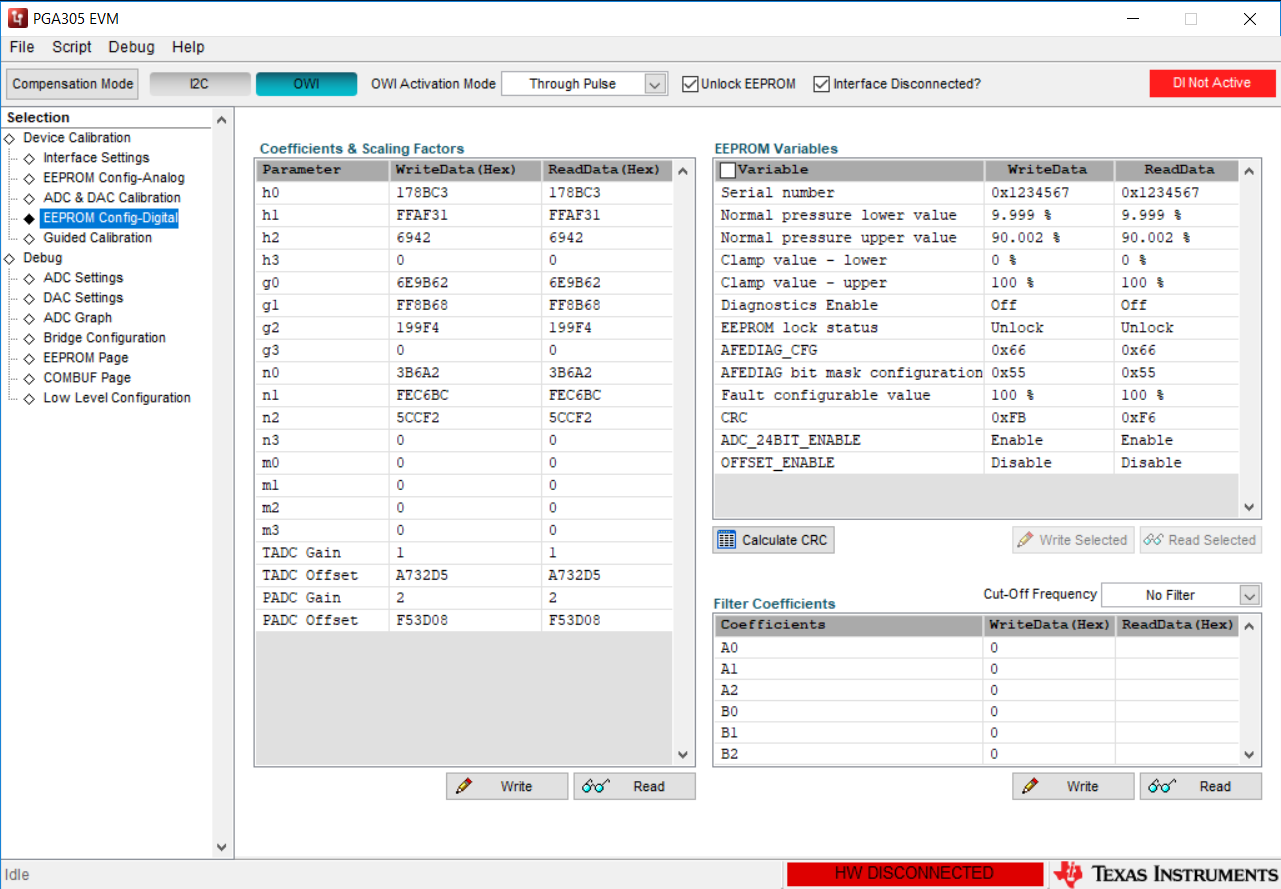

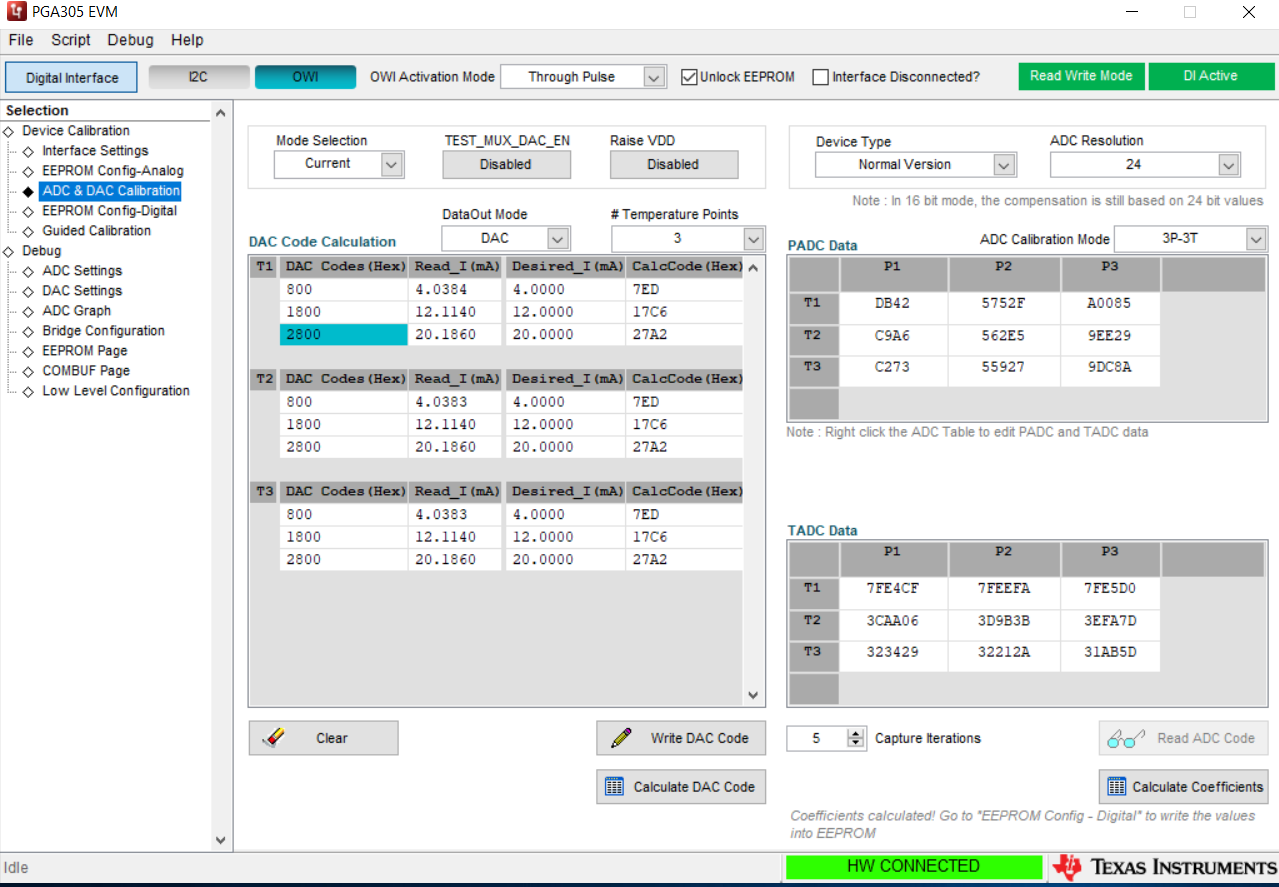

The data collected over temperature, as shown in the attachment, appears reasonable. However, after calculating the coefficients using the GUI and downloading them to the chip, the full-scale output is way off. A screenshot of the coefficients is also attached.

Can you please tell me what I am doing incorrectly?