Other Parts Discussed in Thread: IWR6843

Hello TI team,

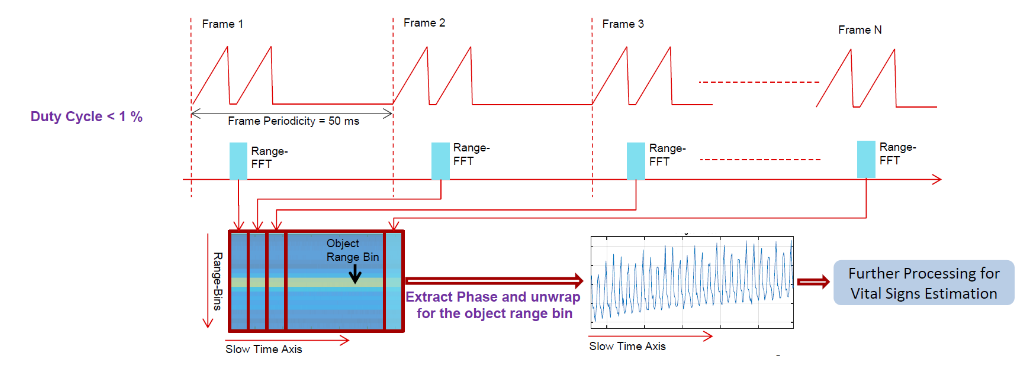

I've been looking into an idea of "slow-time" data processing. It is described in the vital signs lab, and here is the screenshot that explains it in a nutshell:

I'm interested in applying this principle to point cloud generation rather than vital signs detection. I'd like to detect very slow movement, about 0.5-5 cm/s, and this approach looks promising because it still allows a higher-FPS chirp configuration. I have developed a partially working prototype in Matlab, using raw data captures recorded with your DCA1000 board. The chirp configuration used for the captures had a frame rate of 16 FPS.

My question is about the maximum velocity for slow-time processing and whether there are ways to increase it. Currently, the slow-time algorithm works as follows:

- Take the 1st range-FFT of each frame and collect frames for 1000 ms. This gives 16 range-FFTs in total, which I treat as one slow-time frame.

- Process the slow-time frames and extract the necessary information (point cloud data in my case).
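To make the two steps above concrete, here is a minimal sketch in plain Python (not my Matlab prototype). It simulates one range bin sampled once per frame and takes a DFT across the 16 slow-time samples. The function names, the 5 mm wavelength (roughly 60 GHz) and the single ideal target are assumptions for illustration only.

```python
import cmath
import math

def slow_time_samples(velocity_mps, wavelength_m, period_s, n):
    """Simulate one range bin sampled once per frame (ideal single target).

    The phase advances by 4*pi*v*T/lambda between consecutive samples.
    """
    step = 4.0 * math.pi * velocity_mps * period_s / wavelength_m
    return [cmath.exp(1j * step * k) for k in range(n)]

def doppler_dft(samples):
    """Plain DFT across the slow-time axis (assumes uniform sampling)."""
    n = len(samples)
    return [
        sum(s * cmath.exp(-2j * math.pi * m * k / n) for k, s in enumerate(samples))
        for m in range(n)
    ]

def peak_velocity(samples, wavelength_m, period_s):
    """Velocity of the strongest Doppler bin, mapped to a signed value."""
    n = len(samples)
    spectrum = doppler_dft(samples)
    peak = max(range(n), key=lambda m: abs(spectrum[m]))
    if peak >= n // 2:
        peak -= n  # upper half of the DFT holds negative Doppler bins
    doppler_hz = peak / (n * period_s)
    return doppler_hz * wavelength_m / 2.0

WAVELENGTH_M = 0.005     # assumed, roughly a 60 GHz carrier
FRAME_PERIOD_S = 0.0625  # 16 FPS
NUM_SAMPLES = 16         # 1000 ms of frames

# A target inside the unambiguous range is recovered correctly.
inside = slow_time_samples(0.01, WAVELENGTH_M, FRAME_PERIOD_S, NUM_SAMPLES)
v_inside = peak_velocity(inside, WAVELENGTH_M, FRAME_PERIOD_S)

# A target beyond the unambiguous range wraps around (aliases).
outside = slow_time_samples(0.03, WAVELENGTH_M, FRAME_PERIOD_S, NUM_SAMPLES)
v_outside = peak_velocity(outside, WAVELENGTH_M, FRAME_PERIOD_S)

print(v_inside, v_outside)
```

In this idealized setup a 0.01 m/s target lands on its true velocity, while a 0.03 m/s target wraps to the opposite sign, which is the limitation I am asking about.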

With this setup I get a maximum velocity of about 0.02 m/s.

It is calculated as v_max = lambda / (4 * T_chirp), where T_chirp = 62.5 ms (the time between consecutive frames).
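For reference, the arithmetic behind that number can be sketched as follows. The 60 GHz carrier is an assumption (the exact start frequency depends on the chirp config), and the velocity resolution line uses the standard lambda / (2 * N * T) relation, which is not stated above.

```python
C = 299_792_458.0  # speed of light, m/s

def max_unambiguous_velocity(carrier_hz, sample_period_s):
    """v_max = lambda / (4 * T), with T the slow-time sample period."""
    wavelength = C / carrier_hz
    return wavelength / (4.0 * sample_period_s)

def velocity_resolution(carrier_hz, sample_period_s, num_samples):
    """v_res = lambda / (2 * N * T) for N uniformly spaced samples."""
    wavelength = C / carrier_hz
    return wavelength / (2.0 * num_samples * sample_period_s)

CARRIER_HZ = 60e9          # assumed carrier frequency
FRAME_PERIOD_S = 1.0 / 16  # 62.5 ms between consecutive frames
NUM_SLOW_SAMPLES = 16      # one range-FFT per frame over 1000 ms

v_max = max_unambiguous_velocity(CARRIER_HZ, FRAME_PERIOD_S)
v_res = velocity_resolution(CARRIER_HZ, FRAME_PERIOD_S, NUM_SLOW_SAMPLES)

print(f"v_max ~ {v_max:.4f} m/s, v_res ~ {v_res:.4f} m/s")
```

Under these assumptions v_max comes out at roughly 0.02 m/s, and taking every 4th frame instead of every frame would only make T larger and v_max smaller.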

To increase the maximum velocity, at first I thought I could just sample range-FFTs within the fast-time frames, for example take every 4th range-FFT and add them all to the stack, over 1000 ms. But I was getting strange-looking range-Doppler heatmaps with this arrangement. Then I realized this is probably because the fast-time range-FFTs are not evenly distributed in time: there is a silent period in each frame while the DSP work is done. Something like this:

///////////////////--------DSP time-----///////////////////--------DSP time-----///////////////////--------DSP time-----///////////////////--------DSP time-----...

Could you confirm this, please?

So I cannot sample, let's say, every 4th range-FFT for 16 frames for slow-time processing, because the idle time between samples in my slow-time stack would not be uniform. The sampled range-FFTs would be separated by larger idle times, whereas I would like to treat them as the range-FFTs of one frame:

//// //// //// //// ...
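To illustrate what I mean by uneven spacing, here is a small sketch that computes the timestamps of the selected range-FFTs. The chirps-per-frame count and the chirp period are hypothetical placeholders, not values from my actual chirp config; only the 62.5 ms frame period and the "every 4th" selection come from the description above.

```python
def selected_chirp_times(num_frames, frame_period_s, chirps_per_frame,
                         chirp_period_s, step):
    """Timestamps of every `step`-th chirp, assuming chirps start at frame start."""
    times = []
    for frame in range(num_frames):
        for chirp in range(0, chirps_per_frame, step):
            times.append(frame * frame_period_s + chirp * chirp_period_s)
    return times

FRAME_PERIOD_S = 0.0625  # 16 FPS
CHIRPS_PER_FRAME = 16    # hypothetical
CHIRP_PERIOD_S = 200e-6  # hypothetical chirp-to-chirp time
STEP = 4                 # take every 4th range-FFT

times = selected_chirp_times(3, FRAME_PERIOD_S, CHIRPS_PER_FRAME,
                             CHIRP_PERIOD_S, STEP)

# Spacing between consecutive selected samples, rounded to suppress float noise.
gaps = [later - earlier for earlier, later in zip(times, times[1:])]
distinct_gaps = sorted({round(g, 9) for g in gaps})

print(distinct_gaps)
```

With these placeholder numbers there are two very different spacings: a short one inside each burst and a long one across the idle part of the frame. A standard FFT along this axis assumes a single constant spacing, which I suspect is why the heatmaps look wrong.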

I was wondering if you could provide any advice on this. Is there a way to compensate for the "DSP time" when I calculate the range-Doppler heatmap for slow-time processing with every 4th range-FFT selected? Or is it impossible in theory, so that I can only get v_max = 0.02 m/s at 16 FPS with the slow-time data sampled once per fast-time frame? I need to keep the original point cloud DSP processing, so I cannot remove that part from the firmware.