Other Parts Discussed in Thread: IWR6843

Hi, I am trying to partially reproduce the autonomous robotics lab from the Industrial Toolbox.

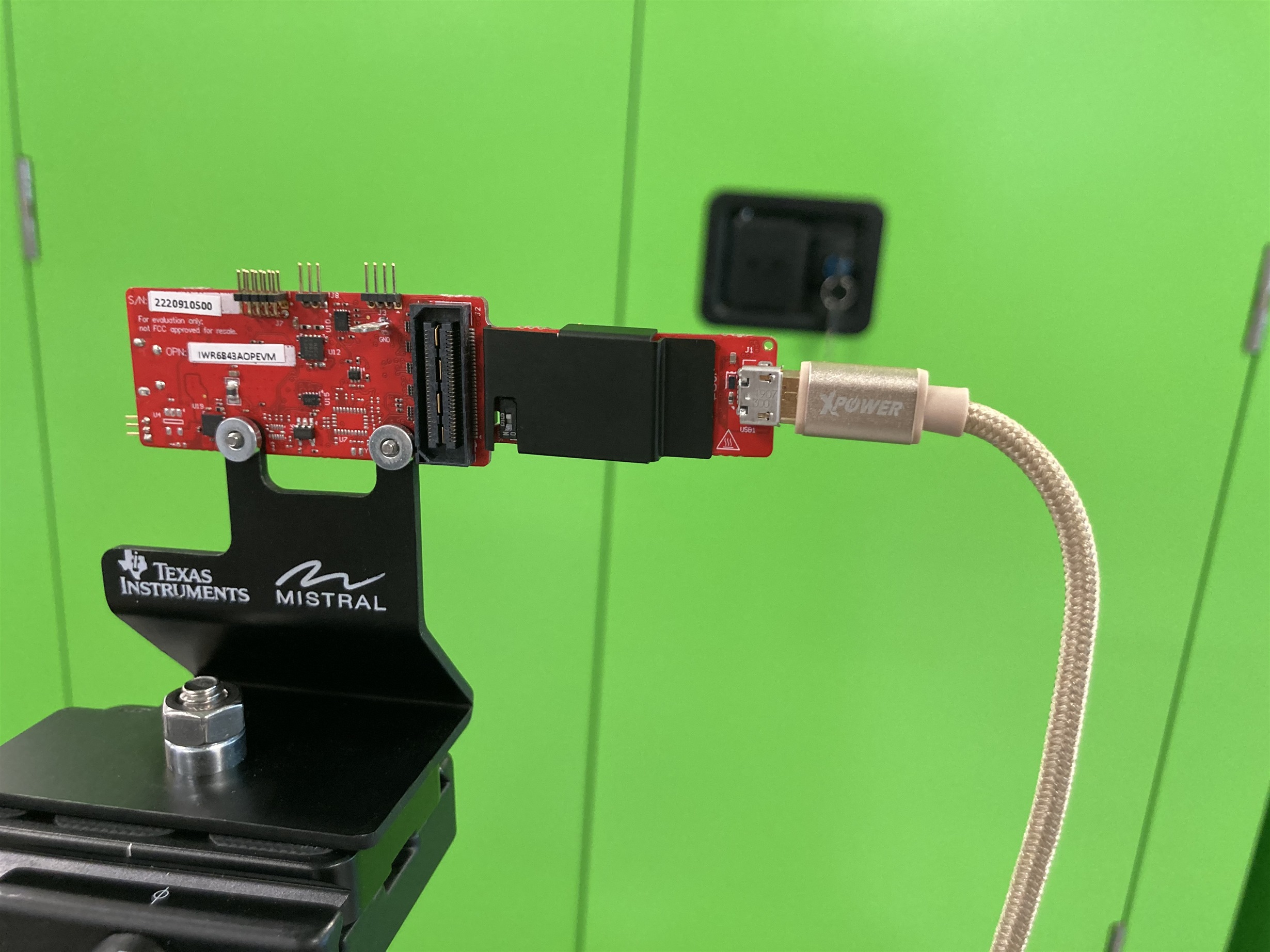

Here is my setup:

I am using the IWR6843AOPEVM with the out-of-box demo and have loaded the corresponding configuration (copied from the autonomous robotics lab).

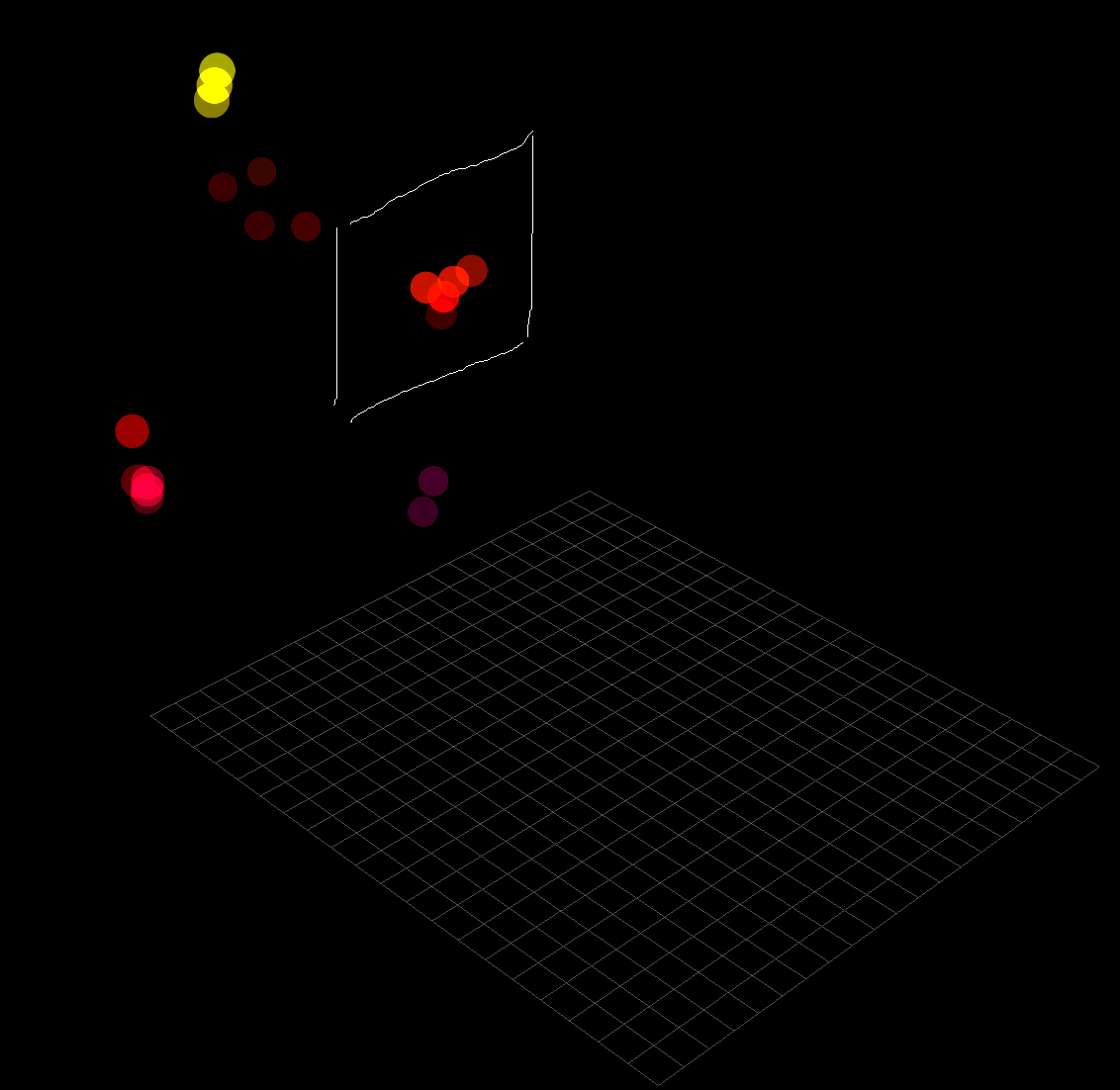

Experiment setup: I pointed the radar toward a 2 m x 1 m metal surface. However, the detection result is not as good as expected. It shows only a few points, and I cannot tell that it is a surface just by looking at them.

The hand-drawn rectangle marks where the metal surface is, and only a few points fall inside it.

What can I do so that the detected points look like a surface?

I have tried reducing the range-direction CFAR threshold from 12 dB to 8 dB, but that only gives a few more points. It still does not look like a surface.
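To illustrate why lowering the threshold only adds a few points, here is a minimal sketch of a generic textbook cell-averaging CFAR (CA-CFAR) on a 1-D power profile. It is not the out-of-box demo's implementation, which differs in details. The function name `ca_cfar`, its parameters, and the synthetic profile are hypothetical, chosen only for illustration. A cell is detected when its power exceeds the average of the surrounding training cells (guard cells excluded) by more than the threshold. So going from 12 dB to 8 dB only admits cells whose power sits between roughly 6.3x and 15.8x the local noise estimate.

```python
from typing import List


def ca_cfar(power: List[float], guard: int, train: int, threshold_db: float) -> List[int]:
    """Hypothetical generic CA-CFAR: return indices of cells whose linear power
    exceeds the local noise estimate by more than threshold_db."""
    scale = 10 ** (threshold_db / 10.0)  # convert dB threshold to a linear power ratio
    detections = []
    n = len(power)
    for i in range(n):
        # Training cells on each side, skipping `guard` cells next to the cell under test
        left = power[max(0, i - guard - train):max(0, i - guard)]
        right = power[i + guard + 1:i + guard + 1 + train]
        cells = left + right
        if not cells:
            continue
        noise = sum(cells) / len(cells)  # cell-averaging noise estimate
        if power[i] > scale * noise:
            detections.append(i)
    return detections


# Synthetic profile (hypothetical data): flat noise floor of 1.0,
# a strong return (~14.8 dB above noise) and a weak one (10 dB above noise).
profile = [1.0] * 40
profile[10] = 30.0
profile[25] = 10.0

print(ca_cfar(profile, guard=2, train=4, threshold_db=12.0))  # [10]
print(ca_cfar(profile, guard=2, train=4, threshold_db=8.0))   # [10, 25]
```

Only the weak return, which falls between the two thresholds, is gained by the change. Cells that sit close to the noise floor are never detected at either setting.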