Other Parts Discussed in Thread: MMWAVEICBOOST, IWR6843

Hi,

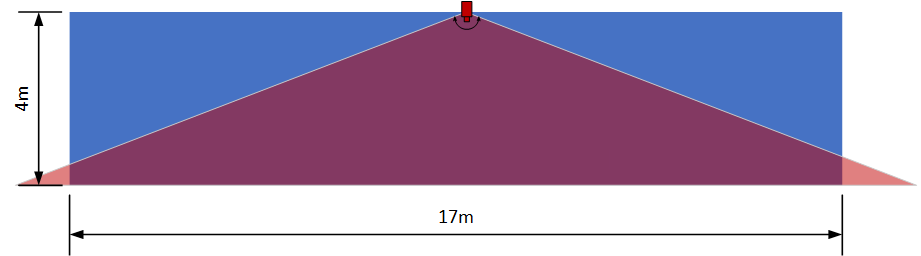

We are developing a security system and plan to integrate mmWave sensors; we have only just started experimenting with radar technology. The maximum range we need for our application is 20 m. Within this range we would like to detect humans and track them accurately. Additionally, we plan to detect static objects, such as walls or parked vehicles, and to calculate the distance between humans and these static objects.

TI's IWR6843 mmWave sensors seem to fit our application perfectly, so we have several of your ISK, ODS, AoP and MMWAVEICBOOST devices here for testing purposes. We have gone through the labs in the Resource Explorer; the Area Scanner and People Counting labs in particular are similar to our use case. We have also started looking at the code in CCS while studying the mmWave SDK Module Documentation, but we are stuck on the details of the code flow. What is the proper approach to get up to speed quickly with the flow of the code? Is there a specific part of your integrated systems and code on which we should focus? It would be easier for us to adopt all the standard functions (target lists, gtrack, etc.) and focus on using them in our application, without analysing the whole code in detail. How do other developers get started with developing their own applications? It would be great if you could tell us which specific modules we should focus on and modify, so that we can build our application on the available API and code.

Regards,

Divya