Dear All,

I am trying to run the MELP code on a DSK 6713 (processing a buffer of about 6 seconds of recorded speech),

and I noticed something odd: the codebook for vector quantization of, e.g., the Fourier magnitudes is defined in the code as:

float fsvq_cb[2560] = {
    0.822998, 1.496297, 0.584847, 1.313507, 0.846008, ...
};

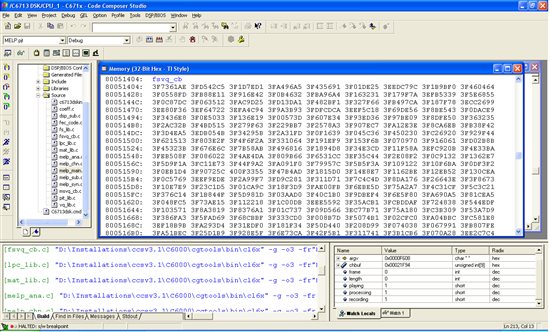

whereas when I view it in memory using the 32-bit floating-point format, the values are as follows:

0.9507092

1.6661

0.6152163

1.285847

0.7630396

....

These are close to the values in the code but not identical. Can you explain where this difference comes from? For other initialized variables, such as the window coefficients, the numbers in the code and in the memory view are the same.
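One check that might narrow this down is a rough sketch like the one below. It rests on the assumption (not confirmed) that the table is loaded correctly and then changed at run time. The helper count_mismatches and the idea of keeping a second, untouched copy of the initializer values are hypothetical and not part of the MELP sources.

```c
#include <math.h>
#include <stddef.h>

/* Hypothetical helper (not from the MELP sources): counts how many entries
   of `table` differ from the reference copy `ref` by more than `tol`. */
size_t count_mismatches(const float *table, const float *ref,
                        size_t n, float tol)
{
    size_t i;
    size_t bad = 0;

    for (i = 0; i < n; i++) {
        /* fabsf keeps the comparison in single precision */
        if (fabsf(table[i] - ref[i]) > tol) {
            bad++;
        }
    }
    return bad;
}

/* Possible use: call count_mismatches(fsvq_cb, reference_copy, 2560, 1e-6f)
   once at the very start of main() and again after initialization.
   `reference_copy` is an assumed second array holding the same initializers. */
```

If the count is zero right after loading and nonzero later, something in the program is writing to the table; if it is already nonzero at the start of main(), the difference would have to come from the build or load step.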