Hi again,

To initialize per-core data structures, we currently have loops which are intended to be executed once by each core, and look like this:

    int nOmpNumProcs = omp_get_num_procs();

    #pragma omp parallel for schedule(static, 1) private(i)
    for(i=0; i < nOmpNumProcs; i++)
    {
        printf("core %d was initialized", omp_get_thread_num());
        fflush(stdout);
    }

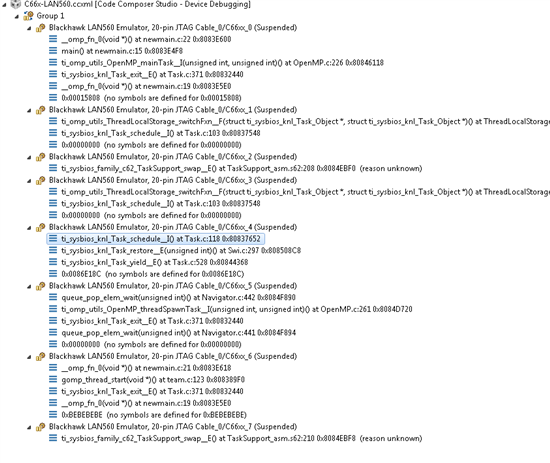

However, even with a static schedule and a chunk size of 1, the output (where the [C66xx_n] prefix is the core that printed the line) suggests that only core 0 (first iteration) and core 6 ran the code:

    [C66xx_0] core 0 was initialized
    [C66xx_6] core 1 was initialized
    [C66xx_6] core 2 was initialized
    [C66xx_6] core 3 was initialized
    [C66xx_6] core 4 was initialized
    [C66xx_6] core 5 was initialized
    [C66xx_6] core 6 was initialized
    [C66xx_6] core 7 was initialized

So although omp_get_thread_num() returns the intended result, the actual scheduling seems to be done in a dynamic way.

Is there a better way to execute code in parallel which relies on core-local resources like L2SRAM?

Our legacy code has a clearly separated initialization phase (which prepares data structures for multiple parallel loops), so unfortunately it isn't that easy to do the initialization inside the loop :(
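To make the question more concrete, here is a minimal sketch of the kind of separate initialization step I have in mind. It assumes a plain parallel region (instead of a parallel for), in which the body is executed once by every thread of the team. Whether each OpenMP thread then stays on the same core for the later parallel loops is exactly the part I am unsure about. The names `init_per_thread`, `initialized` and `MAX_THREADS` are hypothetical and only there for illustration; the serial fallback just lets the sketch build without OpenMP enabled.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#else
/* Serial fallback so the sketch also builds when OpenMP is not enabled. */
static int omp_get_thread_num(void) { return 0; }
#endif

#define MAX_THREADS 64   /* hypothetical upper bound, not from our real code */

/* Hypothetical per-thread flag standing in for the real core-local setup. */
static int initialized[MAX_THREADS];

/* Hypothetical helper: runs the init body once per thread of the team and
 * returns how many threads executed it. */
static int init_per_thread(void)
{
    int ran = 0;

    /* Every thread of the team executes this block exactly once;
     * there is no loop whose iterations need to be scheduled. */
    #pragma omp parallel reduction(+:ran)
    {
        int tid = omp_get_thread_num();

        if (tid < MAX_THREADS)
            initialized[tid] += 1;   /* each thread touches only its own slot */

        ran += 1;                    /* summed over all threads at the end */
    }

    return ran;
}
```

Calling `init_per_thread()` once at the start of the initialization phase would then replace the loop above. This only helps if the runtime keeps a fixed thread-to-core mapping across parallel regions, which is an assumption on my side.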

Thank you in advance, Clemens