While debugging a VC5502 application on a custom board, I captured input and output data in two signed 32-bit integer (Int32) arrays of 7200 elements each and stopped at a breakpoint in my code. I then tried to use the Dual Time graph feature of CCSv5.5 to examine the input (testBufferA) and output (testBufferB) of a processing block.

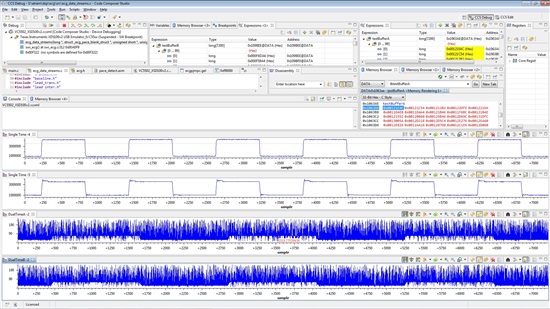

Both graphs look as if the data had been plotted as unsigned 8-bit integers (Uint8) rather than Int32. See below.

Next, I opened a Single Time graph of testBufferA. That graph looked correct.

I also opened a second Single Time graph of testBufferB. That graph looked correct too.

Here is a screen capture of my CCSv5.5 session with the goofy Dual Time graph at the bottom (DualTimeA-2 and DualTimeB-2) and the two Single Time graphs of the same two arrays (Single Time-4 and Single Time-5).

Here are some details of my project:

- Device: TMS320VC5502
- Emulator: XDS100v2
- Host: Code Composer Studio version 5.5.0.00077 on a Dell Precision M6800 running Windows 7 64-bit, Service Pack 1

(We do not observe this behavior when we debug the same application from CCSv5.5 on Ubuntu 12.04 LTS 32-bit workstations.)

The project comes from legacy code (circa CCSv2.21) that is built in a Linux environment using:

- TMS320C55x Code Generation Tools, Release Version 2.56
- DSP/BIOS 4.90.270

I created a CCSv5.5 workspace and project and imported the C/C++ executable from the Linux build; we do NOT build the application from CCSv5.5. From CCSv5.5 I can attach to the VC5502 target with the XDS100v2 emulator and step through the code. I had to modify my GEL file to get past some error messages that were appearing, and I am attaching it. If you find issues with my GEL file, I would be grateful for any guidance.