Hi

I have two devices running my own project, which is based on the Multi_role example.

One device acts as the master and the other as the slave. On both devices, INIT_PHYPARAM_MIN_CONN_INT and INIT_PHYPARAM_MAX_CONN_INT are set to 80, i.e. 80 × 1.25 ms = 100 ms.
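
The interval value is expressed in units of 1.25 ms. A minimal sketch of that conversion, done in integer microseconds to avoid floating point; the helper and macro names are hypothetical and not part of the TI stack:

```c
#include <assert.h>
#include <stdint.h>

/* One connection-interval unit is 1.25 ms = 1250 us (hypothetical macro name). */
#define CONN_INTERVAL_UNIT_US 1250u

/* Convert a raw interval value, such as the 80 used for
 * INIT_PHYPARAM_MIN_CONN_INT / INIT_PHYPARAM_MAX_CONN_INT, to microseconds. */
static uint32_t conn_interval_to_us(uint16_t interval_units)
{
    /* The Core Specification allows 6 (7.5 ms) up to 3200 (4 s). */
    assert(interval_units >= 6u && interval_units <= 3200u);
    return (uint32_t)interval_units * CONN_INTERVAL_UNIT_US;
}
```

For the value 80 used here, this returns 100000 us, i.e. 100 ms.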

Other relevant settings:

- DEFAULT_INIT_PHY = INIT_PHY_1M
- MAX_NUM_PDU = 5
- MAX_PDU_SIZE = 255
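
How much characteristic data one write can carry depends on the ATT MTU rather than on MAX_PDU_SIZE directly. A sketch of the arithmetic, assuming MAX_PDU_SIZE counts the 4-byte L2CAP header and that both sides actually negotiated the larger MTU through an MTU exchange (without one, the default ATT MTU of 23 leaves only 20 bytes per write). All names are hypothetical:

```c
#include <assert.h>
#include <stdint.h>

#define L2CAP_HEADER_LEN     4u  /* length (2 bytes) + channel ID (2 bytes) */
#define ATT_WRITE_HEADER_LEN 3u  /* opcode (1 byte) + attribute handle (2 bytes) */
#define ATT_MTU_DEFAULT     23u  /* MTU in effect until an MTU exchange succeeds */

/* Largest ATT MTU that an L2CAP PDU of max_pdu_size bytes can carry. */
static uint16_t att_mtu_from_pdu(uint16_t max_pdu_size)
{
    assert(max_pdu_size > L2CAP_HEADER_LEN);
    return (uint16_t)(max_pdu_size - L2CAP_HEADER_LEN);
}

/* Bytes of characteristic value that fit in one Write Request
 * (or Write Command) for a given negotiated ATT MTU. */
static uint16_t max_write_value_len(uint16_t att_mtu)
{
    assert(att_mtu >= ATT_MTU_DEFAULT);
    return (uint16_t)(att_mtu - ATT_WRITE_HEADER_LEN);
}
```

For MAX_PDU_SIZE = 255 this gives an ATT MTU of 251 and 248 bytes of value per write. Note that the link-layer payload is at most 251 bytes even with data length extension, so a full 255-byte L2CAP PDU is split across two link-layer packets.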

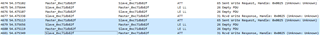

The master connects to the other device and starts writing data to the characteristic with GATT_WriteCharValue. I detect a successful write through the ATT_WRITE_REQ event in GATT. Judging by the timing, roughly 165 ms elapses between sending the write and receiving the acknowledgment.

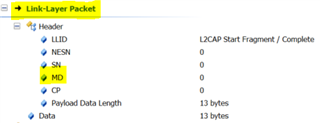

It looks as if the data packet is sent in one connection interval and the acknowledgment arrives in another.
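
This is consistent with how ATT works: a Write Request is a request/response procedure, and the Core Specification does not allow a client to send another request on the same bearer until the response to the previous one has arrived. Throughput with Write Requests is therefore bounded by one value per round trip, however many packets the link could otherwise carry. A small calculation, assuming a 248-byte payload from a negotiated MTU of 251 (an assumption; the post does not state the payload size); the function name is hypothetical:

```c
#include <assert.h>
#include <stdint.h>

/* Application throughput in bytes per second when one payload of
 * payload_bytes is delivered every period_us microseconds.
 * Integer division truncates the result. */
static uint32_t throughput_bytes_per_s(uint32_t payload_bytes, uint32_t period_us)
{
    assert(period_us > 0u);
    return (uint32_t)(((uint64_t)payload_bytes * 1000000u) / period_us);
}
```

For the measured round trip of about 165 ms, throughput_bytes_per_s(248, 165000) returns 1503 bytes/s; with the default 20-byte payload it is 121 bytes/s.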

From what I have read, BLE should in theory be able to send multiple packets within one connection interval, which in turn increases the data rate.
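
When several writes fit into each connection event, the interval is amortized over all of them. A rough upper-bound sketch with a hypothetical function name; it ignores retransmissions and assumes the connection event is long enough for every write, and the figure of 5 writes per event is only an illustrative guess based on MAX_NUM_PDU = 5, not a guaranteed limit:

```c
#include <assert.h>
#include <stdint.h>

/* Upper bound on application throughput (bytes per second) when
 * writes_per_event writes, each carrying payload_bytes of characteristic
 * data, are delivered in every connection event of length interval_us. */
static uint32_t multi_packet_throughput(uint32_t writes_per_event,
                                        uint32_t payload_bytes,
                                        uint32_t interval_us)
{
    assert(interval_us > 0u);
    uint64_t bytes_per_event = (uint64_t)writes_per_event * payload_bytes;
    return (uint32_t)((bytes_per_event * 1000000u) / interval_us);
}
```

For a 100 ms interval and 248-byte writes this gives 2480 bytes/s for one write per event and 12400 bytes/s for five.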

What do I need to do to get this speed increase?

I know that decreasing the connection interval increases the data rate, and so does using write without response (no confirmation).
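
Write without response is faster because the client does not wait for an ATT response: it can keep handing Write Commands to the stack until its transmit buffers are full, and the link layer sends as many as fit into each connection event. The link layer still acknowledges every data packet and retransmits it until it is acknowledged, so the data is not sent blindly over the air. A hypothetical model of that flow control, not the TI API; the queue capacity of 5 is an assumption standing in for MAX_NUM_PDU:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TX_QUEUE_CAP 5u /* assumption: stands in for MAX_NUM_PDU = 5 */

typedef struct {
    uint32_t queued;    /* Write Commands waiting for a connection event */
    uint32_t delivered; /* Write Commands acknowledged by the link layer */
} tx_queue_t;

/* Try to hand one Write Command to the (modelled) stack.
 * Returns false when no buffer is free; the caller should retry later. */
static bool tx_queue_push(tx_queue_t *q)
{
    assert(q != NULL);
    if (q->queued >= TX_QUEUE_CAP) {
        return false;
    }
    q->queued++;
    return true;
}

/* Model one connection event that can carry up to max_per_event packets.
 * Assumes every packet is acknowledged on its first transmission. */
static void tx_queue_connection_event(tx_queue_t *q, uint32_t max_per_event)
{
    assert(q != NULL);
    uint32_t sent = q->queued < max_per_event ? q->queued : max_per_event;
    q->queued -= sent;
    q->delivered += sent;
}
```

Pushing a sixth command before a connection event fails; after one event with room for five packets, all five are delivered and the queue accepts new data again.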

What I want to know is how to increase the data rate by sending multiple packets within one connection interval, without changing the interval and while still getting confirmation of each successful write.
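
One common pattern for keeping confirmation while sending several packets per interval, offered here as a hypothetical sketch rather than anything the TI stack provides: send the data as Write Commands that each carry a sequence number, and let the peer periodically report (for example in a notification) the sequence number below which it has received everything. The sender then limits how many chunks may be unconfirmed at once. The window size, names and message format are all assumptions:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define WINDOW_SIZE 5u /* hypothetical: chunks allowed in flight at once */

typedef struct {
    uint16_t next_seq;  /* sequence number of the next chunk to send */
    uint16_t acked_seq; /* every chunk below this was confirmed by the peer */
} sender_t;

/* True if another chunk may be sent without waiting for a confirmation. */
static bool sender_can_send(const sender_t *s)
{
    assert(s != NULL);
    return (uint16_t)(s->next_seq - s->acked_seq) < WINDOW_SIZE;
}

/* Reserve the sequence number for the next chunk; the caller would put it
 * in the chunk's header and send the chunk as a Write Command. */
static uint16_t sender_take_seq(sender_t *s)
{
    assert(sender_can_send(s));
    return s->next_seq++;
}

/* Handle a confirmation meaning "I have received everything below ack".
 * Stale or out-of-range values are ignored (modulo-2^16 arithmetic). */
static void sender_on_ack(sender_t *s, uint16_t ack)
{
    assert(s != NULL);
    uint16_t in_flight = (uint16_t)(s->next_seq - s->acked_seq);
    uint16_t advance = (uint16_t)(ack - s->acked_seq);
    if (advance <= in_flight) {
        s->acked_seq = ack;
    }
}
```

With a window of 5, the sender can put five chunks into the same connection event and only stalls if the peer's confirmation has not arrived by the time the window is full.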