Hello.

I am developing a voltage sensor that uses the Sensor Controller to continuously read the ADC, average the readings, and push the averaged values into a FIFO. The main MCU code then periodically wakes up the TI-RTOS message loop to process the Sensor Controller's FIFO data and update an OLED display with the measured data. I also push the sampled data out to our data analysis app via BLE.

My problem is that when the MCU wakes up to process the data, the transition from standby mode to active mode causes the ADC data to be skewed by one or two LSBs. I’m assuming that this is caused by the newly turned on power domains affecting the ADC’s Vref.

I tried mitigating this by preventing the power domain switch via Power_setConstraint(PowerCC26XX_SB_DISALLOW), but it only reduced the problem by about half.

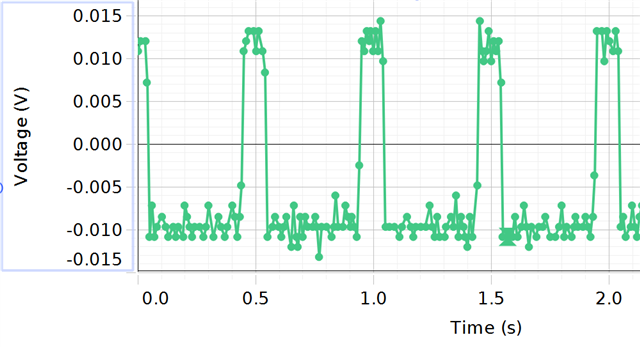

Here is a graph of the data I am seeing:

The ADC is sampling every 10 µs, but I am averaging every 1000 ADC reads, so each data point is 10 ms apart. I process the data and update the display every 500 ms, and the processing takes ~100 ms. You can see that during that 100 ms period, the ADC values are shifted up a bit. Note that I multiply the ADC sum by 16 before dividing by 1000, which makes the averaged reading look like it came from a 16-bit ADC instead of the actual 12-bit ADC, so the actual error is one or two ADC LSBs and not the 20-ish counts you see on the graph.
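The scaling described above can be sketched roughly as follows in plain C. This is only an illustration of the arithmetic, not the actual Sensor Controller task code; the names `scaled_average`, `SAMPLES_PER_POINT`, and `SCALE_12_TO_16` are hypothetical.

```c
#include <stdint.h>

#define SAMPLES_PER_POINT 1000u /* ADC reads averaged into one data point (assumed name) */
#define SCALE_12_TO_16    16u   /* 2^(16-12): stretches 12-bit codes onto a 16-bit range */

/* Sum a block of raw 12-bit ADC codes, multiply the sum by 16, then divide
 * by the sample count, so the result looks like a 16-bit reading. */
static uint16_t scaled_average(const uint16_t *samples, uint32_t count)
{
    uint32_t sum = 0;

    if (count == 0u) {
        return 0u; /* avoid dividing by zero on an empty block */
    }
    for (uint32_t i = 0; i < count; i++) {
        sum += samples[i]; /* worst case 4095 * 1000 = 4,095,000 */
    }
    /* worst case 4,095,000 * 16 = 65,520,000, still well inside 32 bits */
    return (uint16_t)((sum * SCALE_12_TO_16) / count);
}
```

With this scaling, a shift of one raw ADC LSB across a whole block moves the plotted value by 16 counts and two LSBs move it by 32, which is consistent with the 20-ish count step on the graph being one or two real LSBs.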

Do you have any suggestions on how to prevent this from happening?

Thank you

Scott