Hi guys,

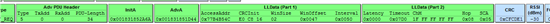

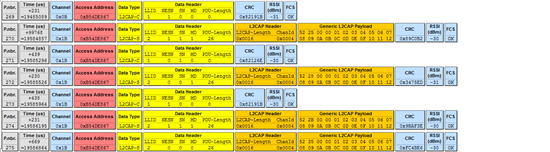

I'm writing four characteristics, 19 bytes each, from a central device to a peripheral, and I changed the connection interval parameters to increase the throughput. I set both the minimum and the maximum connection interval to the lowest allowed value (7.5 ms).

As I understand it, the connection interval is the time between two consecutive connection events. So I'm trying to measure it with the packet sniffer by checking the time difference between two consecutive channels, each of which corresponds to one connection event. However, the value I get is much larger than the one I set in the code. Please have a look at the figures above: channel 0x0B of this connection event takes around 102 ms.

I thought it should be around 7.5 ms. What am I missing here?
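To make the arithmetic behind this measurement explicit, here is a minimal Python sketch, assuming the sniffer timestamps are read off in milliseconds, one per connection event (the first packet seen on each new channel). The timestamps in it are hypothetical, not taken from the figures above, and the helper names `ms_to_units` and `event_intervals` are made up for illustration. It relies on the fact that BLE connection interval parameters are expressed in units of 1.25 ms, with a valid range of 6 to 3200 units (7.5 ms to 4 s).

```python
# Sketch: checking a measured BLE connection interval against the requested value.
# Assumption: the timestamps below are hypothetical sniffer readings (ms), one per
# connection event; they are NOT from the screenshots in this post.

UNIT_MS = 1.25  # BLE connection interval parameters use 1.25 ms units


def ms_to_units(interval_ms: float) -> int:
    """Convert an interval in ms to 1.25 ms units (hypothetical helper)."""
    units = round(interval_ms / UNIT_MS)
    if not 6 <= units <= 3200:  # valid range: 7.5 ms .. 4000 ms
        raise ValueError("connection interval must be between 7.5 ms and 4000 ms")
    return units


def event_intervals(timestamps_ms):
    """Time between consecutive connection events (hypothetical helper)."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


requested_ms = 7.5
print(ms_to_units(requested_ms))  # 6

# Hypothetical timestamps (ms) for the start of consecutive connection events
sniffed = [0.0, 7.5, 15.0, 22.5]
print(event_intervals(sniffed))  # [7.5, 7.5, 7.5]
```

If the measured gaps come out as roughly 102 ms instead of 7.5 ms, the sniffer is seeing a different interval from the one requested in the code.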

Thanks a lot