Hello,

We've been tracking an issue in our peripheral project where the BLE connection is terminated by the central due to a MIC error. I was hoping that porting from SDK 1.6 to 2.2 for the 2642 would resolve the issue, but it still seems to be present.

The error is reproducible, but it seems to occur somewhat randomly. It occurs most frequently during OTA updates, when our BLE connection is under its heaviest load. That said, I've also seen it occur during normal run-time message exchanges.

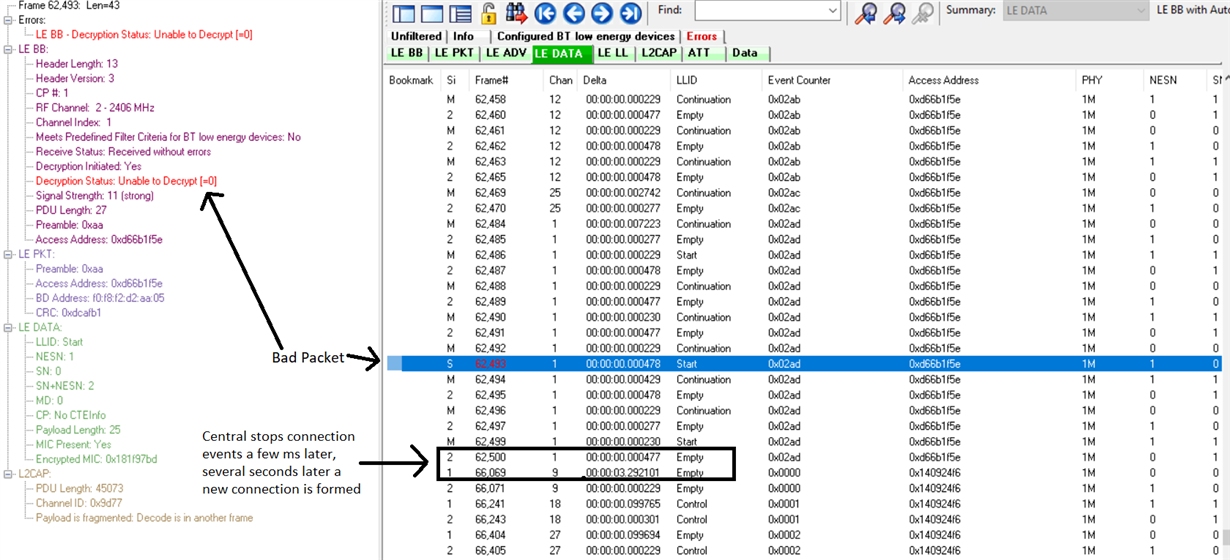

I'm not sure there's much I can do to debug this issue, since the encryption is handled by the stack/controller portion of the system. I'm highly confident the problem is in the peripheral, as I've tested it against both our production-intent central (Qualcomm) and our peripheral hardware (TI) acting as the central. In both cases, the central reports error code 0x3D (connection terminated due to MIC failure) as the reason for the disconnection. I've also captured the error on a BLE sniffer, which likewise points to the issue coming from the peripheral. Here's a screen cap from the sniffer:

Any ideas on how we can troubleshoot this? We can limp along for development with link encryption turned off, but it's a must for production.

Thanks,

Josh