Other Parts Discussed in Thread: SYSBIOS,

Tool/software: TI-RTOS

I did some searching and can't find much in the way of a good description on how to implement what I want to do here, so it seemed worth asking in the community.

I'm trying to determine how to go about using the error module to catch spurious, unexpected, and difficult to diagnose hard faults/crashes and critical errors. My goal is to be able to extract error information (file, line, error type, etc) and store this to NVS so that I may do a soft reset of the system and allow me to extract the specific error details later.

I have created a high priority new task, ErrorHandlerTask, which pends on a semaphore so will sit idle until an error occurs.

In my release.cfg I have set my error policy as follows:

Error.policyFxn = Error.policyDefault; Error.raiseHook = "&myErrorFxn";

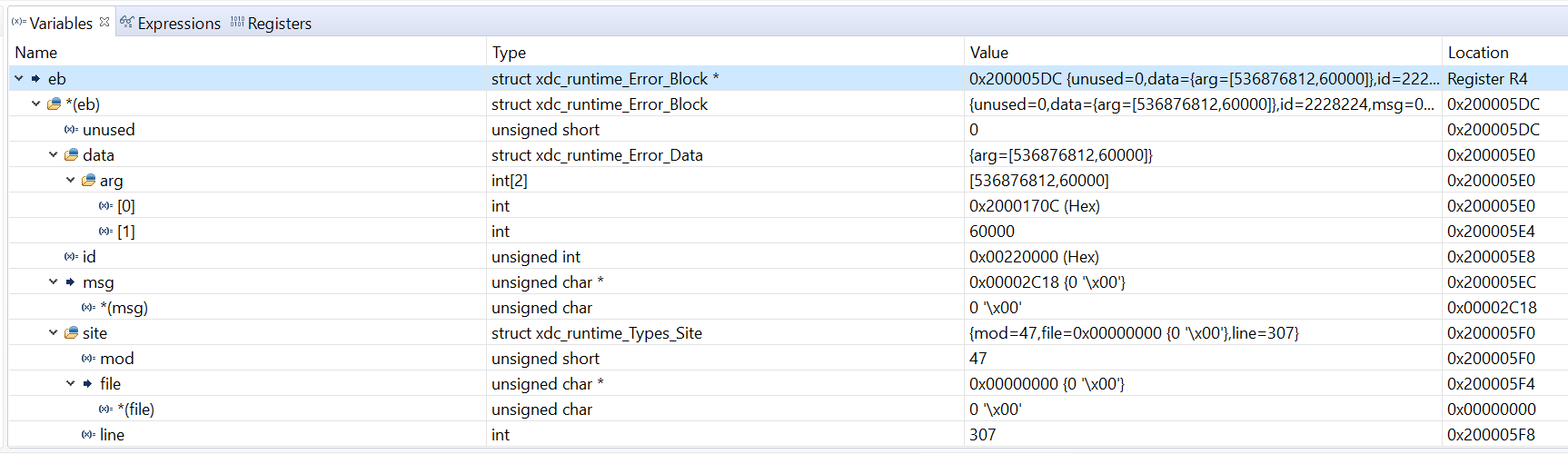

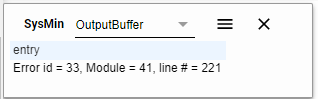

Within the same file as this task I have the myErrorFxn(Error_Block *eb). In this function I hope to extract the error information to some file global variables/structure and the post the semaphore to allow the task to process and store the details.

What I'm not sure I'm clear on is how the Error Module actually works. I've read a lot of the documentation, but it's all a bit convoluted and not very concise. As far as my understanding goes, with a the policyDefault set and my raiseHook defined, any error will trigger myErrorFxn and deliver a pointer to the Error Block. From here I can use the various error functions to extract information such as the file, line number, and error arguments. I don't use Error_Blocks in any of my functions, so I assume this is generated by the module when something like a hard fault occurs.

So my first questions:

- What type of errors will result in the error raiseHook being called?

- What information do the error arguments Arg0 and Arg1 provide?

I can find plenty of mention of them, but not much in the way of description of what they represent.

Once I have figured out how to extract and quantify the information from the Error_Block I will need to test it. So my next question:

- What methods can I use to generate a variety of genuine errors/faults which will allow me to test the functionality of this error handler?

i.e. What commonly will break TI RTOS?

I've not doubt I will have some follow-up questions, but for now I hope these will get me moving in the right direction.