Other Parts Discussed in Thread: DP83869HM, DP83869EVM, DP83869

Hi all,

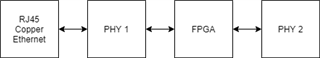

I've been testing 1 Gbps copper Ethernet with an Ixia Novus One as the traffic generator. The DP83867E PHY is running in reverse loopback mode with auto-negotiation enabled, and it resolves to master automatically.

I see dropped packets when running Random Packet or Quad Gaussian tests, even at 60% utilization. With fixed-size packets there are no drops until higher utilization (at 100% utilization, 0.005% of 64-byte packets are dropped).
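To put the 0.005% figure in absolute terms, here is a rough calculation of the frame rate and the implied drops per second. It assumes the 64-byte size includes the FCS and that each frame also costs 8 bytes of preamble/SFD plus the 12-byte minimum inter-frame gap on the wire; the function names are only for illustration.

```python
LINE_RATE_BPS = 1_000_000_000   # 1 Gbps line rate
OVERHEAD_BYTES = 8 + 12         # preamble + SFD, plus minimum inter-frame gap (assumed)

def frames_per_second(frame_bytes, utilization=1.0):
    """Frames per second on the wire for a given frame size and utilization."""
    bits_on_wire = (frame_bytes + OVERHEAD_BYTES) * 8
    return LINE_RATE_BPS * utilization / bits_on_wire

def dropped_per_second(frame_bytes, utilization, drop_fraction):
    """Frames lost per second for a given drop fraction (0.005% -> 0.00005)."""
    return frames_per_second(frame_bytes, utilization) * drop_fraction

if __name__ == "__main__":
    fps = frames_per_second(64)
    print(f"64-byte frames at 100%: {fps:.0f} frames/s")
    print(f"drops at 0.005%: {dropped_per_second(64, 1.0, 0.00005):.1f} frames/s")
```

Under those assumptions, 0.005% at 100% utilization works out to roughly 74 dropped frames per second. Expressed as a ratio, 0.005% is 50 ppm.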

Inserting an Ethernet switch between the PHY and the traffic generator significantly improves performance. Manually configuring the PHY as slave instead of master improves performance slightly.
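For anyone reproducing the manual slave setting, below is a minimal sketch of the register arithmetic using the IEEE 802.3 standard 1000BASE-T control register (address 0x09: bit 12 enables manual master/slave configuration, bit 11 selects master when set). The helper names are hypothetical, the actual MDIO read/write calls depend on the platform and are not shown, and the bit layout should be checked against the PHY datasheet before use.

```python
REG_BMCR = 0x00           # basic mode control register (IEEE 802.3 clause 22)
REG_1000T_CTRL = 0x09     # 1000BASE-T master/slave control register

MS_MANUAL_ENABLE = 1 << 12   # 1 = use manual master/slave configuration
MS_MANUAL_MASTER = 1 << 11   # 1 = master, 0 = slave (only used when bit 12 is set)
BMCR_RESTART_ANEG = 1 << 9   # 1 = restart auto-negotiation

def force_master_slave(reg9_value, master):
    """Return the new register 0x09 value with the role forced manually."""
    value = reg9_value | MS_MANUAL_ENABLE
    if master:
        value |= MS_MANUAL_MASTER
    else:
        value &= ~MS_MANUAL_MASTER
    return value & 0xFFFF

def restart_autoneg(bmcr_value):
    """Return the BMCR value with the restart auto-negotiation bit set."""
    return (bmcr_value | BMCR_RESTART_ANEG) & 0xFFFF

if __name__ == "__main__":
    current = 0x0300  # example starting value: 1000BASE-T full/half duplex advertised
    print(hex(force_master_slave(current, master=False)))
```

The forced role is only applied at the next negotiation, so the new register 0x09 value would normally be followed by a restart of auto-negotiation through register 0x00.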

I have seen this problem on several iterations of custom platforms using the DP83867E, several iterations of custom platforms using the DP83869HM, and on the DP83869EVM Development Board.

I have run the same tests with a Marvell 88E1111 PHY, and Quad Gaussian passes at 95% utilization with no drops.

Thanks in advance,

George