Part Number: DP83867IR

Other Parts Discussed in Thread: TMDX654IDKEVM, TMDS64EVM


Hi,

I'm having trouble establishing a link with the DP83867IR PHY. I believe the issue is already documented in the latest revision (Rev. C) of the DP83867 troubleshooting guide application note.

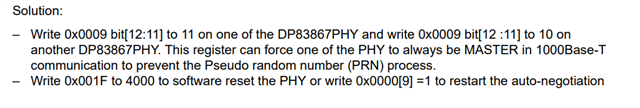

My case seems to be the one described in section 3.5, third bullet point ("Read register 0x0005[15] and If 0x0005 bit[15] = 1"). I say "seems" because I have not yet managed to read register 0x0005 as suggested, but the behavior looks the same. I have two different boards that use this PHY, and both have problems connecting to the network through it.

I'm working on implementing the proposed workaround in the driver used by my RTOS, but I would like more details on the reported behavior. The application note says that the "PRN is not exactly random and if both DP83867 start auto-negotiation at the same time there is a possibility both DP83867 send out the exact same random seed (PRN) and result in dead lock." This matches my setup closely, since the problem occurs more often when the boards are powered on simultaneously. However, it is not clear what "at the same time" means. Is there a reference time window? How much delay is needed to avoid this issue?

Thanks.

Andrea