
Hi,

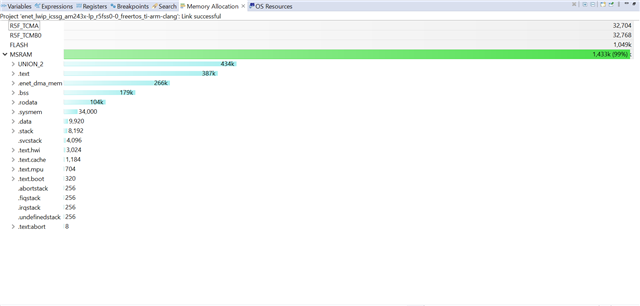

SDK: AM243x MCU+ SDK 08.05.00, on the AM243x GP EVM

Example: Enet Lwip ICSSG Example

I am testing the above example to verify Gigabit Ethernet functionality. The EVM is connected to a PC via the Gigabit Ethernet port.

Gigabit support is enabled in SysConfig:

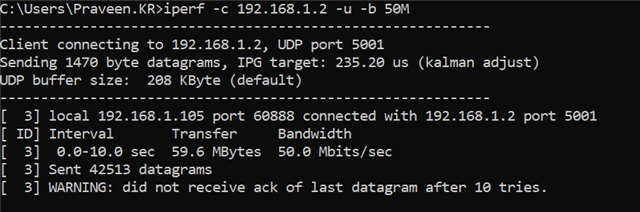

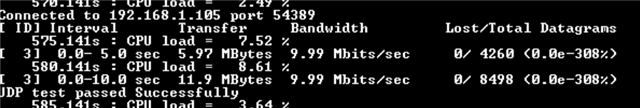

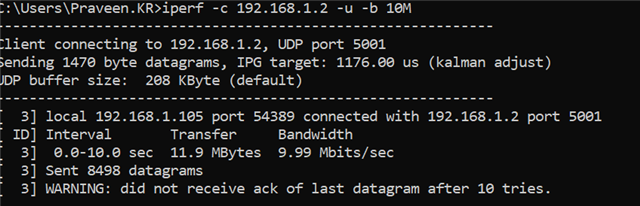

iperf results:

Bandwidth : 56.9 Mbits/sec
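
To put that figure in perspective, here is a minimal sketch that expresses the measured bandwidth as a percentage of a nominal link rate. The helper name `link_utilization` and the nominal rates of 1000 and 100 Mbit/s are illustrative assumptions of mine; they are not taken from the SDK or from the iperf output.

```python
def link_utilization(measured_mbps: float, link_mbps: float) -> float:
    """Return measured throughput as a percentage of the nominal link rate."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    return 100.0 * measured_mbps / link_mbps


# iperf result reported above, in Mbit/s.
MEASURED_MBPS = 56.9

# Nominal link rates to compare against (assumed values, for illustration only).
for nominal in (1000.0, 100.0):
    pct = link_utilization(MEASURED_MBPS, nominal)
    print(f"{MEASURED_MBPS} Mbit/s is {pct:.1f}% of a {nominal:.0f} Mbit/s link")
```

So the measured throughput is under 6% of the nominal gigabit rate, and it is also below what a 100 Mbit/s link could carry.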

How can I achieve gigabit speeds using the above example?