Part Number: AM2634

Hello,

I am working on a project with multiple cores configured and I am using the Spinlock module of the device to achieve managed resource access.

I use a single spinlock register like a mutex, to control the execution of a critical section of code which all four cores need execute but not parallelly. The code in all four cores is identical and is this:

#if(CORE_ID == CSL_CORE_ID_R5FSS0_0)

/* Unlock the spinlock register to reset it */

Spinlock_unlock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

#endif

for (;;)

{

int32_t status = Spinlock_lock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

if (status == SPINLOCK_LOCK_STATUS_FREE)

{

break;

}

}

/* Critical section start */

/* This function shall be used to init driver pre-init level elements*/

sysm_init_zero();

/* Critical section end */

Spinlock_unlock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

The problem is that when I put a breakpoint at the break statement, in each of the four cores I see multiple cores hitting that breakpoint.

My test sequence is:

- Reset all cores

- Reload the program to all cores

- Resume on all cores with the breakpoint enabled in each of them

In most occasions, more than one breakpoints are hit, meaning that more than one cores have seemingly succeeded in reading a SPINLOCK_LOCK_STATUS_FREE status in the same Lock Register (INIT_ZERO_MUTEX which is defined as 0).

The only configuration that seems to perform more predictably is the one below, where there is an additional Spinlock_lock call in the beginning of this sequence to ensure that the spinlock is locked before any core get to the busy waiting stage. I have tested this multiple times and it always, so far, follows the predicted pattern of hitting the break statement breakpoint in the various cores in sequence and only after the previous core called Spinlock_unlock.

Spinlock_lock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

#if(CORE_ID == CSL_CORE_ID_R5FSS0_0)

/* Unlock the spinlock register to reset it */

Spinlock_unlock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

#endif

for (;;)

{

int32_t status = Spinlock_lock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

if (status == SPINLOCK_LOCK_STATUS_FREE)

{

break;

}

}

/* Critical section start */

/* This function shall be used to init driver pre-init level elements*/

sysm_init_zero();

/* Critical section end */

Spinlock_unlock(CSL_SPINLOCK0_BASE, INIT_ZERO_MUTEX);

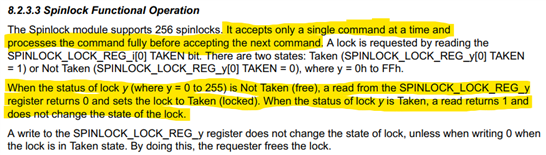

The design for the above mutual exclusion mechanism has been based on these statements in the TRM:

I interpreted this statement as:

Only a single resource (one of the cores in this particular case) can read the same lock register at any given time and then in any sequential read the register will return TAKEN (1).

However, what I observe indicates either of these scenarios:

- Two or more cores read the same lock register simultaneously and return a status of 0 (FREE), or

- A core is reading the lock register but the lock register is not set to one before another core gets access to it and therefore it is possible that two cores can read a status of FREE without writing 0 to the register.

Questions:

- Is my interpretation of how the Spinlock module works correct?

- Is there anything else that I might be missing about this module and/or my design?

- Is my improved configuration more correct for some reason and can it be trusted that it will always behave according to the design?

Thanks,

Manos