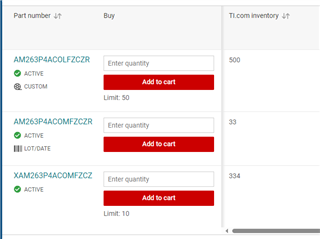

Part Number: MCU-PLUS-SDK-AM263X

Other Parts Discussed in Thread: UNIFLASH, AM263P4, AM2634


Hi

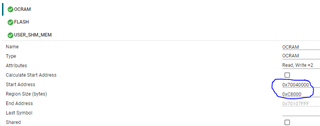

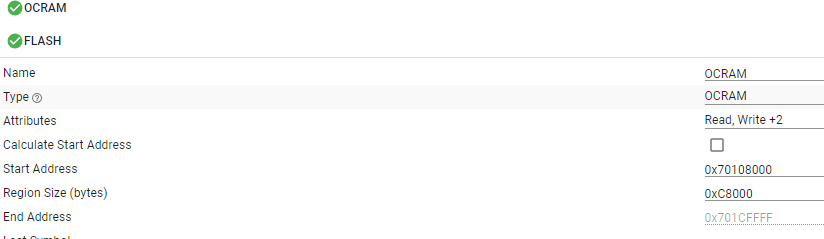

How should the OCRAM memory regions be defined for the lockstep case?

From the documentation, I understood that the TCM size doubles and that, if there is no image for the second CPU, the QSPI SBL switches to lockstep mode automatically.

In this case, do both CPUs in lockstep use the same OCRAM region?

I checked the example that demonstrates the lockstep error mechanism (CCM), but the OCRAM is not shared there and the targetconfigs seem to be incomplete (at least, no secondary or other flags are set anywhere, and all four cores are active).

Is there a better example or an application note that shows the RAM regions when the CPUs are running in lockstep?

External memory is also a concern, since we might be forced to use the GPMC.

Best regards,

Barna Cs.