Other Parts Discussed in Thread: MSPM0G3507, UNIFLASH, SYSCONFIG


Hi TI Expert,

I am working on a project based on uart_echo_LP_MSPM0G3507_freertos_ticlang, but I have changed the target device to MSPM0G1507 and updated the linker command file to mspm0g1507.cmd.

Project Details:

- Device: MSPM0G1507

- SDK Version: 2.1.0.03

Objectives:

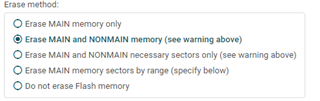

- Change the BSL UART baud rate to 115200 (using pins PA10 and PA11).

- Disable SWD in production while ensuring the BSL update function remains available.

My Current Understanding of BSL:

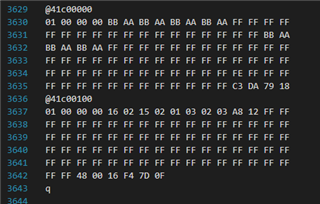

- My project (using the default mspm0g1507.cmd) can already be updated using the BSL_GUI_EXE tool.

- The internal boot ROM runs first and reads the BCR and BSL configuration settings, both of which are user-configurable. It may also reference .vtable, though I am not sure about this.

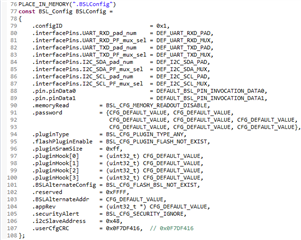

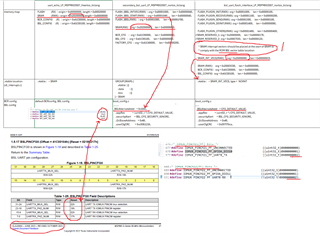

- BSL-related example projects contain boot_config.c, which defines the BCR and BSL configurations, and each project uses a different memory layout in its linker file (see the attached figures).

- The BCR configuration has an option to enable or disable SWD.

- The internal boot ROM determines whether to execute the built-in BSL or use a BSL plugin.

- The BSL plugin has an Init function, which can be used to configure the UART baud rate.

- The BSL configuration allows specifying a secondary bootloader, which can bypass the built-in BSL.

Questions:

- Does the boot ROM run completely independently of the application by default? If not, does it rely on .vtable? If it does, how does it find the vector table, given that different projects place .vtable at different locations?

- Why do different projects have different SRAM start addresses (0x20200000 vs. 0x20000000)? What determines this?

- To change the BSL UART baud rate, do I need to use the plugin Init function? If so, do I also need to provide the Send, Receive, and Deinit functions?

- How should I modify my linker command file (.cmd) to incorporate the above requirements?

I would greatly appreciate your insights on these questions.

Best regards,