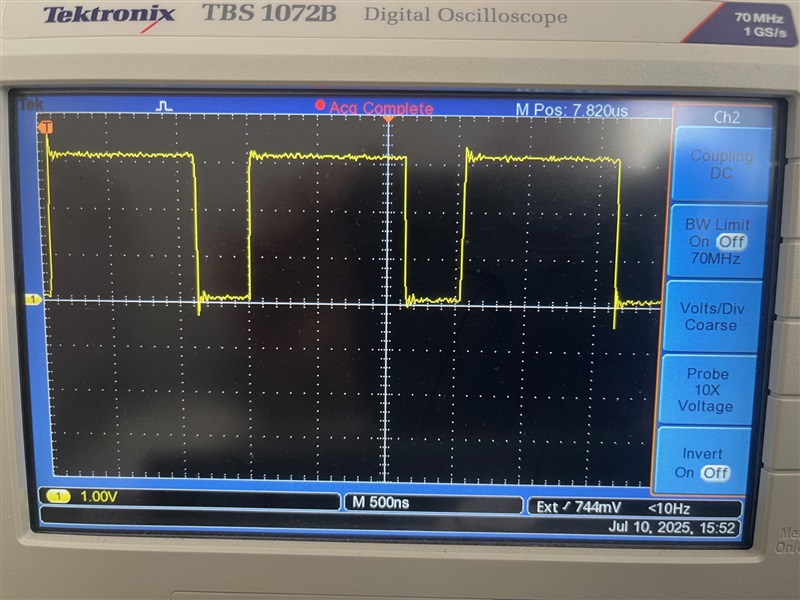

I have simultaneous ADC conversions working correctly, triggered by the positive edge of a GPIO. All is good.

I write to pin CCK and this starts the conversion.

I want to know when the conversion has finished before I initiate the next one, but this just hangs:

```c
while(1) {

}
```

Any clues, please?
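For reference, below is a minimal, host-runnable sketch of one common pattern for waiting on a conversion-complete signal before firing the next trigger. It assumes the ADC can raise a "conversion complete" interrupt. The names `g_adc_done`, `adc_conversion_complete_isr`, `trigger_conversion`, `wait_for_conversion` and `run_conversions` are hypothetical, not part of any vendor driver library, and the direct ISR call inside `trigger_conversion` only simulates the hardware. Some possible reasons such a wait hangs: the flag is not `volatile`, the flag is cleared *after* triggering (so a fast conversion is missed), the ADC interrupt is not enabled at the peripheral or interrupt-controller level, or the trigger pin is never driven low again, so no new rising edge occurs.

```c
#include <stdbool.h>
#include <stdint.h>

/* Set by the (hypothetical) ADC conversion-complete ISR.
 * 'volatile' matters: without it the compiler may read the flag once,
 * keep it in a register, and spin forever. */
static volatile bool g_adc_done = false;

/* Hypothetical ISR. On real hardware this would be the ADC
 * "conversion complete" vector, and it would also read the results
 * and clear the peripheral's interrupt flag. */
void adc_conversion_complete_isr(void)
{
    g_adc_done = true;
}

/* Hypothetical stand-in for toggling the trigger pin (CCK). */
static void trigger_conversion(void)
{
    g_adc_done = false;            /* clear BEFORE triggering, never after */
    /* Real code: drive CCK high, then low again, so that the next
     * conversion sees a fresh rising edge (pin-write calls omitted). */
    adc_conversion_complete_isr(); /* host-only simulation of the interrupt */
}

/* Spin on the flag with an upper bound, so a missing interrupt shows up
 * as a timeout instead of a hang. Returns true if the conversion finished. */
static bool wait_for_conversion(uint32_t max_spins)
{
    while (!g_adc_done) {
        if (max_spins-- == 0u) {
            return false;
        }
    }
    return true;
}

/* Trigger 'count' conversions back to back, waiting for each to finish.
 * Returns the number that completed before any timeout. */
int run_conversions(int count, uint32_t max_spins)
{
    int completed = 0;
    for (int i = 0; i < count; ++i) {
        trigger_conversion();
        if (!wait_for_conversion(max_spins)) {
            break; /* interrupt never arrived: check the ADC/IRQ setup */
        }
        completed++;
    }
    return completed;
}
```

The bounded spin is a design choice for debugging: if `wait_for_conversion` returns `false` on the target, the interrupt is not reaching the flag, which narrows the search to the interrupt configuration rather than the loop itself.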