Hello TI Experts,

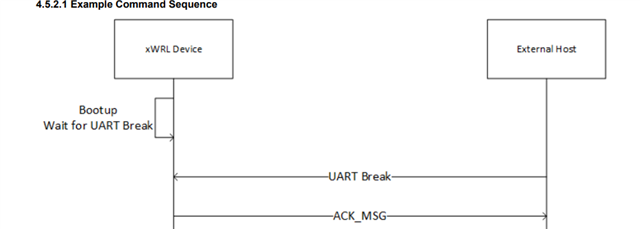

I am working on the G3507 board and need to generate a UART BREAK signal.

As I understand it, the UART TX line normally idles high (3.3 V), so to generate a break I need to drive the TX line low for a set duration (around 50 ms).

My intended approach is:

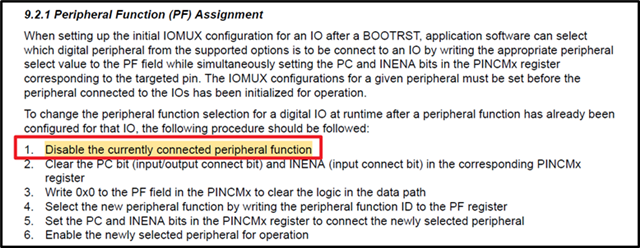

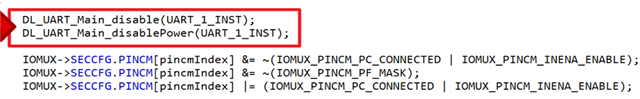

- Disable the UART peripheral.
- Reconfigure the UART TX pin as a GPIO output and drive it low.
- Maintain the low state for ~50 ms.
- Reconfigure the pin back to its default UART functionality and re-enable UART.
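
The steps above can be sketched roughly as follows. This is only a hardware-agnostic sketch of the ordering, not TI driver code: `break_hal_t`, `uart_send_break`, and every hook name are hypothetical placeholders that would need to be mapped to the actual UART and pin-mux calls for the device. The wait for the transmitter to go idle is an added assumption (so a byte in flight is not cut short), and the `sim_*` functions exist only so the sequence can be exercised on a PC.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical board hooks (NOT TI API names). On real hardware each one
 * would wrap the matching vendor driver call for the UART and the pin mux. */
typedef struct {
    bool (*uart_tx_busy)(void);     /* true while a frame is still shifting out */
    void (*uart_disable)(void);     /* step 1 */
    void (*tx_pin_gpio_low)(void);  /* step 2: mux TX pin to GPIO, drive low */
    void (*delay_ms)(uint32_t ms);  /* step 3: blocking delay */
    void (*tx_pin_uart)(void);      /* step 4a: mux TX pin back to the UART */
    void (*uart_enable)(void);      /* step 4b */
} break_hal_t;

/* Holds TX low for duration_ms, then restores UART operation.
 * Returns false (and does nothing) if duration_ms is zero. */
bool uart_send_break(const break_hal_t *hal, uint32_t duration_ms)
{
    assert(hal != NULL);
    if (duration_ms == 0u) {
        return false;
    }
    /* Assumption, not in the original steps: let any frame in flight
     * finish before the peripheral is disabled. */
    while (hal->uart_tx_busy()) {
    }
    hal->uart_disable();
    hal->tx_pin_gpio_low();
    hal->delay_ms(duration_ms);
    hal->tx_pin_uart();
    hal->uart_enable();
    return true;
}

/* Host-side simulation: records the order of the calls, no hardware needed. */
enum { STEP_DISABLE = 1, STEP_PIN_LOW, STEP_DELAY, STEP_PIN_UART, STEP_ENABLE };

static int sim_steps[8];
static size_t sim_count;
static uint32_t sim_delay_ms;

static void sim_record(int step)
{
    if (sim_count < sizeof sim_steps / sizeof sim_steps[0]) {
        sim_steps[sim_count++] = step;
    }
}

static bool sim_busy(void)         { return false; }
static void sim_disable(void)      { sim_record(STEP_DISABLE); }
static void sim_pin_low(void)      { sim_record(STEP_PIN_LOW); }
static void sim_delay(uint32_t ms) { sim_delay_ms = ms; sim_record(STEP_DELAY); }
static void sim_pin_uart(void)     { sim_record(STEP_PIN_UART); }
static void sim_enable(void)       { sim_record(STEP_ENABLE); }

static const break_hal_t sim_hal = {
    sim_busy, sim_disable, sim_pin_low, sim_delay, sim_pin_uart, sim_enable
};
```

Calling `uart_send_break(&sim_hal, 50u)` runs the five hooks in the order listed above with a 50 ms hold. On the target, the same function would be given a `break_hal_t` whose hooks call the real driver functions.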

Could you please share example code or the recommended method to achieve this?

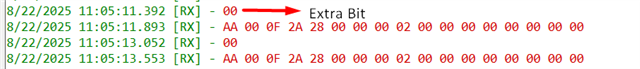

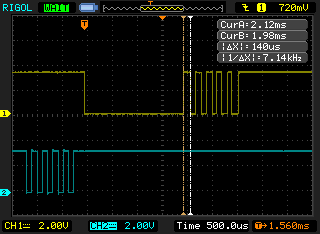

I have attached images showing where I need this; please refer to them.

Thanks & Regards,

Amara Rakesh