Part Number: DK-TM4C129X

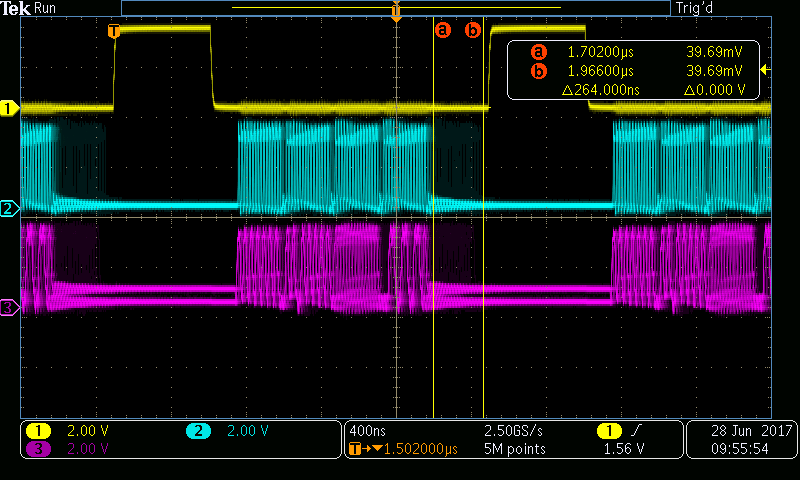

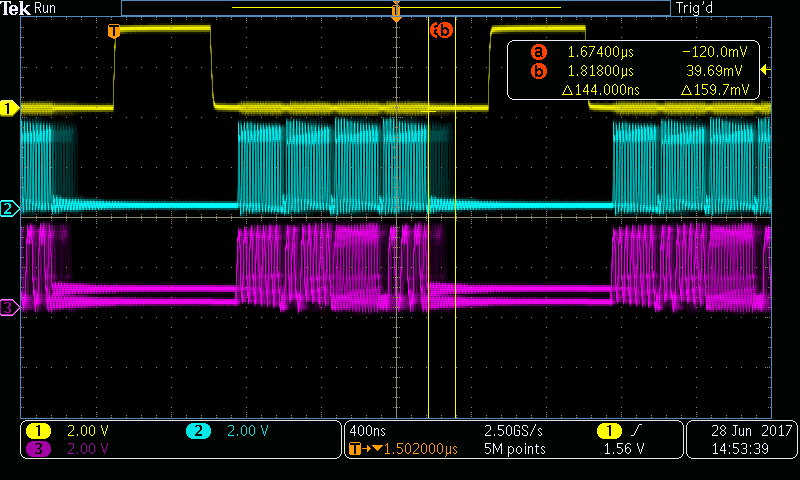

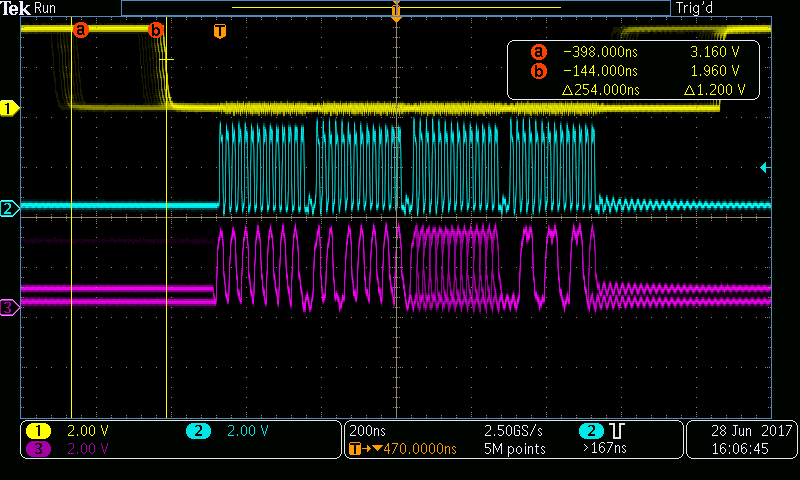

I have an application that keeps a set of scatter-gather µDMA channels continuously busy at about 30 Mbit/s. The µDMA transfers appear to work properly. When I enable transmission of that 30 Mbit/s over Ethernet through the TI-RTOS network stack, the µDMA timing jitter increases by about 400 ns. This is tolerable in my design but not desirable, since it limits performance. To minimize the jitter caused by Ethernet activity, I set:

HWREG(EMAC0_BASE+EMAC_O_DMABUSMOD) = BFM(HWREG(EMAC0_BASE+EMAC_O_DMABUSMOD), 1, EMAC_DMABUSMOD_PBL_M);

HWREG(EMAC0_BASE+EMAC_O_DMABUSMOD) |= EMAC_DMABUSMOD_FB;

`BFM` is a bit-field-modify helper; here it writes 1 into the PBL (programmable burst length) field. The second line sets FB (fixed burst).

Even with these settings, I still see the 400 ns of jitter. I haven't yet looked at the stack source to see how these fields are handled; perhaps the stack rewrites them frequently, nullifying my effort.

What is the bus priority of the EMAC's DMA relative to the µDMA? Can the EMAC be given a lower priority than the µDMA?