-

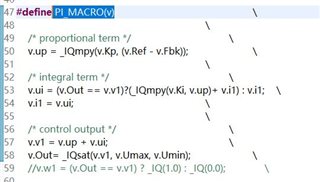

As for the number of cycles the PI calculation takes, the official routine is:

-

The measured execution time is:

- As shown in the figure, a single PI calculation takes 50 cycles in total. On the FPU, the saturation function alone accounts for about 40 of those cycles. How can that be?
