Hi Team,

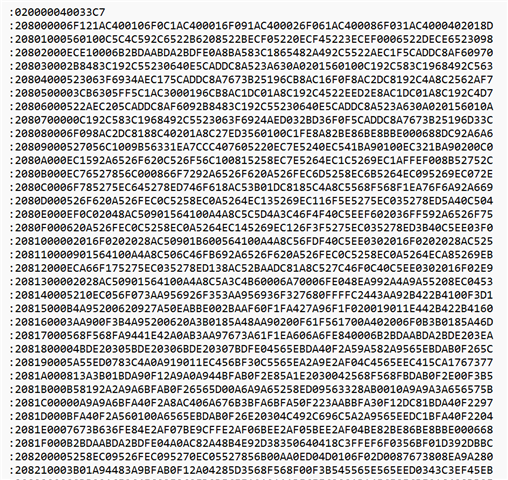

1) When CCS generates the hex file, all of the program code is packed together contiguously (see the hex file in the image). How does the processor distinguish which of these words are 16-bit instructions and which are 32-bit instructions?

2) When stepping in CCS, the PC advances one instruction at a time. How does the processor determine the width of the instruction being executed? Is there a flag bit?

Could you help check this case? Thanks.

Best Regards,

Cherry