Other Parts Discussed in Thread: MSP430FR6041, MSP430WARE, MSP430FR6043, MSP430FR5043, MSP430FR5041

Hey!

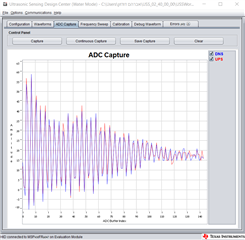

I am trying to get good results for low-flow measurement.

So I started the measurement with no water flowing in the pipe at all. These are the results:

1) Why didn't I get a uniform value of zero?

2) How do I reduce the spikes that appear in the "volume flow rate"? It should be at a lower resolution.