Other Parts Discussed in Thread: MSP-FET

Hello,

I want to test if my device is entering BSL mode correctly and if the sequence I use is correct in general.

I have added this piece of code in my application:

sc_stop(); // turn off the ADC

__disable_interrupt(); // disable interrupts

((void (*)())0x1000)(); // jump to BSL

Then I want to use the MSP-FET in I2C mode and try to access the BSL with the following script:

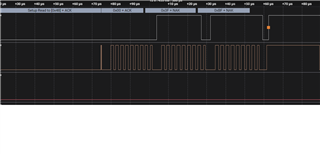

LOG

MODE FRxx I2C 100000 COM10

DELAY 2000

//gives wrong password to do mass erase

RX_PASSWORD pass32_wrong.txt

//

//add delay after giving wrong password

//because the device does not give

//any response after wrong password applied

//

DELAY 2000

RX_PASSWORD pass32_default.txt
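For reference, this is my understanding of the frame that `RX_PASSWORD` should put on the bus, in case it helps when checking a logic analyzer capture. It is only a minimal sketch and rests on assumptions that are not confirmed in this thread: the packet layout (header `0x80`, 16-bit little-endian length, command byte `0x11`, 32 password bytes, then a CRC-16/CCITT over the command and data, low byte first) is taken from my reading of the FRxx BSL user's guide, and the names `bsl_crc16` and `bsl_build_rx_password` are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BSL_HEADER          0x80u  /* assumed packet header byte */
#define BSL_CMD_RX_PASSWORD 0x11u  /* assumed RX Password command ID */
#define BSL_PASSWORD_LEN    32u    /* 32-byte password, as in pass32_*.txt */

/* CRC-16/CCITT: polynomial 0x1021, initial value 0xFFFF,
 * no bit reflection, no final XOR. */
uint16_t bsl_crc16(const uint8_t *data, size_t len)
{
    uint16_t crc = 0xFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)((uint16_t)data[i] << 8);
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 0x8000u)
                crc = (uint16_t)((crc << 1) ^ 0x1021u);
            else
                crc = (uint16_t)(crc << 1);
        }
    }
    return crc;
}

/* Build an RX Password packet into out[] and return its size in bytes.
 * The CRC covers only the core part (command byte + password). */
size_t bsl_build_rx_password(const uint8_t password[BSL_PASSWORD_LEN],
                             uint8_t *out, size_t out_size)
{
    const size_t core_len = 1u + BSL_PASSWORD_LEN;  /* command + password */
    const size_t total = 3u + core_len + 2u;        /* header, length, CRC */
    assert(out_size >= total);

    out[0] = BSL_HEADER;
    out[1] = (uint8_t)(core_len & 0xFFu);           /* length, low byte */
    out[2] = (uint8_t)(core_len >> 8);              /* length, high byte */
    out[3] = BSL_CMD_RX_PASSWORD;
    memcpy(&out[4], password, BSL_PASSWORD_LEN);

    const uint16_t crc = bsl_crc16(&out[3], core_len);
    out[4u + BSL_PASSWORD_LEN] = (uint8_t)(crc & 0xFFu);  /* CRC, low byte */
    out[5u + BSL_PASSWORD_LEN] = (uint8_t)(crc >> 8);     /* CRC, high byte */
    return total;
}
```

With a 32-byte password of all `0xFF` (which I assume is what `pass32_default.txt` contains), this produces a 38-byte frame starting with `80 21 00 11`. If the analyzer shows the address byte going out but no ACK at all, the problem would be before any of this payload matters.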

I connected the MSP-FET's backchannel UART pins to my device's I2C lines. I also connected ground and the target VCC.

I'm not getting an ACK on the I2C line.

Is my sequence correct, or am I doing something wrong?