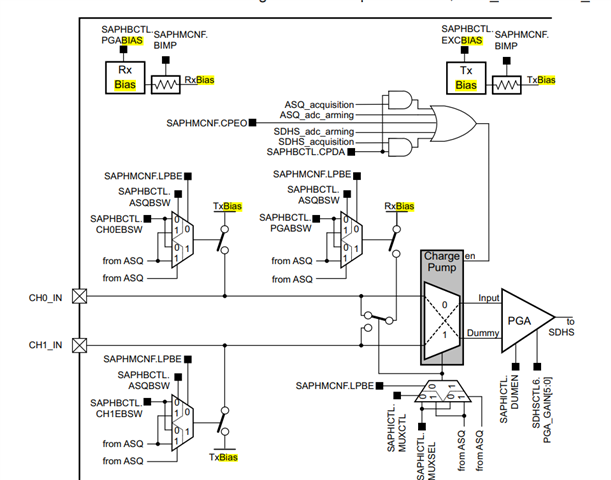

From the diagram, I would like to understand the importance of the Rx Bias and Tx Bias voltages.

- Why is biasing needed, especially when the PGA input is limited to a maximum of 800 mVpp?
- My understanding is that adding a bias might cause the input to exceed this 800 mVpp limit, depending on signal swing. Should I be concerned about this?
- Do I need to carefully consider both Rx and Tx bias settings, or are they internally isolated such that they don’t affect each other directly?

Could you please help clarify how biasing interacts with PGA input range and what precautions should be taken in setting these voltages to ensure the signal remains within allowed limits?
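
To make the question concrete, here is a minimal sketch of the arithmetic involved, separating the peak-to-peak swing from the absolute voltage level. Only the 800 mVpp figure comes from the post. The signal amplitude and the bias voltage are hypothetical placeholders, not datasheet values, and the helper names are made up for illustration.

```python
import math

def biased_sine(amplitude_v, bias_v, n=1000):
    """Return one cycle of a sine wave with the given peak amplitude, riding on a DC bias."""
    return [bias_v + amplitude_v * math.sin(2 * math.pi * k / n) for k in range(n)]

def peak_to_peak(samples):
    """Peak-to-peak swing: maximum sample minus minimum sample."""
    return max(samples) - min(samples)

PGA_LIMIT_VPP = 0.8   # 800 mVpp limit quoted in the question
amplitude_v = 0.4     # hypothetical: 0.4 V peak, i.e. 0.8 Vpp
bias_v = 0.9          # hypothetical bias voltage, NOT a datasheet value

unbiased = biased_sine(amplitude_v, 0.0)
biased = biased_sine(amplitude_v, bias_v)

# A DC bias shifts max and min by the same amount, so the swing is unchanged.
print("Vpp without bias:", peak_to_peak(unbiased))
print("Vpp with bias:   ", peak_to_peak(biased))

# What the bias does change is the absolute voltage range seen at the pin.
print("Absolute range with bias:", min(biased), "to", max(biased))
```

In this sketch the bias moves the absolute range of the signal but leaves the peak-to-peak value alone, so the two limits (swing versus absolute input voltage) would need to be checked separately against the datasheet.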