I already asked this on StackOverflow, but I think this is a better place to ask.

I'm more of a high-level software guy but have been working (experimenting!) on some embedded projects lately, so I'm sure there's something obvious I'm missing here. That said, I have spent over a week trying to debug this, and every 'MSP'-related link in Google is purple at this point...

I currently have an MSP430F5529 set up as an I2C slave device whose only responsibility, for now, is to receive packets from a master device. The master uses an industry-grade I2C implementation that has been heavily tested and ruled out as the source of my problem. I'm using Code Composer Studio as my IDE with the TI v15.12.3.LTS compiler. The master has 1k5 (1.5 kΩ) pull-ups on the I2C lines.

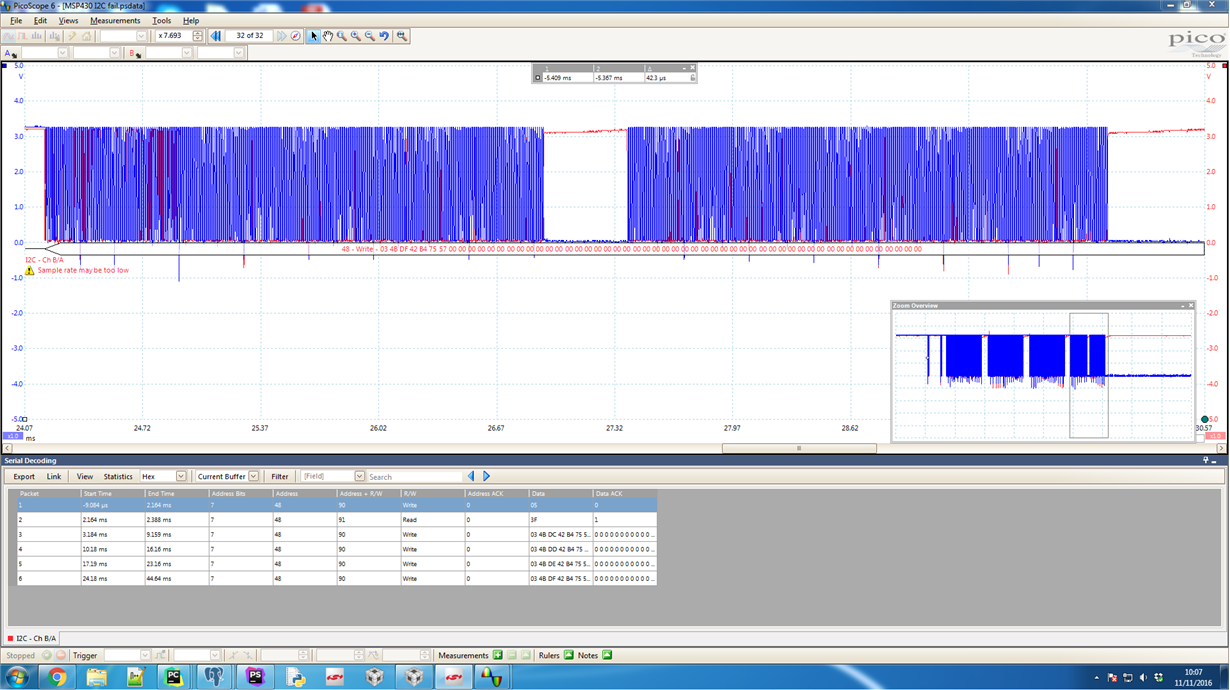

What currently happens is that the master queries how many packets (62 bytes each) the slave can hold, then sends over a few packets, which the MSP simply discards for now. This happens every 100 ms on the master side, and in the minimal example below the MSP always replies with 63 when asked how many packets it can hold. I have tested the master against a Total Phase Aardvark and everything works fine there, so I'm sure the problem is on the MSP side. The problem is as follows:

The program works for 15-20 minutes, receiving tens of thousands of packets. At some point, however, the slave starts holding the clock line low and, when paused in the debugger, appears to be stuck in the start interrupt. The same sequence of events leads up to this every single time:

1) Master queries how many packets the MSP can hold.

2) A packet is sent successfully.

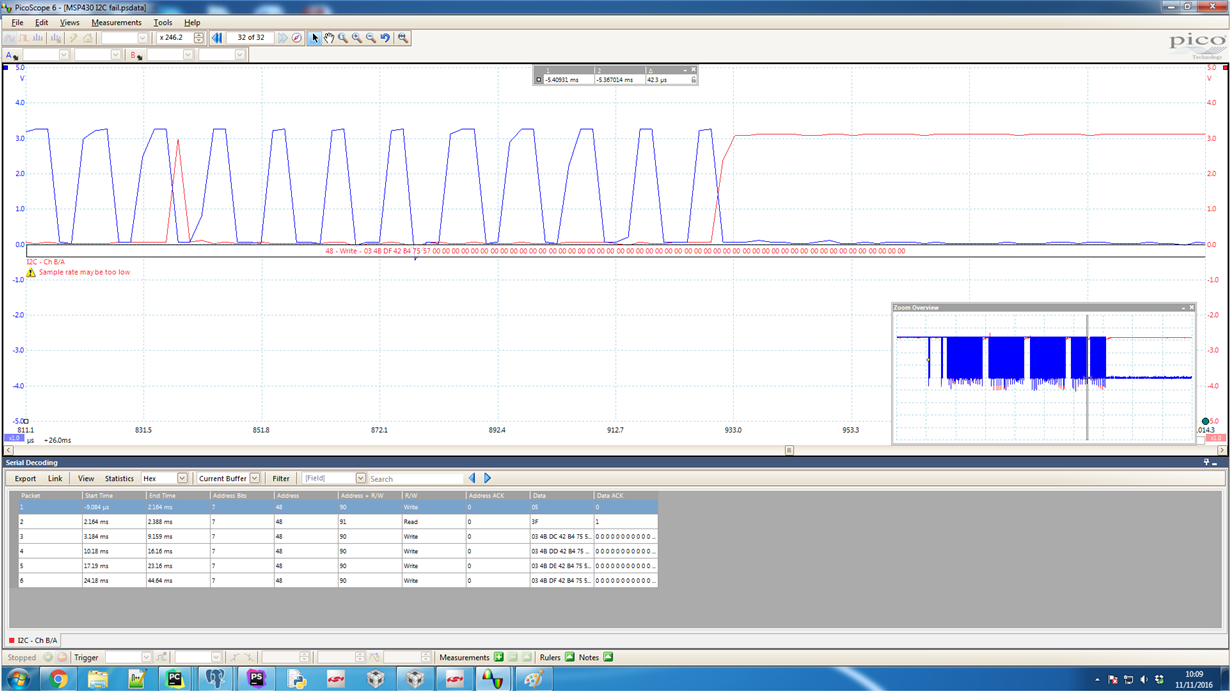

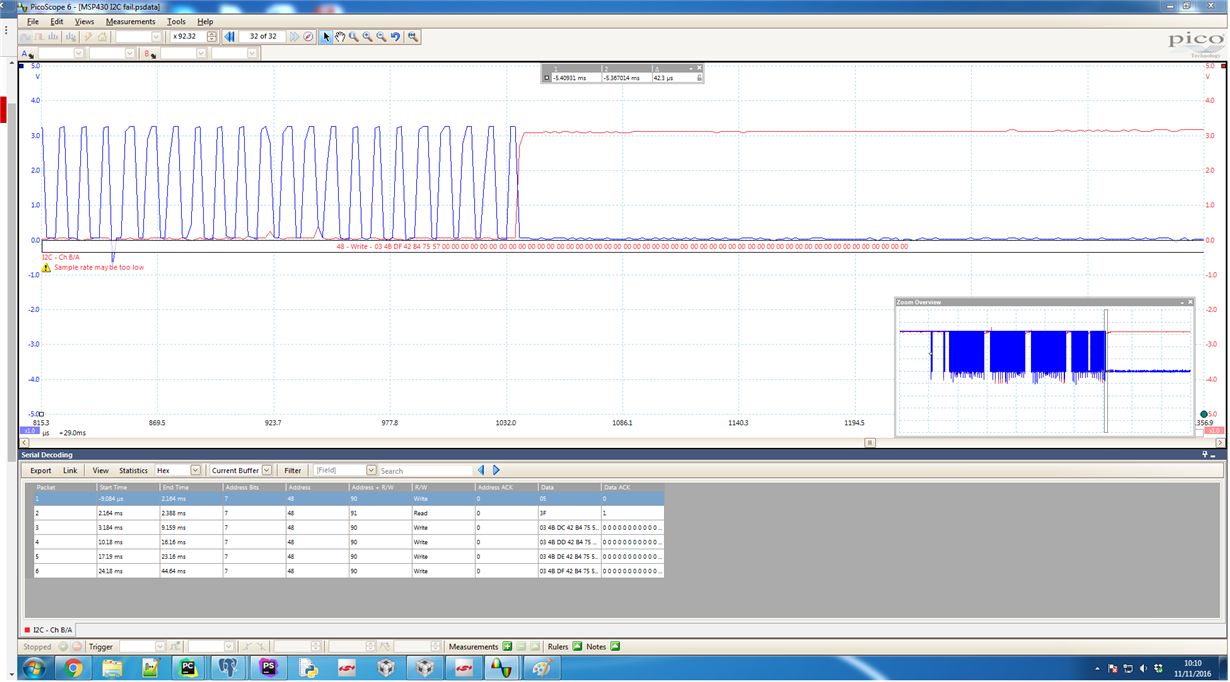

3) Another packet is attempted, but fewer than 62 bytes are received by the MSP (counted by logging how many Rx interrupts I receive). No stop condition is sent, so the master times out.

4) Another packet is attempted. A single byte is sent before the stop condition is sent.

5) Another packet is attempted to be sent. A start interrupt, then a Tx interrupt happens and the device hangs.

Ignoring the fact that I'm not handling the timeout errors on the master side, something very strange is happening to cause that sequence of events, but that's what happens every single time.
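To reason about this sequence away from the hardware, here is a small host-side model of my state handling in plain C (no driverlib). It is only a sketch under assumptions: the `SlaveModel` struct and the `model_*` and `replay_failure_sequence` names are made up for illustration, each packet is assumed to be 62 bytes on the wire including the message id, the truncated packet is arbitrarily taken to be 40 bytes, and the model ignores all timing and bus-level behaviour. It just replays steps 1 to 5 and counts Tx events that arrive while `tx_len` is 0, i.e. cases where my Tx branch would not write a byte.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RX_MODE     0x01u
#define TX_MODE     0x02u
#define I2C_DONE    0x04u
#define I2C_RX_DONE (RX_MODE | I2C_DONE)
#define I2C_TX_DONE (TX_MODE | I2C_DONE)

#define MESSAGE_ADD_PACKET    3u
#define MESSAGE_GET_NUM_SLOTS 5u

/* Hypothetical host-side stand-in for the firmware's globals. */
typedef struct {
    uint8_t  mode;
    uint8_t  rx_buff[64];
    size_t   rx_count;       /* bytes stored since the last decode */
    uint8_t  tx_len;
    unsigned tx_unserviced;  /* Tx events that found tx_len == 0 */
} SlaveModel;

void model_init(SlaveModel *s) {
    memset(s, 0, sizeof *s);
    s->mode = RX_MODE;
}

/* Rx interrupt: store one byte (the model asserts instead of overflowing). */
void model_rx(SlaveModel *s, uint8_t byte) {
    assert(s->rx_count < sizeof s->rx_buff);
    s->rx_buff[s->rx_count++] = byte;
}

/* Tx interrupt: a byte is only supplied while tx_len > 0. */
void model_tx(SlaveModel *s) {
    if (s->tx_len > 0) {
        --s->tx_len;
    } else {
        ++s->tx_unserviced;
    }
}

/* Stop interrupt: flag completion for the main loop. */
void model_stop(SlaveModel *s) {
    s->mode |= I2C_DONE;
}

/* One pass of the main loop's switch, including the decode step. */
void model_main_loop(SlaveModel *s) {
    if (s->mode == I2C_RX_DONE) {
        uint8_t id = s->rx_buff[0];
        s->rx_count = 0;
        s->tx_len = (id == MESSAGE_GET_NUM_SLOTS) ? 1 : 0;
        s->mode = (s->tx_len > 0) ? TX_MODE : RX_MODE;
    } else if (s->mode == I2C_TX_DONE) {
        s->mode = RX_MODE;
    }
}

/* Replays steps 1-5 and returns the number of unserviced Tx events. */
unsigned replay_failure_sequence(void) {
    SlaveModel s;
    int i;
    model_init(&s);

    /* 1) Query, then the one-byte reply. */
    model_rx(&s, MESSAGE_GET_NUM_SLOTS);
    model_stop(&s);
    model_main_loop(&s);
    model_tx(&s);
    model_stop(&s);
    model_main_loop(&s);

    /* 2) One complete packet. */
    model_rx(&s, MESSAGE_ADD_PACKET);
    for (i = 1; i < 62; ++i) model_rx(&s, 0);
    model_stop(&s);
    model_main_loop(&s);

    /* 3) Truncated packet, no stop condition. */
    model_rx(&s, MESSAGE_ADD_PACKET);
    for (i = 1; i < 40; ++i) model_rx(&s, 0);

    /* 4) A single byte, then a stop. */
    model_rx(&s, MESSAGE_ADD_PACKET);
    model_stop(&s);
    model_main_loop(&s);

    /* 5) Start followed by a Tx interrupt. */
    model_tx(&s);
    return s.tx_unserviced;
}
```

In this model `replay_failure_sequence()` returns 1: the Tx event in step 5 arrives with `tx_len` equal to 0, so nothing would be written to the transmit buffer. As far as I understand the USCI documentation, a slave transmitter holds SCL low until the transmit buffer is written, which would at least be consistent with the hang I see, but I have not confirmed that this is the actual cause.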

Below is the minimal working example that reproduces the problem. My particular concern is with the SetUpRx and SetUpTx functions. The Code Composer Resource Explorer only gives examples of Rx or Tx on their own, and I'm not sure I'm combining them in the right way. I also tried removing SetUpRx completely, putting the device into transmit mode and replacing all calls to SetUpTx/SetUpRx with mode = TX_MODE/RX_MODE, which did work but still eventually held the clock line low. Ultimately I'm not 100% sure how to set this up to handle both Rx and Tx requests.

    #include "driverlib.h"

    #define SLAVE_ADDRESS (0x48)

    // During main loop, set mode to either RX_MODE or TX_MODE
    // When I2C is finished, OR mode with I2C_DONE, hence upon exit mode will be one of I2C_RX_DONE or I2C_TX_DONE
    #define RX_MODE (0x01)
    #define TX_MODE (0x02)
    #define I2C_DONE (0x04)
    #define I2C_RX_DONE (RX_MODE | I2C_DONE)
    #define I2C_TX_DONE (TX_MODE | I2C_DONE)

    /**
     * I2C message ids
     */
    #define MESSAGE_ADD_PACKET (3)
    #define MESSAGE_GET_NUM_SLOTS (5)

    static volatile uint8_t mode = RX_MODE;     // current mode, TX or RX
    static volatile uint8_t rx_buff[64] = {0};  // where rx data is written
    static volatile uint8_t* rx_data = rx_buff; // pointer to next rx byte write location
    static volatile uint8_t tx_len = 0;         // number of bytes to reply with

    static inline void SetUpRx(void) {
        // Specify receive mode
        USCI_B_I2C_setMode(USCI_B0_BASE, USCI_B_I2C_RECEIVE_MODE);

        // Enable I2C Module to start operations
        USCI_B_I2C_enable(USCI_B0_BASE);

        // Enable interrupts
        USCI_B_I2C_clearInterrupt(USCI_B0_BASE, USCI_B_I2C_TRANSMIT_INTERRUPT);
        USCI_B_I2C_enableInterrupt(USCI_B0_BASE, USCI_B_I2C_START_INTERRUPT + USCI_B_I2C_RECEIVE_INTERRUPT + USCI_B_I2C_STOP_INTERRUPT);

        mode = RX_MODE;
    }

    static inline void SetUpTx(void) {
        // Set in transmit mode
        USCI_B_I2C_setMode(USCI_B0_BASE, USCI_B_I2C_TRANSMIT_MODE);

        // Enable I2C Module to start operations
        USCI_B_I2C_enable(USCI_B0_BASE);

        // Enable transmit interrupt
        USCI_B_I2C_clearInterrupt(USCI_B0_BASE, USCI_B_I2C_RECEIVE_INTERRUPT);
        USCI_B_I2C_enableInterrupt(USCI_B0_BASE, USCI_B_I2C_START_INTERRUPT + USCI_B_I2C_TRANSMIT_INTERRUPT + USCI_B_I2C_STOP_INTERRUPT);

        mode = TX_MODE;
    }

    /**
     * Parse the incoming message and set up the tx_data pointer and tx_len for I2C reply
     *
     * In most cases, tx_buff is filled with data as the replies that require it either aren't used frequently or use few bytes.
     * Straight pointer assignment is likely better but that means everything will have to be volatile which seems overkill for this
     */
    static void DecodeRx(void) {
        static uint8_t message_id = 0;

        message_id = (*rx_buff);
        rx_data = rx_buff;

        switch (message_id) {
        case MESSAGE_ADD_PACKET: // Add some data...
            // do nothing for now
            tx_len = 0;
            break;
        case MESSAGE_GET_NUM_SLOTS: // How many packets can we send to device
            tx_len = 1;
            break;
        default:
            tx_len = 0;
            break;
        }
    }

    void main(void) {
        // Stop WDT
        WDT_A_hold(WDT_A_BASE);

        // Assign I2C pins to USCI_B0
        GPIO_setAsPeripheralModuleFunctionInputPin(GPIO_PORT_P3, GPIO_PIN0 + GPIO_PIN1);

        // Initialize I2C as a slave device
        USCI_B_I2C_initSlave(USCI_B0_BASE, SLAVE_ADDRESS);

        // go into listening mode
        SetUpRx();

        while(1) {
            __bis_SR_register(LPM4_bits + GIE);

            // Message received over I2C, check if we have anything to transmit
            switch (mode) {
            case I2C_RX_DONE:
                DecodeRx();
                if (tx_len > 0) {
                    // start a reply
                    SetUpTx();
                } else {
                    // nothing to do, back to listening
                    mode = RX_MODE;
                }
                break;
            case I2C_TX_DONE:
                // go back to listening
                SetUpRx();
                break;
            default:
                break;
            }
        }
    }

    /**
     * I2C interrupt routine
     */
    #pragma vector=USCI_B0_VECTOR
    __interrupt void USCI_B0_ISR(void) {
        switch(__even_in_range(UCB0IV,12)) {
        case USCI_I2C_UCSTTIFG:
            break;
        case USCI_I2C_UCRXIFG:
            *rx_data = USCI_B_I2C_slaveGetData(USCI_B0_BASE);
            ++rx_data;
            break;
        case USCI_I2C_UCTXIFG:
            if (tx_len > 0) {
                USCI_B_I2C_slavePutData(USCI_B0_BASE, 63);
                --tx_len;
            }
            break;
        case USCI_I2C_UCSTPIFG:
            // OR'ing mode will let it be flagged in the main loop
            mode |= I2C_DONE;
            __bic_SR_register_on_exit(LPM4_bits);
            break;
        }
    }
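One defensive variation I have been considering, but have not tried on hardware, is to bound the receive write so a missed stop condition can never push the write position past the 64-byte buffer, and to have the Tx path always hand back a byte even when nothing is queued. The sketch below is host-side C only, so it can be checked without the MSP. Everything in it (`RxBuffer`, `rx_reset`, `rx_store`, `TxQueue`, `tx_next`, and the `TX_PAD_BYTE` filler value) is a hypothetical name of mine, not part of driverlib, and calling `rx_reset` from the start interrupt is an assumption about where the reset would belong.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RX_BUFF_SIZE 64u
#define TX_PAD_BYTE  0xFFu  /* hypothetical filler when nothing is queued */

/* Receive buffer with an explicit fill count instead of a raw pointer. */
typedef struct {
    uint8_t buff[RX_BUFF_SIZE];
    size_t  count;
    bool    overflowed;
} RxBuffer;

/* Intended to be called when a new transfer begins. */
void rx_reset(RxBuffer *rx) {
    rx->count = 0;
    rx->overflowed = false;
}

/* Stores one byte; returns false and sets the flag once the buffer is full. */
bool rx_store(RxBuffer *rx, uint8_t byte) {
    if (rx->count >= RX_BUFF_SIZE) {
        rx->overflowed = true;
        return false;
    }
    rx->buff[rx->count++] = byte;
    return true;
}

/* Pending reply bytes. */
typedef struct {
    const uint8_t *data;
    size_t         len;
} TxQueue;

/* Always yields a byte to transmit: queued data first, then the filler. */
uint8_t tx_next(TxQueue *tx) {
    if (tx->len > 0) {
        --tx->len;
        return *tx->data++;
    }
    return TX_PAD_BYTE;
}
```

In the ISR, the Rx branch would pass the received byte to `rx_store` and the Tx branch would write the result of `tx_next` to the transmit buffer on every Tx interrupt. Whether padding a reply like this is acceptable depends on what the master expects, so treat it as an idea to test, not a fix.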

Any help on this would be much appreciated and if you need any more information please ask.

Thank you!